
Learning Hierarchical Features from Generative Models

Zhao, Shengjia and Song, Jiaming and Ermon, Stefano

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


A Critical Paper Review by Alex Lamb: https://www.youtube.com/watch?v=_seX4kZSr_8

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A.

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


_Objective:_ Image-to-image translation to perform visual attribute transfer using unpaired images.

_Dataset:_ [Cityscapes](https://www.cityscapes-dataset.com/), [CMP Facade](http://cmp.felk.cvut.cz/%7Etylecr1/facade/), [UT Zappos50k](http://vision.cs.utexas.edu/projects/finegrained/utzap50k/) and [ImageNet](http://www.image-net.org/).

_Code:_ [CycleGAN](https://github.com/junyanz/CycleGAN)

## Inner-workings:

The model consists of two GANs, one per domain, each with its own Generator and Discriminator, plus two additional losses (called consistency losses) which make sure that translating an image to the other domain and then back yields an image close to the original.

[](https://cloud.githubusercontent.com/assets/17261080/26717449/bcd8a9cc-477d-11e7-9137-fd277a0ec04f.png)

For the consistency loss they use a pixel-wise L1 norm:

[](https://cloud.githubusercontent.com/assets/17261080/26717733/bc088cdc-477e-11e7-96af-2defa06a1660.png)

## Architecture:

Based on [Perceptual losses for real-time style transfer and super-resolution](https://arxiv.org/pdf/1603.08155.pdf), code available [here](https://github.com/jcjohnson/fast-neural-style). Training appears to rely on several tricks and even uses a batch size of 1.

## Results:

Very impressive. The key point is that you don't need paired images, which makes this trainable on any pair of domains that share the same underlying representation.

[](https://cloud.githubusercontent.com/assets/17261080/26717502/f6d1fb7e-477d-11e7-8174-7bdd621cf1b6.png)
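The consistency term described in this summary can be sketched in a few lines. This is only an illustration under stated assumptions: images are flattened to lists of floats, `g_ab` and `g_ba` are hypothetical stand-ins for the two generators, and the mean absolute difference is used as the pixel-wise L1 measure. It is not the code from the CycleGAN repository.

```python
from typing import Callable, List

Image = List[float]  # assumption: an image flattened to a list of pixel values
Generator = Callable[[Image], Image]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute pixel difference between two images of equal size."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(x: Image, y: Image, g_ab: Generator, g_ba: Generator) -> float:
    """Penalize round trips A -> B -> A and B -> A -> B that do not return the input."""
    forward = l1_distance(g_ba(g_ab(x)), x)   # x translated to B and back to A
    backward = l1_distance(g_ab(g_ba(y)), y)  # y translated to A and back to B
    return forward + backward


# Toy stand-ins for the generators (hypothetical): shift brightness up or down.
def brighten(img: Image) -> Image:
    return [p + 0.5 for p in img]


def darken(img: Image) -> Image:
    return [p - 0.5 for p in img]


def identity(img: Image) -> Image:
    return list(img)
```

When the two toy generators are exact inverses of each other the loss is zero; replacing one of them with `identity` makes both round trips miss the input and the loss becomes positive.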

The Unified Logging Infrastructure for Data Analytics at Twitter

Lee, George and Lin, Jimmy J. and Liu, Chuang and Lorek, Andrew and Ryaboy, Dmitriy V.

arXiv e-Print archive - 2012 via Local Bibsonomy

Keywords: dblp


The [paper](http://vldb.org/pvldb/vol5/p1771_georgelee_vldb2012.pdf) presents Twitter's logging infrastructure, how it evolved from application specific logging to a unified logging infrastructure and how session-sequences are used as a common case optimization for a large class of queries.

## Messaging Infrastructure

Twitter uses **Scribe** as its messaging infrastructure. A Scribe daemon runs on every production server and sends log data to a cluster of dedicated aggregators in the same data center. Scribe itself uses **Zookeeper** to discover the hostname of the aggregator. Each aggregator registers itself with Zookeeper. The Scribe daemon consults Zookeeper to find a live aggregator to which it can send the data. Colocated with the aggregators is the staging Hadoop cluster, which merges the per-category streams from all the server daemons and writes the compressed results to HDFS. These logs are then moved into the main Hadoop data warehouse and are deposited in per-category, per-hour directories (e.g. `/logs/category/YYYY/MM/DD/HH`). Within each directory, the messages are bundled in a small number of large files and are partially ordered by time.

Twitter uses **Thrift** as its data serialization framework, as it supports nested structures and was already being used elsewhere within Twitter. A system called **Elephant Bird** is used to generate Hadoop record readers and writers for arbitrary Thrift messages. Production jobs are written in **Pig (Latin)** and scheduled using **Oink**.
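As a small illustration of the per-category, per-hour layout mentioned above, the following sketch builds such a directory path from a category name and a timestamp. The function name, the `/logs` root default and the `client_events` category in the usage note are assumptions made for the example, not details taken from the paper.

```python
from datetime import datetime


def hourly_log_dir(category: str, ts: datetime, root: str = "/logs") -> str:
    """Build a path of the form <root>/<category>/YYYY/MM/DD/HH for one hour of logs."""
    return f"{root}/{category}/{ts:%Y/%m/%d/%H}"
```

For example, `hourly_log_dir("client_events", datetime(2012, 8, 27, 9, 30))` yields `/logs/client_events/2012/08/27/09`.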

## Application Specific Logging

Initially, all applications defined their own custom formats for logging messages. While this made it easy to develop application logging, it had many downsides as well.

* Inconsistent naming conventions, e.g. uid vs userId vs user_Id.

* Inconsistent semantics associated with each category name, causing a resource discovery problem.

* Inconsistent format of log messages.

All these issues make it difficult to reconstruct user session activity.

## Client Events

This is an effort within Twitter to develop a unified logging framework to get rid of all the issues discussed previously. A hierarchical, 6-level schema is imposed on all the events (as described in the table below).

| Component | Description | Example |
|-----------|------------------------------------|----------------------------------------------|
| client | client application | web, iPhone, android |
| page | page or functional grouping | home, profile, who_to_follow |
| section | tab or stream on a page | home, mentions, retweets, searches, suggestions |
| component | component object or objects | search_box, tweet |
| element | UI element within the component | button, avatar |
| action | actual user or application action | impression, click, hover |

**Table 1: Hierarchical decomposition of client event names.**

For example, the event `web:home:mentions:stream:avatar:profile_click` is logged whenever a user on twitter.com clicks the avatar (profile image) of a tweet in the mentions timeline (read the event name from right to left).

The alternate design was a tree-based model for logging client events. That model allowed for an arbitrarily deep event namespace with logging as fine-grained as required. But the client events model was chosen to make top-level aggregate queries easier.
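To make the six-level naming scheme concrete, here is a minimal sketch that splits an event name into the components of Table 1 and aggregates counts at the (client, page) level. The helper names `parse_event_name` and `count_by_page` are hypothetical and only illustrate why a fixed-depth schema keeps top-level aggregation simple.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class EventName(NamedTuple):
    client: str
    page: str
    section: str
    component: str
    element: str
    action: str


def parse_event_name(name: str) -> EventName:
    """Split 'client:page:section:component:element:action' into its six parts."""
    parts = name.split(":")
    if len(parts) != 6:
        raise ValueError(f"expected 6 components, got {len(parts)}: {name!r}")
    return EventName(*parts)


def count_by_page(names: Iterable[str]) -> Counter:
    """Aggregate event counts at the (client, page) level."""
    return Counter((e.client, e.page) for e in map(parse_event_name, names))
```

Because every name has exactly six components, an aggregate over any prefix (here client and page) needs no tree traversal, only a fixed-position lookup.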

A client event is a Thrift structure that contains the components given in the table below.

| Field | Description |
|-----------------|---------------------------------|
| event initiator | {client, server} × {user, app} |
| event_name | event name |
| user_id | user id |
| session_id | session id |
| ip | user’s IP address |
| timestamp | timestamp |
| event_details | event details |

**Table 2: Definition of a client event.**

The logging infrastructure is unified in two senses:

* All log messages share a common format with clear semantics.

* All log messages are stored in a single place.

## Session Sequences

A session sequence is a sequence of symbols *S = {s<sub>0</sub>, s<sub>1</sub>, s<sub>2</sub>, ..., s<sub>n</sub>}* such that each symbol is drawn from a finite alphabet *Σ*. A bijective mapping is defined between Σ and the universe of event names. Each symbol in Σ is represented by a valid Unicode code point (frequent events are assigned smaller code points), so each session sequence becomes a valid Unicode string. Once all logs have been imported into the main data warehouse, a histogram of event counts is created and used to map event names to Unicode code points. The counts and samples of each event type are stored in a known location in HDFS. Session sequences are reconstructed from the raw client event logs via a *group-by* on *user_id* and *session_id*. Session sequences are materialized because raw client event logs are difficult to work with, for the following reasons:

* A lot of brute force scans.

* Large group-by operations needed to reconstruct user session.

#### Alternate Designs Considered

* Reorganize complete Thrift messages by reconstructing user sessions - This solves the second problem but not the first.

* Use a columnar storage format - This addresses the first issue but it just reduces the time taken by mappers and not the number of mappers itself.

The materialized session sequences are much smaller than the raw client event logs (around 50 times smaller) and address both issues.
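The construction described in this section (histogram of event counts, frequency-ranked code points, group-by on user and session) can be sketched as follows. This is a toy in-memory version, assuming events are plain dictionaries with the fields of Table 2; the starting code point `0x21` is an arbitrary choice to keep the demo output printable, and the real system runs as Hadoop jobs rather than in a single process.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

FIRST_CODE_POINT = 0x21  # assumption: start at '!' so the toy output is printable


def build_alphabet(events: Iterable[dict]) -> Dict[str, str]:
    """Map event names to characters; more frequent events get smaller code points."""
    counts = Counter(e["event_name"] for e in events)
    ranked = sorted(counts, key=lambda name: (-counts[name], name))  # ties broken by name
    return {name: chr(FIRST_CODE_POINT + i) for i, name in enumerate(ranked)}


def session_sequences(events: List[dict], alphabet: Dict[str, str]) -> Dict[Tuple, str]:
    """Group events by (user_id, session_id) and emit one string per session."""
    sessions = defaultdict(list)
    for e in sorted(events, key=lambda e: e["timestamp"]):
        sessions[(e["user_id"], e["session_id"])].append(alphabet[e["event_name"]])
    return {key: "".join(symbols) for key, symbols in sessions.items()}
```

Once sessions are strings, queries over user behavior reduce to ordinary string operations, which is what makes the compact representation convenient.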

## Client Event Catalog

To enhance the accessibility of the client event logs, an automatically generated event catalog is used along with a browsing interface that lets users browse, search and access sample entries for the various client events. (These sample entries are the same entries that were mentioned in the previous section.) The catalog is rebuilt every day and is always up to date.

## Applications

Client Event Logs and Session Sequences are used in the following applications:

* Summary Statistics - Session sequences are used to compute various statistics about sessions.

* Event Counting - Used to understand what fraction of users take advantage of a particular feature.

* Funnel Analytics - Used to track user attention through a multi-step process such as signup.

* User Modeling - Used to identify "interesting" user behavior. N-gram models (from the NLP domain) can be extended to measure how important temporal signals are by modeling user behavior on the basis of the last n actions. The paper also mentions the possibility of extracting "activity collocations" based on the notion of collocations.
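Two of the applications listed above translate directly into string operations on session sequences. The sketch below counts n-grams of consecutive events and computes how many sessions reach each step of a funnel in order. Both helpers and the single-character symbols are illustrative assumptions, not the paper's Pig scripts.

```python
from collections import Counter
from typing import Iterable, List


def ngram_counts(sequences: Iterable[str], n: int) -> Counter:
    """Count every run of n consecutive event symbols across all sessions."""
    counts: Counter = Counter()
    for seq in sequences:
        counts.update(seq[i:i + n] for i in range(len(seq) - n + 1))
    return counts


def funnel_counts(sequences: Iterable[str], steps: str) -> List[int]:
    """For each funnel depth k, count sessions that hit the first k steps in order."""
    reached = [0] * len(steps)
    for seq in sequences:
        pos = 0
        for k, step in enumerate(steps):
            pos = seq.find(step, pos)  # next occurrence of this step, if any
            if pos < 0:
                break
            reached[k] += 1
            pos += 1
    return reached
```

With the toy sessions `["abc", "ab", "ca", "b"]` and the funnel `"abc"`, three sessions reach the first step, two reach the second and one completes the funnel.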

## Possible Extensions

Session sequences are limited in the sense that they capture only the event name and exclude other details. The solution adopted by Twitter is to use a generic indexing infrastructure that integrates with Hadoop at the level of InputFormats. The indexes reside with the data, making it easier to reindex the data. An alternative would have been to use **Trojan layouts**, which embed indexes in the HDFS block header, but this means that indexing would require the data to be rewritten. Another possible extension would be to leverage more analogies from the field of Natural Language Processing. This would include the use of automatic grammar induction techniques to learn a hierarchical decomposition of user activity. Another area of exploration is around leveraging advanced visualization techniques for exploring sessions and mapping interesting behavioral patterns into distinct visual patterns that can be easily recognized.

|

Playing Atari with Deep Reinforcement Learning

Volodymyr Mnih and Koray Kavukcuoglu and David Silver and Alex Graves and Ioannis Antonoglou and Daan Wierstra and Martin Riedmiller

arXiv e-Print archive - 2013 via Local arXiv

Keywords: cs.LG

First published: 2013/12/19 (11 years ago)

Abstract: We present the first deep learning model to successfully learn control policies directly from high-dimensional sensory input using reinforcement learning. The model is a convolutional neural network, trained with a variant of Q-learning, whose input is raw pixels and whose output is a value function estimating future rewards. We apply our method to seven Atari 2600 games from the Arcade Learning Environment, with no adjustment of the architecture or learning algorithm. We find that it outperforms all previous approaches on six of the games and surpasses a human expert on three of them.


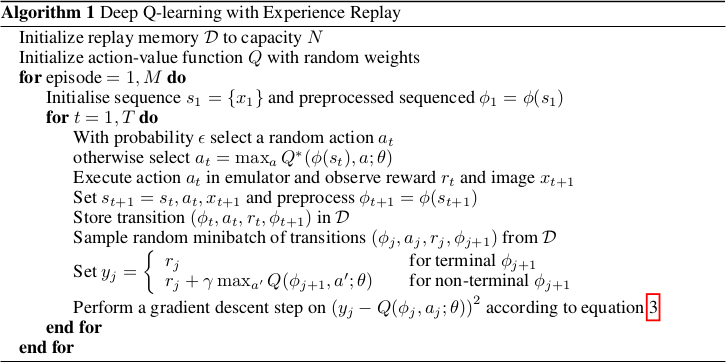

* They use an implementation of Q-learning (i.e. reinforcement learning) with CNNs to automatically play Atari games.

* The algorithm receives the raw pixels as its input and has to choose buttons to press as its output. No hand-engineered features are used. So the model "sees" the game and "uses" the controller, just like a human player would.

* The model achieves good results on various games, beating all previous techniques and sometimes even surpassing human players.

### How

* Deep Q Learning
  * *This is yet another explanation of deep Q learning, see also [this blog post](http://www.nervanasys.com/demystifying-deep-reinforcement-learning/) for a longer explanation.*
  * While playing, sequences of the form (`state1`, `action1`, `reward`, `state2`) are generated.
    * `state1` is the current game state. The agent only sees the pixels of that state. (Example: Screen shows enemy.)
    * `action1` is an action that the agent chooses. (Example: Shoot!)
    * `reward` is the direct reward received for picking `action1` in `state1`. (Example: +1 for a kill.)
    * `state2` is the next game state, after the action was chosen in `state1`. (Example: Screen shows dead enemy.)
  * One can pick actions at random for some time to generate lots of such tuples. That leads to a replay memory.

* Direct reward
  * After playing randomly for some time, one can train a model to predict the direct reward given a screen (we don't want to use the whole state, just the pixels) and an action, i.e. `Q(screen, action) -> direct reward`.
  * That function would need a forward pass for each possible action that we could take. So for e.g. 8 buttons that would be 8 forward passes. To make things more efficient, we can let the model directly predict the direct reward for each available action, e.g. for 3 buttons `Q(screen) -> (direct reward of action1, direct reward of action2, direct reward of action3)`.
  * We can then sample examples from our replay memory. The input per example is the screen. The output is the reward as a tuple. E.g. if we picked button 1 of 3 in one example and received a reward of +1 then our output/label for that example would be `(1, 0, 0)`.
  * We can then train the model by playing completely randomly for some time, then sample some batches and train using a mean squared error. Then play a bit less randomly, i.e. start to use the action which the network thinks would generate the highest reward. Then train again, and so on.

* Indirect reward
  * Doing the previous steps, the model will learn to anticipate the *direct* reward correctly. However, we also want it to predict indirect rewards. Otherwise, the model would e.g. never learn to shoot rockets at enemies, because the reward from killing an enemy would come many frames later.
  * To learn the indirect reward, one simply adds the reward value of the highest-reward action according to `Q(state2)` to the direct reward.
  * I.e. if we have a tuple (`state1`, `action1`, `reward`, `state2`), we would not add (`state1`, `action1`, `reward`) to the replay memory, but instead (`state1`, `action1`, `reward + highestReward(Q(screen2))`). (Where `highestReward()` returns the reward of the action with the highest reward according to Q().)
  * By training to predict `reward + highestReward(Q(screen2))` the network learns to anticipate the direct reward *and* the indirect reward. It takes a leap of faith to accept that this will ever converge to a good solution, but it does.
  * We then add `gamma` to the equation: `reward + gamma*highestReward(Q(screen2))`. `gamma` may be set to 0.9. It is a discount factor that devalues future states, e.g. because the world is not deterministic and therefore we can't exactly predict what's going to happen. Note that Q will automatically learn to stack it, e.g. `state3` will be discounted by `gamma^2` at `state1`.

* This paper
  * They use the mentioned Deep Q Learning to train their model Q.
  * They use a k-th frame technique, i.e. they let the model decide upon an action at every 4th frame (in this case).
  * Q is implemented via a neural net. It receives 84x84x4 grayscale pixels that show the game and projects that onto the rewards of 4 to 18 actions.
  * The input is HxWx4 because they actually feed the last 4 frames into the network, instead of just 1 frame. So the network knows more about how things are moving.
  * The network architecture is:
    * 84x84x4 (input)
    * 16 convs, 8x8, stride 4, ReLU
    * 32 convs, 4x4, stride 2, ReLU
    * 256 fully connected neurons, ReLU
    * `<N_actions>` fully connected neurons, linear
  * They use a replay memory of 1 million frames.
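Two pieces of the explanation above lend themselves to a short worked sketch: the training target `reward + gamma*highestReward(Q(screen2))` and the spatial sizes produced by the listed convolutions. The code below is a plain-Python illustration, not the paper's implementation. The `terminal` flag (no future reward once an episode has ended) and the no-padding assumption in `conv_output_size` are assumptions that the notes above do not spell out.

```python
from typing import List, Sequence


def q_learning_targets(
    rewards: Sequence[float],
    next_q_values: Sequence[Sequence[float]],
    terminal: Sequence[bool],
    gamma: float = 0.9,
) -> List[float]:
    """Compute reward + gamma * max_a Q(state2, a) for a batch of transitions."""
    targets = []
    for reward, q_next, done in zip(rewards, next_q_values, terminal):
        # Assumption: after a terminal transition there is no future reward to add.
        future = 0.0 if done else gamma * max(q_next)
        targets.append(reward + future)
    return targets


def conv_output_size(size: int, kernel: int, stride: int) -> int:
    """Output width of a convolution without padding (an assumption of this sketch)."""
    return (size - kernel) // stride + 1
```

Under the no-padding assumption, `conv_output_size(84, 8, 4)` gives 20 and `conv_output_size(20, 4, 2)` gives 9, so the fully connected layer would see 9 * 9 * 32 = 2592 inputs.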

### Results

* They ran experiments on the Atari games Beam Rider, Breakout, Enduro, Pong, Qbert, Seaquest and Space Invaders.

* Same architecture and hyperparameters for all games.

* Rewards were based on score changes in the games, i.e. they used +1 (score increased) and -1 (score decreased).

* Optimizer: RMSProp, Batch Size: 32.

* Trained for 10 million examples/frames per game.

* They had no problems with instability and their average Q value per game increased smoothly.

* Their method outperforms all previous approaches on six of the seven games.

* They managed to beat a human player in games that did not require much long-term strategy (the fewer frames a strategy spans, the better).

* Video: starts at 46:05.

https://youtu.be/dV80NAlEins?t=46m05s

*The original full algorithm, as shown in the paper.*

--------------------

### Rough chapter-wise notes

* (1) Introduction
  * Problems when using neural nets in reinforcement learning (RL):
    * The reward signal is often sparse, noisy and delayed.
    * Data samples are often assumed to be independent, while they are correlated in RL.
    * The data distribution can change when the algorithm learns new behaviours.
  * They use Q-learning with a CNN and stochastic gradient descent.
  * They use an experience replay mechanism (i.e. memory) from which they can sample previous transitions (for training).
  * They apply their method to Atari 2600 games in the Arcade Learning Environment (ALE).
  * They use only the visible pixels as input to the network, i.e. no manual feature extraction.

* (2) Background
  * Standard deep Q-learning explanation.
* (3) Related Work
  * TD-Gammon: "Solved" backgammon. Worked similarly to Q-learning and used a multi-layer perceptron.
  * Attempts to copy TD-Gammon to other games failed.
  * Research was focused on linear function approximators as there were problems with non-linear ones diverging.
  * Recently, there has been renewed interest in using neural nets for reinforcement learning. Some attempts were made to fix divergence problems with gradient temporal-difference methods.
  * NFQ is a very similar method (to the one in this paper), but worked on the whole batch instead of minibatches, making it slow. It also first applied dimensionality reduction via autoencoders on the images instead of training on them end-to-end.
  * HyperNEAT was applied to Atari games and evolved a neural net for each game. The networks learned to exploit design flaws.

* (4) Deep Reinforcement Learning
  * They want to connect a reinforcement learning algorithm with a deep neural network, e.g. to get rid of handcrafted features.
  * The network is supposed to run on the raw RGB images.
  * They use experience replay, i.e. store tuples of (pixels, chosen action, received reward) in a memory and use that during training.
  * They use Q-learning.
  * They use an epsilon-greedy policy.
  * Advantages of using experience replay instead of learning "live" during game playing:
    * Experiences can be reused many times (more efficient).
    * Samples are less correlated.
    * Learned parameters from one batch have less influence on the distribution of the examples in the next batch.
  * They save the last N experiences and sample uniformly from them during training.

* (4.1) Preprocessing and Model Architecture
  * Raw Atari images are 210x160 pixels with 128 possible colors.
  * They downsample them to 110x84 pixels and then crop the 84x84 playing area out of them.
  * They also convert the images to grayscale.
  * They use the last 4 frames as input and stack them.
  * So their network input has shape 84x84x4.
  * They use one output neuron per possible action. So they can compute the Q-value (expected reward) of each action with one forward pass.
  * Architecture: 84x84x4 (input) => 16 8x8 convs, stride 4, ReLU => 32 4x4 convs, stride 2, ReLU => fc 256, ReLU => fc N actions, linear
  * 4 to 18 actions/outputs (depends on the game).
  * Aside from the outputs, the architecture is the same for all games.

* (5) Experiments
  * Games that they played: Beam Rider, Breakout, Enduro, Pong, Qbert, Seaquest, Space Invaders
  * They use the same architecture and hyperparameters for all games.
  * They give a reward of +1 whenever the in-game score increases and -1 whenever it decreases.
  * They use RMSProp.
  * Mini batch size was 32.
  * They train for 10 million frames/examples.
  * They initialize epsilon (in their epsilon greedy strategy) to 1.0 and decrease it linearly to 0.1 at one million frames.
  * They let the agent decide upon an action at every 4th in-game frame (3rd in Space Invaders).

* (5.1) Training and stability
  * They plot the average reward and Q-value per N games to evaluate the agent's training progress:
    * The average reward increases in a noisy way.
    * The average Q value increases smoothly.
  * They did not experience any divergence issues during their training.
* (5.2) Visualizing the Value Function
  * The agent learns to predict the value function accurately, even for rather long sequences (here: ~25 frames).
* (5.3) Main Evaluation
  * They compare to three other methods that use hand-engineered features and/or use the pixel data combined with significant prior knowledge.
  * They mostly outperform the other methods.
  * They managed to beat a human player in three games. The ones where the human won seemed to require strategies that stretched over longer time frames.
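The experience replay and exploration schedule summarized in these notes can be sketched with the standard library alone. The class and function names are hypothetical, and the stored tuple follows the (`state1`, `action1`, `reward`, `state2`) form used earlier in this summary. This is a minimal illustration rather than the authors' implementation.

```python
import random
from collections import deque


class ReplayMemory:
    """Keep the last `capacity` transitions and sample uniformly from them."""

    def __init__(self, capacity: int):
        self.buffer = deque(maxlen=capacity)  # oldest entries are dropped automatically

    def add(self, state, action, reward, next_state) -> None:
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size: int, rng=random):
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def epsilon_at(frame: int, start: float = 1.0, end: float = 0.1,
               anneal_frames: int = 1_000_000) -> float:
    """Linearly anneal epsilon from `start` to `end`, then hold it constant."""
    fraction = min(frame / anneal_frames, 1.0)
    return start + fraction * (end - start)


def choose_action(q_values, epsilon: float, rng=random) -> int:
    """Epsilon-greedy: a random action with probability epsilon, else the best one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)
```

Sampling uniformly from a bounded buffer is what decorrelates consecutive frames, and the annealed epsilon moves the agent gradually from random play towards acting on its own Q-value estimates.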

|

Dropout as data augmentation

Konda, Kishore Reddy and Bouthillier, Xavier and Memisevic, Roland and Vincent, Pascal

arXiv e-Print archive - 2015 via Local Bibsonomy

Keywords: dblp


This paper suggests a novel explanation for why dropout training is helpful: it corresponds to an adaptive data augmentation method. The authors point out that sampling a mask over the hidden units of a network (effectively setting the corresponding units to 0) has the same effect as feeding in an input tailored to yield activations of 0 for those units and unchanged activations for all other units. Since this "ghost" example has to be different from the original example, and since each different mask corresponds to a different "ghost" example, mask sampling is effectively similar to data augmentation.

While in practice it might not be possible to find a ghost example that replicates the dropout hidden activations exactly, the authors show that finding an "approximate" ghost example, one that minimizes a distance between the target dropout activation and the deterministic activation of the ghost example, works well. They show that training a deep neural net on additional data generated by this procedure yields results that are at least as good as regular dropout on MNIST and CIFAR-10. (The deterministic neural net still uses regular dropout at the input layer; however, they do show that the additional ghost examples are necessary to match the neural net trained with dropout at all layers.)

The authors then use this interpretation to justify a variation of dropout where the dropout rate isn't fixed, but is itself randomly sampled from some range for each example. If we think of dropout at a fixed rate as adding a specific class of ghost data, varying the dropout rate corresponds to enriching the ghost data pool even more. The experiments show that this can help, though not by much.

Finally, the authors propose an explanation of a property of dropout: that it tends to generate hidden representations that are sparser. Again, they rely on their interpretation of dropout as data augmentation. The explanation goes as follows. Training on the ghost data distribution might imply that the classification problem has become significantly harder. Specifically, it is quite possible that the addition of new ghost examples generates new isolated class clusters in input space that the model must now learn to discriminate. They hypothesize that the generation of such additional clusters encourages sparsity. To test this hypothesis, the authors simulate the scenario synthetically by sampling data on a circle, which is divided into small arcs, each assigned to one of 10 possible classes in cycling order. Decreasing the arc length thus increases the number of arcs, i.e. class clusters. They show that training deep networks on datasets with an increasing number of class clusters does yield increasingly sparse representations. This suggests that dropout might indeed be equivalent to modifying the input distribution by adding such isolated class-specific clusters in input space.

One assumption behind this analysis is that the sparsity patterns (i.e. the set of non-zero dimensions) play an important role in classification and carry most of the discriminative class information. This assumption is also confirmed in experiments, where replacing the ReLU activation function with a binary activation (1 if the pre-activation is positive and 0 otherwise) after training still yields a network with good performance (though slightly worse).

#### My two cents

This is a really original and thought-provoking paper. One interpretation I make of these results is that the inductive bias corresponding to using a deep neural network with ReLU activations is more valuable than one might have thought, and that the usefulness of deep neural networks goes beyond just being black boxes that can learn data-dependent representations. Otherwise, it's not clear to me why the ghost data implicitly generated by the architecture would be useful at all.

This also suggests an experiment where such ghost samples would be fed to another type of classifier, such as an SVM, to test whether the data augmentation is useful in itself and reflects meaningful structure in the data, as opposed to being somehow useful only for neural nets.

I note that the results are mostly specific to architectures based on ReLU activations (not that this is a problem, but one should keep this in mind).

I'd really like to see what the ghost samples look like. Do they correspond to interpretable images? The authors also mention that exploring how the samples change with training would be interesting to investigate, and I agree.

Finally, I think there might be a typo in Figure 1. While the labels of a) and b) state that the arc length is smaller for a) than for b), the plot clearly shows otherwise.
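The approximate ghost-example search described in this summary can be illustrated on a toy scale. The sketch below assumes a single fully connected ReLU layer, a squared-error distance, and plain gradient descent on the input with step halving; all of these are simplifications chosen for the example and not necessarily the procedure used by the authors.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def hidden(W: Matrix, b: Vector, x: Vector) -> Vector:
    """ReLU activations of one fully connected layer."""
    return [max(sum(w * v for w, v in zip(row, x)) + bias, 0.0)
            for row, bias in zip(W, b)]


def ghost_loss(W: Matrix, b: Vector, x_ghost: Vector, target: Vector) -> float:
    """Squared distance between the ghost's activations and the dropout target."""
    return 0.5 * sum((h - t) ** 2 for h, t in zip(hidden(W, b, x_ghost), target))


def find_ghost_example(W: Matrix, b: Vector, x: Vector, mask: Vector,
                       steps: int = 200, lr: float = 0.1) -> Tuple[Vector, float]:
    """Search for an input whose deterministic activations mimic dropout on x."""
    target = [m * h for m, h in zip(mask, hidden(W, b, x))]  # masked activations
    ghost = list(x)
    best = ghost_loss(W, b, ghost, target)
    for _ in range(steps):
        acts = hidden(W, b, ghost)
        # The error only flows back through units that are currently active.
        delta = [(h - t) if h > 0.0 else 0.0 for h, t in zip(acts, target)]
        grad = [sum(d * row[j] for d, row in zip(delta, W)) for j in range(len(ghost))]
        candidate = [g - lr * dg for g, dg in zip(ghost, grad)]
        cand_loss = ghost_loss(W, b, candidate, target)
        if cand_loss < best:
            ghost, best = candidate, cand_loss  # accept only improving steps
        else:
            lr *= 0.5                           # otherwise shrink the step size
    return ghost, best
```

The returned input differs from the original `x` whenever the mask drops an active unit, which is the sense in which each sampled mask corresponds to a distinct augmented example.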