arXiv is an e-print service in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance and statistics.


Objective-Reinforced Generative Adversarial Networks (ORGAN) for Sequence Generation Models

Guimaraes, Gabriel Lima and Sanchez-Lengeling, Benjamin and Farias, Pedro Luis Cunha and Aspuru-Guzik, Alán

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


This paper's proposed method, the cleverly named ORGAN, combines techniques from GANs and reinforcement learning to generate candidate molecular sequences that have desirable properties while still remaining plausibly on-distribution. Prior papers I've read on molecular generation have by and large used approaches based in maximum likelihood estimation (MLE) - where you construct some distribution over molecular representations, and maximize the probability of your true data under that distribution. However, MLE methods can be less powerful when it comes to incentivizing your model to precisely conform with structural details of your target data distribution. Generative Adversarial Networks (GANs), on the other hand, use a discriminator loss that directly penalizes your generator for being recognizably different from the true data.

However, GANs have historically been difficult to use on data like the string-based molecular representations used in this paper. That's because strings are made up of discrete characters, which need to be sampled from underlying distributions, and we don't naively have good ways of making sampling from discrete distributions a differentiable process. SeqGAN was proposed to remedy this: instead of using the discriminator loss directly as the generator's loss - which would require backpropagating through the sampling operation - the generator is trained with reinforcement learning, using the discriminator score as a reward function. Each addition of an element to the sequence - or, in our case, each addition of a character to our molecular representation string - represents an action, and full sequences are rewarded based on the extent to which they resemble true sequences.

https://i.imgur.com/dqtcJDU.png

This paper proposes taking that model as a base, but then adding a more actually-reward-oriented reward onto it, incentivizing the model to produce molecules that have certain predicted properties, as determined by an (also very much non-differentiable) external molecular simulator. Concretely, that just means taking a weighted sum of the discriminator score and the property reward, and using that as your RL reward. After all, if you're already using the policy gradient structures of RL to train the underlying generator, you might as well add on some more traditional-looking RL rewards. The central intuition behind using RL in both of these cases is that it provides a way of using training signals that are unknown or - more to the point - non-differentiable functions of model output. In their empirical tests focusing on molecules, the authors target the RL to optimize for one of solubility, synthesizability, and druggability (three well-defined properties within molecular simulator RDKit), as well as for uniqueness, penalizing any molecule that had been generated already.

https://i.imgur.com/WszVd1M.png

For all that this is an interesting mechanic, the empirical results are more equivocal. Compared to Naive RL, which directly optimizes for reward without the discriminator loss, ORGAN (or ORWGAN, the better-performing variant using a Wasserstein GAN) doesn't have notably better rates of how often its generated strings are valid, and (as you would expect) performs comparably or slightly worse when it comes to optimizing the underlying reward. It does exhibit higher diversity than naive RL on two of the three tasks, but it's hard to get an intuition for the scales involved, and how much that scale of diversity increase would impact real results.
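To make the "weighted sum" idea concrete, here is a minimal sketch of how such a blended reward could be computed. The names `organ_reward`, `batch_rewards` and `lam` are hypothetical, both scores are assumed to be scaled to [0, 1], and dividing by the repeat count is just one simple way to penalize duplicates, not necessarily what the authors do.

```python
from collections import Counter


def organ_reward(disc_score, objective_score, lam=0.5):
    """Blend the discriminator score with an external property score.

    lam=1.0 uses only the discriminator (SeqGAN-style reward);
    lam=0.0 uses only the property score (naive RL).
    """
    return lam * disc_score + (1.0 - lam) * objective_score


def batch_rewards(sequences, disc_scores, objective_scores, lam=0.5):
    """Per-sequence rewards with an assumed repetition penalty:
    each reward is divided by how often that string occurs in the batch."""
    counts = Counter(sequences)
    return [
        organ_reward(d, o, lam) / counts[s]
        for s, d, o in zip(sequences, disc_scores, objective_scores)
    ]


# Toy batch of SMILES strings with made-up scores.
rewards = batch_rewards(
    ["CCO", "CCO", "c1ccccc1"],
    disc_scores=[0.8, 0.8, 0.6],
    objective_scores=[0.4, 0.4, 1.0],
    lam=0.5,
)
```

In a full training loop these per-sequence rewards would be fed into a policy-gradient update of the generator; that part is omitted here.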

Neural Message Passing for Quantum Chemistry

Gilmer, Justin and Schoenholz, Samuel S. and Riley, Patrick F. and Vinyals, Oriol and Dahl, George E.

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


In the years before this paper came out in 2017, a number of different graph convolution architectures - which use weight-sharing and order-invariant operations to create representations at nodes in a graph that are contextualized by information in the rest of the graph - had been suggested for learning representations of molecules. The authors of this paper out of Google sought to pull all of these proposed models into a single conceptual framework, for the sake of better comparing and testing the design choices that went into them. All empirical tests were done using the QM9 dataset, where 134,000 molecules have predicted chemical properties attached to them, things like the amount of energy released if bonds are sundered and the energy of electrons at different electron shells.

https://i.imgur.com/Mmp8KO6.png

An interesting note is that these properties weren't measured empirically, but were simulated by a very expensive quantum simulation, because the former wouldn't be feasible for this large of a dataset. However, this is still a moderately interesting test because, even if we already have the capability to computationally predict these features, a neural network would do so much more quickly. And, also, one might aspirationally hope that architectures which learn good representations of molecules for quantum predictions are also useful for tasks with a less available automated prediction mechanism.

The framework assumes the existence of "hidden" feature vectors h at each node (atom) in the graph, as well as features that characterize the edges between nodes (whether that characterization comes through sorting into discrete bond categories or through a continuous representation). The features associated with each atom at the lowest input level of the molecule-summarizing networks trained here include: the element ID, the atomic number, whether it accepts electrons or donates them, whether it's in an aromatic system, and which shells its electrons are in.
https://i.imgur.com/J7s0q2e.png

Given these building blocks, the taxonomy lays out three broad categories of function, each of which different architectures implement in slightly different ways.

1. The Message function, M(). This function is defined with reference to a node w, that the message is coming from, and a node v, that it's being sent to, and is meant to summarize the information coming from w to inform the node representation that will be calculated at v. It takes into account the feature vectors of one or both nodes at the next level down, and sometimes also incorporates feature vectors attached to the edge connecting the two nodes. In a notable example of weight sharing, you'd use the same Message function for every combination of v and w, because you need to be able to process an arbitrary number of pairs, with each v having a different number of neighbors. The simplest example you might imagine here is a simple concatenation of incoming node and edge features; a more typical example from the architectures reviewed is a concatenation followed by a neural network layer. The aggregate message being sent to the receiver node is calculated by summing together the messages from each incoming neighbor (though it seems like other options are possible; I'm a bit confused why the paper presented summing as the only order-invariant option).
2. The Update function, U(). This function governs how to take the aggregated message vector sent to a particular node, and combine that with the prior-layer representation at that node, to come up with a next-layer representation at that node. Similarly, the same Update function weights are shared across all atoms.
3. The Readout function, R(), which takes the final-layer representation of each atom node and aggregates the representations into a final graph-level representation in an order-invariant way.

Rather than following in the footsteps of the paper by describing each proposed model type and how it can be described in this framework, I'll instead try to highlight some of the more interesting ways in which design choices differed across previously proposed architectures.

- Does the message being sent from w to v depend on the feature values at both w and v, or just w? To put the question more colloquially, you might imagine w wanting to contextually send different information based on different values of the feature vector at node v, and this extra degree of expressivity (not present in the earliest 2015 paper) seems like a quite valuable addition (in that all subsequent papers include it).
- Are the edge features static, categorical things, or are they feature vectors that get iteratively updated in the same way that the node vectors do? For most of the architectures reviewed, the former is true, but the authors found that the highest performance in their tests came from networks with continuous edge vectors, rather than just having different weights for different category types of edge.
- Is the Readout function something as simple as a summation of all top-level feature vectors, or is it more complex? Again, the authors found that they got the best performance by using a more complex approach, a Set2Set aggregator, which uses item-to-item attention within the set of final-layer atom representations to construct an aggregated graph-level embedding.

The empirical tests within the paper highlight a few more interestingly relevant design choices that are less directly captured by the framework.
The first is the fact that it's quite beneficial to explicitly include Hydrogen atoms as part of the graph, rather than just "attaching" them to their nearest-by atoms as a count that goes on that atom's feature vector. The second is that it's valuable to start out your edge features with a continuous representation of the spatial distance between atoms, along with an embedding of the bond type. This is particularly worth considering because getting spatial distance data for a molecule requires solving the free-energy problem to determine its spatial conformation, a costly process. We might ideally prefer a network that can work on bond information alone. The authors do find a non-spatial-information network that can perform reasonably well - reaching full accuracy on 5 of 13 targets, compared to 11 with spatial information. However, the difference is notable, which, at least from my perspective, raises the question of whether it'd ever be possible to learn representations that can match the performance of spatially-informed ones without explicitly providing that information.
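The Message / Update / Readout decomposition described above can be sketched in a few lines. This is only an illustration of the control flow: in the architectures the paper reviews all three functions are learned neural networks, whereas the `message`, `update` and `readout` below are fixed toy stand-ins I made up, and the graph encoding (a dict of node features plus a dict of directed edges) is an assumption for the example.

```python
def message(h_v, h_w, e_vw):
    """Toy message from sender w to receiver v: sender features scaled by the
    edge feature. (h_v is unused here; richer variants condition on it too.)"""
    return [e_vw * x for x in h_w]


def update(h_v, m_v):
    """Toy update: average the previous node state with the aggregated message."""
    return [0.5 * (a + b) for a, b in zip(h_v, m_v)]


def readout(states):
    """Order-invariant readout: element-wise sum over all node states."""
    return [sum(column) for column in zip(*states)]


def mpnn_forward(node_feats, edges, steps=2):
    """node_feats: {node: [float, ...]}; edges: {(receiver, sender): float},
    with each undirected bond listed in both directions."""
    h = dict(node_feats)
    dim = len(next(iter(h.values())))
    for _ in range(steps):
        new_h = {}
        for v in h:
            m_v = [0.0] * dim
            for (receiver, w), e_vw in edges.items():
                if receiver == v:
                    msg = message(h[v], h[w], e_vw)
                    m_v = [x + y for x, y in zip(m_v, msg)]  # sum aggregation
            new_h[v] = update(h[v], m_v)
        h = new_h
    return readout(list(h.values()))


# A water-like toy graph: one "O" bonded to two "H" nodes, one-hot features.
feats = {"O": [1.0, 0.0], "H1": [0.0, 1.0], "H2": [0.0, 1.0]}
bonds = {("O", "H1"): 1.0, ("H1", "O"): 1.0, ("O", "H2"): 1.0, ("H2", "O"): 1.0}
graph_embedding = mpnn_forward(feats, bonds, steps=2)
```

Because both the per-node aggregation and the readout are sums, listing the nodes in a different order gives the same graph-level vector, which is the order-invariance property the framework relies on.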

Efficient Convolutional Network Learning using Parametric Log based Dual-Tree Wavelet ScatterNet

Amarjot Singh and Nick Kingsbury

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2017/08/30 (6 years ago)

Abstract: We propose a DTCWT ScatterNet Convolutional Neural Network (DTSCNN) formed by replacing the first few layers of a CNN network with a parametric log based DTCWT ScatterNet. The ScatterNet extracts edge based invariant representations that are used by the later layers of the CNN to learn high-level features. This improves the training of the network as the later layers can learn more complex patterns from the start of learning because the edge representations are already present. The efficient learning of the DTSCNN network is demonstrated on CIFAR-10 and Caltech-101 datasets. The generic nature of the ScatterNet front-end is shown by an equivalent performance to pre-trained CNN front-ends. A comparison with the state-of-the-art on CIFAR-10 and Caltech-101 datasets is also presented.


ScatterNets incorporate geometric knowledge of images to produce discriminative and invariant (translation and rotation) features, i.e. edge information. The first layers of a CNN end up producing much the same thing. So why not replace those first layer(s) with an equivalent, fixed structure and let the optimizer find the best weights for the CNN with its leading edge removed?

The main motivations for replacing the first convolutional, ReLU and pooling layers of the CNN with a two-layer parametric log-based Dual-Tree Complex Wavelet Transform (DTCWT) ScatterNet, an idea covered by a few papers, were:

- Despite the success of CNNs, the design and optimal configuration of these networks is not well understood, which makes them difficult to develop.
- Training improves because the later layers can learn more complex patterns from the start of learning, since the edge representations are already present.
- The network converges faster as it has fewer filter weights to learn.

My takeaway: a slight reduction in the amount of data necessary for training!

On CIFAR-10 and Caltech-101, with 14 self-made CNNs of increasing depth plus VGG, NIN and WideResNet:

- When doing transfer learning (ImageNet): DTSCNN outperformed (by a "useful margin") all the CNN counterparts when finetuning with only 1000 examples (balanced over classes). On larger datasets the gap decreases, ending on par. However, when freezing the first layers of VGG and NIN, as in DTSCNN, the NIN results are on par with DTSCNN, while VGG outperforms it!
- DTSCNN learns at a faster rate but reaches the same target with only a minor speedup (a few minutes).
- A complexity analysis in terms of weights and operations is missing.
- Datasets: CIFAR-10 and Caltech-101 are a good starting point (a further step with a substantial dataset like COCO would be a plus). For other modalities/domains, please try and let me know.
- Great work, but an ablation study is missing, such as comparing full training of WResNet+DTCWT vs. WResNet.
- 14 citations so far (Cambridge): probably low value for money at the moment.

https://i.imgur.com/GrzSviU.png

MoleculeNet: A Benchmark for Molecular Machine Learning

Wu, Zhenqin and Ramsundar, Bharath and Feinberg, Evan N. and Gomes, Joseph and Geniesse, Caleb and Pappu, Aneesh S. and Leswing, Karl and Pande, Vijay S.

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


This is a paper released by the creators of the DeepChem library/framework, explaining the efforts they've put into facilitating straightforward and reproducible testing of new methods. They advocate for consistency between tests on three main axes.

1. On the most basic level, that methods evaluate on the same datasets
2. That they use canonical train/test splits
3. That they use canonical metrics.

To that end, they've integrated a framework they call "MoleculeNet" into DeepChem, containing standardized interfaces to datasets, metrics, and test sets.

**Datasets**

MoleculeNet contains 17 different datasets, where "dataset" here just means a collection of data labeled for a certain task or set of tasks. The tasks fall into one of four groups:

- quantum mechanical prediction (atomization energy, spectra)
- prediction of properties of physical chemistry (solubility, lipophilicity)
- prediction of biophysical interactions like binding affinity
- prediction of human-level physiological properties (toxicity, side effects, whether it passes the blood brain barrier)

An interesting thing to note here is that only some datasets contain 3D orientations of molecules, because spatial orientations are properties of *a given conformation* of a molecule, and while some output measures (like binding geometry) depend on 3D arrangement, others (like solubility) don't.

**Metrics**

The metrics chosen were pretty straightforward - Root Mean Squared Error or Mean Absolute Error for continuous prediction tasks, and ROC-AUC or PRC-AUC for classification ones. The only notable nuance was that the paper argued for PRC-AUC as the standard metric for datasets with a low number of positives, since that metric is the strictest on false positives.

**Test/Train Split**

Most of these were fairly normal - random split and time-based split - but I found the idea of a scaffold split (where you cluster molecules by similarity, and assign each cluster to either train or test) interesting.
The idea here is that if molecules are similar enough to one another, seeing one of a pair during training might be comparable to seeing an actual shared example between training and test, and have the same propensity for overconfident results.

**Models**

DeepChem has put together implementations of a number of standard machine learning methods (SVM, Random Forest, XGBoost, Logistic Regression) on molecular features, as well as a number of molecule-specific graph-structured methods. At a high level, these are:

https://i.imgur.com/x4yutlp.png

- Graph Convolutions, which update atom representations by combining transformations of the features of bonded neighbor atoms
- DAGs, which create an "atom-centric" graph for each atom in the molecule and "pull" information inwards from farther away nodes (for the record, I don't fully follow how this one works, since I haven't read the underlying paper)
- Weave Model, which maintains both atom representations and pair representations between all pairs of atoms, not just ones bonded to one another, and updates each in a cross-cutting way: updating an atom representation from all of its pairs (as well as itself), and then updating a pair representation from the atoms in its pairing (as well as itself). This has the benefit of making information from far-away atoms available immediately, rather than having to propagate through a graph, but is also more computationally taxing
- Message Passing Neural Network, which operates like Graph Convolutions except that the feature transform used to pull in information from neighboring atoms changes depending on the type of the bond between atoms
- Deep Tensor Neural Network, which, instead of using bonds, represents atoms in 3D space and pulls in information based on other atoms nearby in spatial distance

**Results**

As part of creating its benchmark, MoleculeNet also tested its implementations of its models on all its datasets.
It's interesting the extent to which the results form a narrative, in terms of which tasks benefit most from flexible structure-based methods (like graph approaches) vs hand-engineered features.

https://i.imgur.com/dCAdJac.png

Predictions of quantum mechanical properties and properties of physical chemistry do consistently better with graph-based methods, potentially suggesting that the features we've thought to engineer aren't in line with useful features for those tasks. By contrast, on biophysical tasks, hand-engineered features combined with traditional machine learning mostly come out on top, a fact I found a bit surprising, given the extent to which I'd read about deep learning methods claiming strong results on prediction of things like binding affinity. This was a useful pointer to something I should do more work to get clear on. And, when it came to physiological properties like toxicity and side effects, results are pretty mixed between graph-based and traditional methods.
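The scaffold split mentioned under Test/Train Split boils down to a group-wise split: whole clusters go to one side or the other. Below is a minimal sketch under the assumption that a scaffold key per molecule has already been computed elsewhere; `group_split` and the toy keys are hypothetical names, and the "largest groups go to train first" ordering is one common convention rather than something the summary specifies.

```python
from collections import defaultdict


def group_split(items, group_keys, test_fraction=0.2):
    """Split items so that no group (e.g. scaffold) straddles train and test.

    Groups are visited from largest to smallest; a group goes to train if it
    still fits in the train budget, otherwise the whole group goes to test.
    """
    groups = defaultdict(list)
    for item, key in zip(items, group_keys):
        groups[key].append(item)

    train_budget = len(items) - round(test_fraction * len(items))
    train, test = [], []
    # sorted() is stable, so equally sized groups keep first-seen order.
    for members in sorted(groups.values(), key=len, reverse=True):
        if len(train) + len(members) <= train_budget:
            train.extend(members)
        else:
            test.extend(members)
    return train, test


molecules = ["m1", "m2", "m3", "m4", "m5"]
scaffolds = ["benzene", "benzene", "benzene", "pyridine", "furan"]
train_set, test_set = group_split(molecules, scaffolds, test_fraction=0.2)
```

Because rare scaffolds end up in the test set, this kind of split tends to be harder than a random one, which is the point: it asks the model to generalize to structures it has not effectively seen already.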

The Space of Transferable Adversarial Examples

Tramèr, Florian and Papernot, Nicolas and Goodfellow, Ian J. and Boneh, Dan and McDaniel, Patrick D.

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Tramèr et al. study adversarial subspaces, subspaces of the input space that are spanned by multiple, orthogonal adversarial examples. These are found by iteratively searching for orthogonal adversarial examples relative to a specific test example. This can, for example, be done using classical second- or first-order optimization methods for finding adversarial examples, with the additional constraint that each new example be orthogonal to those already found. However, the authors also consider different attack strategies that work on discrete input features. In practice, on MNIST, this allows finding, on average, 44 orthogonal directions per test example. This finding indicates that adversarial examples indeed span large adversarial subspaces. Additionally, adversarial examples from the subspaces seem to transfer reasonably well to other models. The remainder of the paper links this ease of transferability to a similarity in decision boundaries learnt by different models from the same hypothesis set. Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

Sharp Minima Can Generalize For Deep Nets

Dinh, Laurent and Pascanu, Razvan and Bengio, Samy and Bengio, Yoshua

International Conference on Machine Learning - 2017 via Local Bibsonomy

Keywords: dblp


Dinh et al. show that it is unclear whether flat minima necessarily generalize better than sharp ones. In particular, they study several notions of flatness, both based on the local curvature and based on the notion of “low change in error”. The authors show that the parameterization of the network has a significant impact on the flatness; this means that parameterizations leading to the same prediction function (i.e., being indistinguishable based on their test performance) might have largely varying flatness around the obtained minima, as illustrated in Figure 1. In conclusion, while networks that generalize well usually correspond to flat minima, it is not necessarily true that flat minima generalize better than sharp ones.

https://i.imgur.com/gHfolEV.jpg

Figure 1: Illustration of the influence of parameterization on the flatness of the obtained minima.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
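A tiny numerical illustration of the reparameterization point, as a sketch: for a two-weight ReLU "network", scaling the first weight by some factor and the second by its inverse leaves the prediction function unchanged, yet changes the curvature of the loss. The one-dimensional model, the scale factor `alpha` and the finite-difference curvature estimate are all assumptions chosen for the example, not taken from the summary.

```python
def relu(z):
    return max(0.0, z)


def predict(w1, w2, x):
    """One hidden ReLU unit: f(x) = w2 * relu(w1 * x)."""
    return w2 * relu(w1 * x)


def loss(w1, w2, x=1.0, y=1.0):
    """Squared error on a single training point (x, y)."""
    return (predict(w1, w2, x) - y) ** 2


def curvature_w2(w1, w2, eps=1e-3):
    """Central finite-difference estimate of the second derivative of the loss in w2."""
    return (loss(w1, w2 + eps) - 2.0 * loss(w1, w2) + loss(w1, w2 - eps)) / eps ** 2


alpha = 10.0
w1, w2 = 1.0, 1.0                          # a minimum: predict(w1, w2, 1.0) equals y
w1_scaled, w2_scaled = alpha * w1, w2 / alpha  # same function, different parameters

flat = curvature_w2(w1, w2)                # analytically 2 * (w1 * x)^2
sharp = curvature_w2(w1_scaled, w2_scaled)  # grows by a factor of alpha^2
```

Both parameter settings compute the same function and hence have identical test error, but a curvature-based flatness measure would rank them very differently.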

Value Prediction Network

Oh, Junhyuk and Singh, Satinder and Lee, Honglak

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Recently, DeepMind released a new paper showing strong performance on board game tasks using a mechanism similar to the Value Prediction Network one in this paper, which inspired me to go back and get a grounding in this earlier work. A goal of this paper is to design a model-based RL approach that can scale to complex environment spaces, but can still be used to run simulations and do explicit planning. Traditional model-based RL has worked by learning a dynamics model of the environment - predicting the next observation state given the current one and an action - and then using that model of the world to learn values and plan with. In addition to the advantages of explicit planning, a hope is that model-based systems generalize better to new environments, because they predict one-step changes in local dynamics in a way that can be more easily separated from long-term dynamics or reward patterns. However, a downside of MBRL is that it can be hard to train, especially when your observation space is high-dimensional, and learning a straight model of your environment will lead to you learning details that aren't actually important for planning or creating policies.

The synthesis proposed by this paper is the Value Prediction Network. Rather than predicting observed state at the next step, it learns a transition model in latent space, and then learns to predict next-step reward and future value from that latent space vector. Because it learns to encode latent-space state from observations, and also learns a transition model from one latent state to another, the model can be used for planning, by simulating multiple transitions between latent states. However, unlike a normal dynamics model, whose training signal comes from a loss against observational prediction, the signal for training both latent → reward/value/discount predictions, and latent → latent transitions comes from using this pipeline to predict reward values.
This means that if an aspect of the environment isn't useful for predicting reward, it won't generally be encoded into latent state, meaning you don't waste model capacity predicting irrelevant detail.

https://i.imgur.com/4bJylms.png

Once this model exists, it can be used for generating a policy through a tree-search planning approach: simulating future trajectories and aggregating the predicted reward along those trajectories, and then taking the highest-value one. The authors find that their model is able to do better than both model-free and model-based methods on the tasks they tested on. In particular, they find that it has many of the benefits of a model that predicts full observations, but that the Value Prediction Network learns more quickly, and is more robust to stochastic environments where there's an inherent ceiling on how well a next-step observation prediction can work.

My main question coming into this paper was: how is this different from simply a value estimator like those used in DQN or A2C? My impression is that the difference comes from this model's ability to do explicit state simulation in latent space, and then predict a value off of the *latent* state, whereas a value network predicts value from observational state.
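Here is a minimal sketch of what planning by latent rollouts can look like. In the real model the encoder, transition, reward and value functions are learned networks trained from reward signal; the hand-written `encode`, `transition`, `reward` and `value` below are hypothetical stand-ins on a one-dimensional toy world, and the plain max-backup is a simplification rather than the paper's exact aggregation rule.

```python
GAMMA = 0.9
ACTIONS = (-1, +1)  # step left / step right


def encode(observation):
    """Stand-in encoder: the latent state is just the agent's position."""
    return float(observation["position"])


def transition(latent, action):
    """Stand-in latent transition model."""
    return latent + action


def reward(latent, action):
    """Stand-in reward predictor: +1 for stepping onto position 3."""
    return 1.0 if transition(latent, action) == 3.0 else 0.0


def value(latent):
    """Stand-in value predictor for leaf states (a crude constant)."""
    return 0.0


def q_plan(latent, action, depth):
    """Depth-limited lookahead performed entirely in latent space."""
    next_latent = transition(latent, action)
    r = reward(latent, action)
    if depth == 1:
        return r + GAMMA * value(next_latent)
    best_next = max(q_plan(next_latent, a, depth - 1) for a in ACTIONS)
    return r + GAMMA * best_next


def plan(observation, depth=3):
    """Encode once, then pick the action with the best simulated return."""
    latent = encode(observation)
    return max(ACTIONS, key=lambda a: q_plan(latent, a, depth))


best_action = plan({"position": 1}, depth=2)
```

Note that no observation is ever predicted: after the single call to `encode`, everything happens on latent states, which is the contrast with an observation-predicting dynamics model drawn above.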

AI Safety Gridworlds

Jan Leike and Miljan Martic and Victoria Krakovna and Pedro A. Ortega and Tom Everitt and Andrew Lefrancq and Laurent Orseau and Shane Legg

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, cs.AI

First published: 2017/11/27 (6 years ago)

Abstract: We present a suite of reinforcement learning environments illustrating various safety properties of intelligent agents. These problems include safe interruptibility, avoiding side effects, absent supervisor, reward gaming, safe exploration, as well as robustness to self-modification, distributional shift, and adversaries. To measure compliance with the intended safe behavior, we equip each environment with a performance function that is hidden from the agent. This allows us to categorize AI safety problems into robustness and specification problems, depending on whether the performance function corresponds to the observed reward function. We evaluate A2C and Rainbow, two recent deep reinforcement learning agents, on our environments and show that they are not able to solve them satisfactorily.


The paper proposes a standardized benchmark for a number of safety-related problems, and provides an implementation that can be used by other researchers. The problems fall in two categories: specification and robustness. Specification refers to cases where it is difficult to specify a reward function that encodes our intentions. Robustness means that an agent's actions should be robust when facing various complexities of a real-world environment. Here is a list of problems:

1. Specification:
    1. Safe interruptibility: agents should neither seek nor avoid interruption.
    2. Avoiding side effects: agents should minimize effects unrelated to their main objective.
    3. Absent supervisor: agents should not behave differently depending on the presence of a supervisor.
    4. Reward gaming: agents should not try to exploit errors in the reward function.
2. Robustness:
    1. Self-modification: agents should behave well when the environment allows self-modification.
    2. Robustness to distributional shift: agents should behave robustly when test differs from train.
    3. Robustness to adversaries: agents should detect and adapt to adversarial intentions in the environment.
    4. Safe exploration: agents should behave safely during learning as well.

It is worth noting that problems 1.2, 1.4, 2.2, and 2.4 were already described in "Concrete Problems in AI Safety".

It is suggested that each of these problems be tackled in a "gridworld" environment: a 2D environment where the agent lives on a grid, and the only actions it has available are up/down/left/right movements. The benchmark consists of 10 environments, each corresponding to one of the 8 problems mentioned above. Each of the environments is an extremely simple instance of the problem, but nevertheless they are of interest as current SotA algorithms usually don't solve the posed task.
Specifically, the authors trained A2C and Rainbow with DQN update on each of the environments and showed that both algorithms fail on all of the specification problems, except for Rainbow on 1.1. This is expected, as neither of those algorithms is designed for cases where the reward function is misspecified. Both algorithms failed on 2.2--2.4, except for A2C on 2.3. On 2.1, the authors swapped A2C for Rainbow with Sarsa update and showed that Rainbow DQN failed while Rainbow Sarsa performed well.

Overall, this is a good groundwork paper with only a few questionable design decisions, such as the design of the actual reward in 1.2. It is unlikely to have impact similar to MNIST or ImageNet, but it should stimulate safety-related research.
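To illustrate the split between the reward the agent observes and the hidden performance function mentioned in the abstract, here is a toy side-effects gridworld. It is a sketch only: the `ToyGridworld` class, the 3x3 layout, the "vase" cell and all the numeric rewards and penalties are made up for this example and are not the benchmark's actual environments or API.

```python
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


class ToyGridworld:
    """3x3 grid: start top-left, goal top-right, a fragile 'vase' in between."""

    def __init__(self):
        self.size = 3
        self.pos = (0, 0)
        self.goal = (0, 2)
        self.vase = (0, 1)
        self.hidden_performance = 0.0  # tracked by the environment, never shown to the agent

    def step(self, action):
        d_row, d_col = MOVES[action]
        row = min(max(self.pos[0] + d_row, 0), self.size - 1)
        col = min(max(self.pos[1] + d_col, 0), self.size - 1)
        self.pos = (row, col)
        done = self.pos == self.goal
        reward = -1.0 + (50.0 if done else 0.0)          # what the agent observes
        penalty = 10.0 if self.pos == self.vase else 0.0  # side effect, unobserved
        self.hidden_performance += reward - penalty
        return self.pos, reward, done


def run(actions):
    """Roll out a fixed action sequence; return (observed return, hidden performance)."""
    env = ToyGridworld()
    total_reward = 0.0
    for action in actions:
        _, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward, env.hidden_performance


shortcut = run(["right", "right"])               # walks straight over the vase
detour = run(["down", "right", "right", "up"])   # goes around it
```

The shortcut earns more observed reward than the detour but scores worse on the hidden performance function, which is the kind of gap a reward-maximizing agent has no signal to close.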

Towards Poisoning of Deep Learning Algorithms with Back-gradient Optimization

Luis Muñoz-González and Battista Biggio and Ambra Demontis and Andrea Paudice and Vasin Wongrassamee and Emil C. Lupu and Fabio Roli

Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security - AISec '17 - 2017 via Local CrossRef

Keywords:


Muñoz-González et al. propose a multi-class data poisoning attack against deep neural networks based on back-gradient optimization. They consider the common poisoning formulation, stated as follows:

$\max_{D_c} \min_w \mathcal{L}(D_c \cup D_{tr}, w)$

where $D_c$ denotes a set of poisoned training samples and $D_{tr}$ the corresponding clean dataset. Here, the loss $\mathcal{L}$ used for training is minimized as the inner optimization problem. As a result, whenever learning itself has no closed-form solution, e.g., for deep neural networks, the problem is computationally infeasible. To resolve this, the authors propose using back-gradient optimization: the gradient with respect to the outer optimization problem can then be computed while running only a limited number of iterations on the inner problem; see the paper for details. In experiments on spam/malware detection and digit classification, the approach is shown to increase the test error of the trained model with only a few poisoned training examples.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).


UPSET and ANGRI : Breaking High Performance Image Classifiers

Sarkar, Sayantan and Bansal, Ankan and Mahbub, Upal and Chellappa, Rama

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Sarkar et al. propose two “learned” adversarial example attacks, UPSET and ANGRI. The former, UPSET, learns to predict universal, targeted adversarial examples. The latter, ANGRI, learns to predict (non-universal) targeted adversarial attacks. For UPSET, a network takes the target label as input and learns to predict a perturbation which, when added to the original image, results in misclassification; for ANGRI, a network takes both the target label and the original image as input to predict a perturbation. These networks are then trained using a misclassification loss while also minimizing the norm of the perturbation. To this end, the target classifier needs to be differentiable, i.e., UPSET and ANGRI require white-box access.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

Improving Network Robustness against Adversarial Attacks with Compact Convolution

Ranjan, Rajeev and Sankaranarayanan, Swami and Castillo, Carlos D. and Chellappa, Rama

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


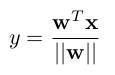

Ranjan et al. propose to constrain deep features to lie on hyperspheres in order to improve robustness against adversarial examples. For the last fully-connected layer, this is achieved by the L2-softmax, which forces the features to lie on the hypersphere. For intermediate convolutional or fully-connected layers, the same effect is achieved analogously, i.e., by normalizing the inputs, scaling them, and then applying the convolution/weight multiplication. In experiments, the authors argue that this improves robustness against simple attacks such as FGSM and DeepFool.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
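The normalize-then-scale step can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the function names, the scale parameter `alpha`, and the bias-free linear layer are all hypothetical simplifications.

```python
import math


def normalize_and_scale(features, alpha):
    """Project a (non-zero) feature vector onto the hypersphere of radius alpha."""
    norm = math.sqrt(sum(f * f for f in features))
    return [alpha * f / norm for f in features]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def l2_softmax(features, class_weights, alpha=10.0):
    """Softmax over a linear layer applied to L2-normalized, rescaled features.

    class_weights holds one weight row per class (no bias, for brevity).
    """
    z = normalize_and_scale(features, alpha)
    logits = [sum(w * x for w, x in zip(row, z)) for row in class_weights]
    return softmax(logits)
```

One consequence visible in this sketch is that rescaling the input features leaves the predicted distribution unchanged, since only their direction survives the normalization.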

Regularizing Neural Networks by Penalizing Confident Output Distributions

Gabriel Pereyra and George Tucker and Jan Chorowski and Łukasz Kaiser and Geoffrey Hinton

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.NE, cs.LG

First published: 2017/01/23 (7 years ago)

Abstract: We systematically explore regularizing neural networks by penalizing low entropy output distributions. We show that penalizing low entropy output distributions, which has been shown to improve exploration in reinforcement learning, acts as a strong regularizer in supervised learning. Furthermore, we connect a maximum entropy based confidence penalty to label smoothing through the direction of the KL divergence. We exhaustively evaluate the proposed confidence penalty and label smoothing on 6 common benchmarks: image classification (MNIST and Cifar-10), language modeling (Penn Treebank), machine translation (WMT'14 English-to-German), and speech recognition (TIMIT and WSJ). We find that both label smoothing and the confidence penalty improve state-of-the-art models across benchmarks without modifying existing hyperparameters, suggesting the wide applicability of these regularizers.


Pereyra et al. propose an entropy regularizer for penalizing over-confident predictions of deep neural networks. Specifically, given the predicted distribution $p_\theta(y_i|x)$ for labels $y_i$ and network parameters $\theta$, a regularizer $-\beta \max(0, \Gamma - H(p_\theta(y|x)))$ is added to the learning objective. Here, $H$ denotes the entropy, and $\beta$ and $\Gamma$ are hyper-parameters that weight and limit the regularizer's influence. In experiments, this regularizer showed slightly improved performance on MNIST and Cifar-10.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
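As a small worked example, the two quantities inside the regularizer can be computed directly. The sketch below only evaluates the entropy $H$ and the thresholded quantity $\max(0, \Gamma - H)$ that $\beta$ weights; how that term enters the full training objective follows the paper. The function names are hypothetical.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a discrete distribution given as a list."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def entropy_shortfall(p, gamma):
    """max(0, Gamma - H(p)): non-zero only when the entropy drops below Gamma."""
    return max(0.0, gamma - entropy(p))
```

With a threshold of 0.5, a uniform two-class prediction (entropy about 0.693) yields a shortfall of zero, while a one-hot prediction (entropy 0) yields the full 0.5, so only confident predictions are affected.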

Enhanced Attacks on Defensively Distilled Deep Neural Networks

Liu, Yujia and Zhang, Weiming and Li, Shaohua and Yu, Nenghai

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Liu et al. propose a white-box attack against defensive distillation. In particular, the proposed attack combines the objective of the Carlini+Wagner attack [1] with a slightly different reparameterization to enforce an $L_\infty$-constraint on the perturbation. In experiments, defensive distillation is shown not to be robust.

[1] Nicholas Carlini, David A. Wagner: Towards Evaluating the Robustness of Neural Networks. IEEE Symposium on Security and Privacy 2017: 39-57

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)

Been Kim and Martin Wattenberg and Justin Gilmer and Carrie Cai and James Wexler and Fernanda Viegas and Rory Sayres

arXiv e-Print archive - 2017 via Local arXiv

Keywords: stat.ML

First published: 2017/11/30 (6 years ago)

Abstract: The interpretation of deep learning models is a challenge due to their size, complexity, and often opaque internal state. In addition, many systems, such as image classifiers, operate on low-level features rather than high-level concepts. To address these challenges, we introduce Concept Activation Vectors (CAVs), which provide an interpretation of a neural net's internal state in terms of human-friendly concepts. The key idea is to view the high-dimensional internal state of a neural net as an aid, not an obstacle. We show how to use CAVs as part of a technique, Testing with CAVs (TCAV), that uses directional derivatives to quantify the degree to which a user-defined concept is important to a classification result--for example, how sensitive a prediction of "zebra" is to the presence of stripes. Using the domain of image classification as a testing ground, we describe how CAVs may be used to explore hypotheses and generate insights for a standard image classification network as well as a medical application.


Kim et al. propose Concept Activation Vectors (CAVs) that represent the direction of features corresponding to specific human-interpretable concepts. In particular, given a network for a classification task, a concept is defined by a set of images showing that concept. A linear classifier is then trained, on the features of a chosen layer, to distinguish images with the concept from random images without it. The normal of the resulting linear classification boundary is the learned Concept Activation Vector (CAV). Taking the directional derivative along this direction for a given input makes it possible to quantify how well the input aligns with the chosen concept. This way, images can be ranked and the model's sensitivity to particular concepts can be quantified. The idea is also illustrated in Figure 1.

https://i.imgur.com/KOqPeag.png

Figure 1: Process of constructing Concept Activation Vectors (CAVs).

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
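The construction can be sketched end to end on toy data. The following is a minimal illustration, not the authors' implementation: the 2D "activations", the logistic-regression trainer, the finite-difference derivative, and the `toy_logit` function standing in for the rest of the network are all assumptions made for the example.

```python
import math


def train_linear_classifier(pos, neg, lr=0.1, steps=200):
    """Logistic regression by batch gradient descent; returns (weights, bias)."""
    data = [(x, 1.0) for x in pos] + [(x, 0.0) for x in neg]
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in data:
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            p = 1.0 / (1.0 + math.exp(-score))
            for i in range(dim):
                grad_w[i] += (p - y) * x[i] / len(data)
            grad_b += (p - y) / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def concept_activation_vector(concept_acts, random_acts):
    """Unit normal of the boundary separating concept from random activations."""
    w, _ = train_linear_classifier(concept_acts, random_acts)
    norm = math.sqrt(sum(wi * wi for wi in w))
    return [wi / norm for wi in w]


def directional_derivative(f, activation, direction, eps=1e-5):
    """Finite-difference estimate of how f changes along `direction`."""
    shifted = [a + eps * d for a, d in zip(activation, direction)]
    return (f(shifted) - f(activation)) / eps


# Hypothetical layer activations: the concept mostly varies along the first axis.
CONCEPT_ACTS = [[2.0, 0.1], [1.5, -0.2], [2.5, 0.3]]
RANDOM_ACTS = [[-2.0, 0.2], [-1.5, -0.1], [-2.5, 0.0]]


def toy_logit(a):
    """Made-up stand-in for the class logit as a function of the layer activation."""
    return 3.0 * a[0] + a[1] ** 2
```

A positive directional derivative of `toy_logit` along the CAV would be read as the class being positively sensitive to the concept at that input.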

On the Robustness of Convolutional Neural Networks to Internal Architecture and Weight Perturbations

Cheney, Nicholas and Schrimpf, Martin and Kreiman, Gabriel

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Cheney et al. study the robustness of deep neural networks, especially AlexNet, with regard to randomly dropping or perturbing weights. In particular, the authors consider three types of perturbations: synapse knockouts set random weights to zero, node knockouts set all weights corresponding to a set of neurons to zero, and weight perturbations add random Gaussian noise to the weights of a specific layer. These perturbations are studied on AlexNet, considering the top-5 accuracy on ImageNet; perturbations are considered per layer. For example, Figure 1 (left) shows the influence on accuracy when knocking out synapses. As can be seen, the lower layers, especially the first convolutional layer, are impacted significantly by these perturbations. Similar observations are made for random perturbations of weights, see Figure 1 (right), although the impact is less significant. High-level features in particular, i.e., the corresponding layers, seem to be robust to these kinds of perturbations. The authors also provide evidence that these results extend to the top-1 accuracy, as well as to other architectures. For VGG, however, the impact is significantly less pronounced, which may also be due to the employed dropout layers.

https://i.imgur.com/78T6Gg2.png

Figure 1: Left: Influence of setting weights in the corresponding layers to zero. Right: Influence of randomly perturbing weights of specific layers. Experiments are on ImageNet using AlexNet.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
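The three perturbation types are simple to state in code. Below is a minimal sketch on a weight matrix stored as a list of rows, with one row per output neuron; the function names and this layout are assumptions for illustration, not the authors' experimental code.

```python
import random


def synapse_knockout(weights, fraction, rng):
    """Set each individual weight to zero with probability `fraction`."""
    return [[0.0 if rng.random() < fraction else w for w in row] for row in weights]


def node_knockout(weights, nodes):
    """Zero every weight belonging to the given output neurons (row indices)."""
    return [[0.0] * len(row) if i in nodes else list(row)
            for i, row in enumerate(weights)]


def weight_perturbation(weights, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to every weight."""
    return [[w + rng.gauss(0.0, sigma) for w in row] for row in weights]


# Example: perturb one hypothetical 2x2 layer with a fixed seed for repeatability.
layer = [[1.0, 2.0], [3.0, 4.0]]
rng = random.Random(0)
knocked_out = synapse_knockout(layer, fraction=0.5, rng=rng)
noisy = weight_perturbation(layer, sigma=0.1, rng=rng)
```

Applying one of these to a single layer at a time and re-measuring accuracy gives the per-layer curves described above.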

AE-GAN: adversarial eliminating with GAN

Shen, Shiwei and Jin, Guoqing and Gao, Ke and Zhang, Yongdong

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Shen et al. introduce APE-GAN, a generative adversarial network (GAN) trained to remove adversarial noise from adversarial examples. Specifically, as illustrated in Figure 1, a GAN is trained to distinguish clean/real images from adversarial images. The generator is conditioned on the input image and can be seen as an auto-encoder. Then, during testing, the generator is applied to remove the adversarial noise.

https://i.imgur.com/mgAbzCT.png

Figure 1: The proposed adversarial perturbation eliminating GAN (APE-GAN), see the paper for details.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

Towards A Rigorous Science of Interpretable Machine Learning

Finale Doshi-Velez and Been Kim

arXiv e-Print archive - 2017 via Local arXiv

Keywords: stat.ML, cs.AI, cs.LG

First published: 2017/02/28 (7 years ago)

Abstract: As machine learning systems become ubiquitous, there has been a surge of interest in interpretable machine learning: systems that provide explanation for their outputs. These explanations are often used to qualitatively assess other criteria such as safety or non-discrimination. However, despite the interest in interpretability, there is very little consensus on what interpretable machine learning is and how it should be measured. In this position paper, we first define interpretability and describe when interpretability is needed (and when it is not). Next, we suggest a taxonomy for rigorous evaluation and expose open questions towards a more rigorous science of interpretable machine learning.


For a machine learning model to be trusted and used, one needs to be confident in its ability to deal with all possible scenarios. Designing unit test cases for complex, global problems toward that end can be costly, bordering on impossible.

**Idea**: We need a basic guideline that researchers and developers can adhere to when defining problems and outlining solutions, so that model interpretability can be defined accurately in terms of the problem statement.

**Solution**: This paper outlines the basics of machine learning interpretability, what it means for different users, and how to classify these notions into understandable categories that can be evaluated.

The paper highlights that the need for interpretability arises from *incompleteness*, either of the problem statement or of the problem domain knowledge. It provides three main categories for evaluating a model and its interpretations:

- *Application Grounded Evaluation*: These evaluations are more costly and involve real humans evaluating real tasks that a model would take up. Domain knowledge is necessary for the humans evaluating the real task handled by the model.
- *Human Grounded Evaluation*: These evaluations are simpler than application-grounded ones, as they simplify the complex task and have humans evaluate the simplified task. Domain knowledge is not necessary in such an evaluation.
- *Functionally Grounded Evaluation*: No humans are involved in this version of evaluation; here, previously evaluated models are perfected or tweaked to optimize certain functionality. Explanation quality is measured by a formal definition of interpretability.

The paper also outlines certain issues with these three evaluation processes: some questions need answering before we can pick an evaluation method and metric.

- To highlight the factors of interpretability, we are provided with the data-driven approach: we analyze each task and the various methods used to fulfill it, and see which of these methods and tasks are most significant to the model.
- We are introduced to the term latent dimensions of interpretability, i.e., dimensions that are inferred rather than observed. These are divided into task-related and method-related latent dimensions, each a long list of factors that are task specific or method specific.

Thus this paper provides a basic taxonomy for how we should evaluate our model, and how these evaluations differ from problem to problem. The ideal scenario outlined is that researchers provide the relevant information to evaluate their proposition correctly (correctly in terms of the domain and the problem scope).

Learning Robust Rewards with Adversarial Inverse Reinforcement Learning

Fu, Justin and Luo, Katie and Levine, Sergey

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


The fundamental unit of Reinforcement Learning is the reward function, and a core assumption of the area is that actions induce rewards, with some actions yielding higher reward than others. But reward functions are just artificial objects we design to induce certain behaviors; the universe doesn’t hand out “true” rewards we can build on. Inverse Reinforcement Learning as a field is rooted in the difficulty of designing reward functions: instead of requiring a human to hard-code a reward function, it aspires to infer rewards from observed human behavior. The rough idea is that if we imagine a human is (even if they don’t know it) acting so as to optimize some set of rewards, we might be able to infer those underlying incentives from their actions and, once we’ve extracted a reward function, use it to train new agents. This is a mathematically tricky problem, for the basic reason that a human’s actions are often consistent with a wide range of possible underlying “policy” parameters, and a given human policy could be optimal for a wide range of underlying reward functions.

This paper proposes an adversarial framing of the problem, where you learn a reward function by trying to make the reward higher for the human demonstrations you observe than for the actions the agent itself is taking. This has the effect of learning an agent that can imitate human actions. However, the paper specifically designs its model structure to go beyond just imitation. The problem with learning a purely imitative policy is that it’s hard for the model to separate the actions the human takes because they are intrinsically high reward (like, perhaps, eating candy) from actions that are only valuable in a particular environment (perhaps opening a drawer if you’re in a room where that’s where the candy is kept). If you didn’t realize that the real reward was contained in the candy, you might keep opening drawers even in a room where the candy is lying out on the table.

In mathematical terms, separating intrinsic from instrumental (also known as "shaped") rewards is a matter of separating the reward associated with a given state from the value of taking a given action at that state, because the value of your action is only borne out under assumptions about how states transition into each other, which is a function of the specific state-to-state dynamics of the environment you’re in. The authors do this by defining a g(s) function and an h(s) function. They then define the overall reward of an action as (g(s) + h(s’)) - h(s), where s’ is the new state you end up in if you take that action.

https://i.imgur.com/3ENPFVk.png

This follows the natural form of a Bellman update, where your future value at time T should equal your future value at time T+1 plus the reward you achieve at time T.

https://i.imgur.com/Sd9qHCf.png

By adopting this structure, and learning a separate neural network for the h(s) function that represents the value from here to the end, the authors ensure that g(s) is a purer representation of the reward at a state, regardless of what we expect to happen in the future. They can then use this learned reward to bootstrap good behavior in new environments, even in contexts where a learned value function would be invalid because of its assumptions about instrumental value. They compare their method to the baseline of GAIL, a purely imitation-learning approach, and show that theirs transfers better to environments with similar states but different state-to-state dynamics.
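One way to see why the h terms act only as shaping is that they telescope along a trajectory. The sketch below checks this on a toy example using the undiscounted expression from the summary; `toy_g`, `toy_h`, and the integer states are hypothetical stand-ins for the learned networks and the real state space.

```python
def shaped_reward(g, h, s, s_next):
    """Overall reward of a transition: g(s) + h(s') - h(s)."""
    return g(s) + h(s_next) - h(s)


def trajectory_return(g, h, states):
    """Sum of shaped rewards over consecutive state pairs of a trajectory."""
    return sum(shaped_reward(g, h, s, s2) for s, s2 in zip(states, states[1:]))


def toy_g(s):
    """Made-up 'intrinsic' reward: only state 3 is rewarding."""
    return 1.0 if s == 3 else 0.0


def toy_h(s):
    """Made-up shaping/value term that grows with the state index."""
    return 0.5 * s


states = [0, 1, 2, 3, 4]
total = trajectory_return(toy_g, toy_h, states)
# The h terms cancel pairwise, leaving only the endpoints:
# total == sum of g over visited states + h(last) - h(first)
```

Because every intermediate h(s) appears once with a plus sign and once with a minus sign, two trajectories with the same endpoints differ in return only through g, which is the sense in which g carries the environment-independent part of the reward.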

The Promise and Peril of Human Evaluation for Model Interpretability

Bernease Herman

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.AI, cs.LG, stat.ML

First published: 2017/11/20 (6 years ago)

Abstract: Transparency, user trust, and human comprehension are popular ethical motivations for interpretable machine learning. In support of these goals, researchers evaluate model explanation performance using humans and real world applications. This alone presents a challenge in many areas of artificial intelligence. In this position paper, we propose a distinction between descriptive and persuasive explanations. We discuss reasoning suggesting that functional interpretability may be correlated with cognitive function and user preferences. If this is indeed the case, evaluation and optimization using functional metrics could perpetuate implicit cognitive bias in explanations that threaten transparency. Finally, we propose two potential research directions to disambiguate cognitive function and explanation models, retaining control over the tradeoff between accuracy and interpretability.


Model interpretability aims at explaining the inner workings of a model, promoting transparency of any decisions the model makes. However, for the sake of human acceptance or understanding, these explanations seem to be geared more toward human trust than toward remaining faithful to the model.

**Idea**

There is a distinct difference, and a tradeoff, between persuasive and descriptive interpretations of a model: one promotes human trust while the other stays truthful to the model. Promoting the former can lead to a loss in transparency of the model.

**Questions to be answered:**

- How do we balance between a persuasive strategy and a descriptive strategy?

- How do we combat human cognitive bias?

**Solutions:**

- *Separating the descriptive and persuasive steps:*

    - We first generate a descriptive explanation, without trying to simplify it.

    - In our final steps we add persuasiveness to this explanation to make it more understandable.

- *Explicit inclusion of cognitive features:*

    - We would include attributes that affect our functional measures of interpretability in our objective function.

    - This approach has some drawbacks, however:

        - We would need to map the knowledge of the user, which is an expensive process.

        - Any features that we fail to add to the objective function would add to the human cognitive bias.

        - Increased complexity in optimizing a multi-objective loss function.

**Important terms:**

- *Explanation Strategy*: An explanation strategy is defined as an explanation vehicle coupled with the objective function, constraints, and hyper parameters required to generate a model explanation

- *Explanation model*: An explanation model is defined as the implementation of an explanation strategy, which is fit to a model that is to be interpreted.

- *Human Cognitive Bias*: If an explanation model is highly persuasive or tuned toward human trust as opposed to staying true to the model, the overall evaluation of this explanation would be highly biased compared to a descriptive model. This bias can arise from commonalities between human users across a domain, expertise in the application, or the expectation of a model explanation. Such bias is known as implicit human cognitive bias.

- *Persuasive Explanation Strategy*: A persuasive explanation strategy aims at convincing a user, or humanizing a model, so that the user feels more comfortable with the decisions generated by the model. Fidelity or truthfulness to the model in such a strategy can be very low, which can lead to ethical dilemmas as to where to draw the line between being persuasive and being descriptive. Persuasive strategies do promote human understanding and cognition, which are important aspects of interpretability; however, they fail to address certain other aspects, such as fidelity to the model.

- *Descriptive Explanation Strategy*: A descriptive explanation strategy stays true to the underlying model, and generates explanations with maximum fidelity to the model. Ideally such a strategy would describe exactly what the inner working of the underlying model is, which is the main purpose of model interpretation in terms of better understanding the actual workings of the model.


Interpretable & Explorable Approximations of Black Box Models

Lakkaraju, Himabindu and Kamar, Ece and Caruana, Rich and Leskovec, Jure

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Model interpretations must be true to the model but must also promote human understanding of the working of the model. To this end we would need an interpretability model that balances the two.

**Idea** : Although there exist model interpretations that balance fidelity and human cognition on a local level specific to an underlying model, there are no global model agnostic interpretation models that can achieve the same.

**Solution:**

- Break up each aspect of the underlying model into distinct, compact decision sets that have no overlap, to generate explanations that are faithful to the model and also cover all possible feature spaces of the model.

- How the solution dealt with:

    - *Fidelity* (staying true to the model): the labels in the approximation match those of the underlying model.

    - *Unambiguity* (single clear decision): compact decision sets in every feature space ensure unambiguity in the label assigned to it.

    - *Interpretability* (understandable by humans): intuitive rule-based representation, with a limited number of rules and predicates.

    - *Interactivity* (allow the user to focus on specific feature spaces): each feature space is divided into distinct compact sets, allowing users to focus on their area of interest.

- Details on a “decision set”:

    - Each decision set is a two-level decision (a nested if-then decision set), where the outer if-then clause specifies the sub-space, and the inner if-then clause specifies the logic by which the model assigns a label.

    - A default set is defined to assign labels to instances that do not satisfy any of the two-level decisions.

    - The advantage of such a model is that we do not need to trace the logic of an assigned label too far, making it less complex than a decision tree, which follows a similar if-then structure.

**Mapping fidelity vs interpretability**

- To see how their model handled fidelity vs interpretability, they plotted the rate of agreement (the fraction of instances whose approximation label matches the label assigned by the black box) against pre-defined measures of interpretability complexity, such as:

    - Number of predicates (sum of the widths of all decision sets)

    - Number of rules (a set of outer decision, inner decision, and classifier label)

    - Number of defined neighborhoods (outer if-then decisions)

- Their model reached higher agreement rates than other models at lower values of interpretability complexity.
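The two-level decision set and the agreement rate described above can be sketched as follows. This is a hypothetical illustration, not the paper's learning procedure: the rules are written by hand rather than optimized, and the features, thresholds, `toy_black_box`, and `PATIENTS` are made up.

```python
def predict(decision_set, default_label, x):
    """Return the label of the first two-level rule that fires, else the default."""
    for outer, inner, label in decision_set:
        if outer(x) and inner(x):   # outer picks the subspace, inner assigns the label
            return label
    return default_label


def agreement_rate(decision_set, default_label, black_box, instances):
    """Fraction of instances where the approximation matches the black box."""
    hits = sum(predict(decision_set, default_label, x) == black_box(x)
               for x in instances)
    return hits / len(instances)


# Hand-written toy rules; the outer clauses (age < 50 / age >= 50) do not overlap.
RULES = [
    (lambda x: x["age"] < 50, lambda x: x["bmi"] > 30, "risk"),
    (lambda x: x["age"] >= 50, lambda x: x["bmi"] > 25, "risk"),
]


def toy_black_box(x):
    """Stand-in for the opaque model being approximated."""
    return "risk" if x["bmi"] + 0.1 * x["age"] > 32 else "healthy"


PATIENTS = [
    {"age": 30, "bmi": 31.0},
    {"age": 60, "bmi": 27.0},
    {"age": 40, "bmi": 22.0},
    {"age": 10, "bmi": 30.5},
]
```

Here the approximation uses two rules and four predicates; it agrees with the toy black box on three of the four instances, which is the kind of fidelity-versus-complexity point the comparison plots.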


Decoupled Weight Decay Regularization

Ilya Loshchilov and Frank Hutter

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, cs.NE, math.OC

First published: 2017/11/14 (6 years ago)

Abstract: L$_2$ regularization and weight decay regularization are equivalent for standard stochastic gradient descent (when rescaled by the learning rate), but as we demonstrate this is \emph{not} the case for adaptive gradient algorithms, such as Adam. While common implementations of these algorithms employ L$_2$ regularization (often calling it "weight decay" in what may be misleading due to the inequivalence we expose), we propose a simple modification to recover the original formulation of weight decay regularization by \emph{decoupling} the weight decay from the optimization steps taken w.r.t. the loss function. We provide empirical evidence that our proposed modification (i) decouples the optimal choice of weight decay factor from the setting of the learning rate for both standard SGD and Adam and (ii) substantially improves Adam's generalization performance, allowing it to compete with SGD with momentum on image classification datasets (on which it was previously typically outperformed by the latter). Our proposed decoupled weight decay has already been adopted by many researchers, and the community has implemented it in TensorFlow and PyTorch; the complete source code for our experiments is available at https://github.com/loshchil/AdamW-and-SGDW


A few years ago, a paper came out demonstrating that adaptive gradient methods (which dynamically scale gradient updates in a per-parameter way according to the magnitudes of past updates) have a tendency to generalize less well than non-adaptive methods, even though adaptive methods sometimes look more performant in training and are easier to tune. That 2017 paper offered a theoretical explanation for this fact based on Adam learning less complex solutions than SGD; this paper offers a different one, namely that Adam performs poorly because it is typically implemented alongside L2 regularization, which has importantly different mechanical consequences under Adam than it does under SGD. Specifically, in SGD, L2 regularization, where the loss includes both the actual loss and an L2 norm of the weights, can be made equivalent to weight decay by choosing the right parameters for each (see proof below). https://i.imgur.com/79jfZg9.png However, for Adam, this equivalence doesn’t hold. In SGD, all the scaling factors are just constants, so each combination of learning rate and regularization parameter implies a certain weight decay parameter. Since Adam scales its parameter updates not by a constant learning rate but by a matrix of per-parameter adaptive factors, it’s not possible to pick the other hyperparameters in a way that gets you something similar to constant-parameter weight decay. To solve this, the authors suggest using an explicit weight decay term, rather than just doing implicit weight decay via L2 regularization. The distinction matters because the L2 norm is added to the *loss function*, so it becomes part of the gradient and thus gets scaled down by Adam through the same adaptive mechanism that scales down historically large gradients. 
When weight decay is moved out of the loss function and applied directly to the final update, so that it is no longer part of the adaptive scaling calculation, the authors find that 1) Adam is able to get performance comparable to SGD with momentum on image and sequence tasks (where it has previously had difficulty), and 2) even for SGD, where it was already possible to find a parameter setting that reproduces weight decay, having an explicit and decoupled weight decay parameter made parameters whose optimal values were previously dependent on one another (regularization and learning rate) more independent.  |
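To make the difference concrete, here is a minimal pure-Python sketch of a single update step under each scheme. The function names, the toy weights and gradients, and the choice to apply the decoupled decay as `decay * w` on the pre-update weights are illustrative assumptions; real implementations (and the paper's exact schedule multipliers) may differ in detail.

```python
import math


def sgd_l2_step(w, grad, lr, l2):
    """SGD on loss + (l2 / 2) * ||w||^2: the penalty adds l2 * w to the gradient."""
    return [wi - lr * (gi + l2 * wi) for wi, gi in zip(w, grad)]


def sgd_decay_step(w, grad, lr, decay):
    """SGD with explicit weight decay: shrink w by (1 - decay), then step."""
    return [(1.0 - decay) * wi - lr * gi for wi, gi in zip(w, grad)]


def adam_step(w, grad, m, v, t, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8,
              l2=0.0, decay=0.0):
    """One Adam step. `l2` passes through the adaptive scaling; `decay` does not."""
    new_w, new_m, new_v = [], [], []
    for wi, gi, mi, vi in zip(w, grad, m, v):
        gi = gi + l2 * wi                      # L2 penalty becomes part of the gradient
        mi = b1 * mi + (1.0 - b1) * gi         # first-moment estimate
        vi = b2 * vi + (1.0 - b2) * gi * gi    # second-moment estimate
        m_hat = mi / (1.0 - b1 ** t)           # bias correction
        v_hat = vi / (1.0 - b2 ** t)
        # Decoupled decay is subtracted outside the adaptive rescaling.
        new_w.append(wi - lr * m_hat / (math.sqrt(v_hat) + eps) - decay * wi)
        new_m.append(mi)
        new_v.append(vi)
    return new_w, new_m, new_v


w, grad, zeros = [1.0, 1.0], [0.1, 10.0], [0.0, 0.0]

# SGD: L2 with coefficient l2 matches weight decay with rate lr * l2.
sgd_a = sgd_l2_step(w, grad, lr=0.1, l2=0.5)
sgd_b = sgd_decay_step(w, grad, lr=0.1, decay=0.1 * 0.5)

# Adam, first step: compare no regularization, L2, and decoupled decay.
plain, _, _ = adam_step(w, grad, zeros, zeros, t=1)
with_l2, _, _ = adam_step(w, grad, zeros, zeros, t=1, l2=0.5)
decoupled, _, _ = adam_step(w, grad, zeros, zeros, t=1, decay=0.01)
```

In this toy setup the two SGD variants produce the same weights. Under Adam, the first-step update is normalized to roughly `lr` per coordinate, so the L2 term leaves the result almost unchanged, while the decoupled term shrinks each weight by `decay * w` regardless of gradient magnitude.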

The Marginal Value of Adaptive Gradient Methods in Machine Learning

Wilson, Ashia C. and Roelofs, Rebecca and Stern, Mitchell and Srebro, Nathan and Recht, Benjamin

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


In modern machine learning, gradient descent has diversified into a zoo of subtly distinct techniques, all designed, analytically, heuristically, or practically, to ease or accelerate our model’s path through multidimensional loss space. A solid contingent of these methods are Adaptive Gradient methods, which scale the size of gradient updates according to variously calculated historical averages or variances of the vector update, which has the effect of scaling down the updates along feature dimensions that have experienced large updates in the past. The intuition behind this is that we may want to effectively reduce the learning rate (by dividing by a larger number) along dimensions where there have been large or highly variable updates. These methods are commonly used because, as the name suggests, they adapt to the scale of your dataset and particular loss landscape, avoiding what might otherwise be a lengthy process of hyperparameter tuning. But this paper argues that, at least on a simplified problem, adaptive methods can reach overly simplified and overfit solutions that generalize to test data less well than a non-adaptive, more standard gradient descent method. The theoretical core of the paper is a proof showing limitations of the solution reached by adaptive gradient methods on a simple toy regression problem, on linearly separable data. It’s a little dodgy to try to recapitulate a mathematical proof in verbal form, but I’ll do my best, on the understanding that you should really read the full thing to fully verify the logic. The goal of the proof is to characterize the solution weight vector learned by different optimization systems. 
In this simplified environment, a core informational unit of your equations is X^T(y), which (in a world where labels are either -1 or 1) goes through each feature and takes a dot product between that feature vector (across examples) and the label vector. This has the effect of adding up all the feature values attached to positive examples, and then subtracting out (because of the multiplication by -1) all the feature values attached to negative examples. When this is summed, we get a per-feature value that will be positive if positive values of the feature tend to indicate positive labels, and negative if the opposite is true, in each case with a magnitude relating to the strength of that relationship. The claim made by the paper, supported by a lot of variable transformations, is that the solution learned by Adaptive Gradient methods reduces to a sign() operation on top of that vector, where magnitude information is lost. This happens because the running gradients that you divide out happen to correspond to the absolute value of this vector, and dividing a vector (which would be the core of the solution in the non-adaptive case) by its absolute value gives you a simple sign. The paper then goes on to show that this edge case can lead to pathological overfitting when feature dimensionality is high relative to the number of data points. (I wish I could give deeper insight on why this is the case, but I wasn’t really able to translate the math into intuition, outside of this fact of scaling by gradient magnitudes having the effect of losing potentially useful gradient information.) The big question from all this is...does this matter? Does it matter, in particular, beyond a toy dataset, and an artificially simple problem? The answer seems to be a pretty strong maybe. 
The authors test adaptive methods against hyperparameter-optimized SGD and momentum SGD (a variant, but without the adaptive aspects), and find that, while adaptive methods often learn more quickly at first, SGD approaches pull ahead later in training, first in terms of test set error at a time when adaptive methods’ training set error still seems to be decreasing, and later even in training set error. So there seems to be evidence that solutions learned by adaptive methods generalize worse than ones learned by SGD, at least on some image recognition and language-RNN models. (Though, interestingly, RMSProp comes close to the SGD test set levels, doing the best out of the adaptive methods.) Overall, this suggests to me that doing fully hyperparameter-optimized SGD might be a stronger design choice, but that adaptive methods retain popularity because of their (very appealing, practically) lack of need for hyperparameter tuning to at least do a *reasonable* job, even if their performance might have more of a ceiling than that of vanilla SGD.  |
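As a small illustration of the X^T(y) quantity discussed in the review above, the sketch below computes it on a made-up dataset and then applies sign(), which is the step where magnitude information is discarded. The data, labels, and function names are hypothetical; this does not reproduce the paper's proof, only the objects it talks about.

```python
def xt_y(X, y):
    """Per-feature dot product between the feature column and the +/-1 labels."""
    n_features = len(X[0])
    return [sum(row[j] * label for row, label in zip(X, y))
            for j in range(n_features)]


def sign(vec):
    """Elementwise sign: +1, -1, or 0. All magnitude information is dropped."""
    return [(x > 0) - (x < 0) for x in vec]


# Three examples, three features (made-up values), labels in {-1, +1}.
X = [
    [1.0, 0.1, 2.0],
    [2.0, 0.0, -1.0],
    [0.5, 0.2, 3.0],
]
y = [1, -1, 1]

correlation = xt_y(X, y)        # roughly [-0.5, 0.3, 6.0]
direction = sign(correlation)   # the weakly and strongly predictive features now look identical
```

Here the third feature is far more strongly aligned with the labels than the second, yet after sign() both contribute the same value, which is the loss of information the review describes.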

Inverse Reward Design

Dylan Hadfield-Menell and Smitha Milli and Pieter Abbeel and Stuart Russell and Anca Dragan

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.AI, cs.LG

First published: 2017/11/08

Abstract: Autonomous agents optimize the reward function we give them. What they don't know is how hard it is for us to design a reward function that actually captures what we want. When designing the reward, we might think of some specific training scenarios, and make sure that the reward will lead to the right behavior in those scenarios. Inevitably, agents encounter new scenarios (e.g., new types of terrain) where optimizing that same reward may lead to undesired behavior. Our insight is that reward functions are merely observations about what the designer actually wants, and that they should be interpreted in the context in which they were designed. We introduce inverse reward design (IRD) as the problem of inferring the true objective based on the designed reward and the training MDP. We introduce approximate methods for solving IRD problems, and use their solution to plan risk-averse behavior in test MDPs. Empirical results suggest that this approach can help alleviate negative side effects of misspecified reward functions and mitigate reward hacking.


The method they use basically tells the robot to reason as follows:

1. The human gave me a reward function $\tilde{r}$, selected in order to get me to behave the way they wanted.

2. So I should favor reward functions which produce that kind of behavior.

This amounts to doing RL (step 1) followed by IRL on the learned policy (step 2); see the final paragraph of section 4.
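The two reasoning steps above can be sketched on a toy problem with linear rewards over trajectory feature counts. Everything here is an assumption made for illustration: the finite set of candidate rewards, the finite set of training trajectories standing in for an MDP, the Boltzmann-style likelihood with parameter `beta`, the uniform prior, and the approximation of the normalizer by summing over the candidate set. The paper's own approximations may differ.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def best_trajectory(weights, trajectories):
    """Stand-in for RL/planning: pick the trajectory with the highest linear reward."""
    return max(trajectories, key=lambda phi: dot(weights, phi))


def ird_posterior(proxy, candidates, train_trajectories, beta=1.0):
    """Posterior over candidate true rewards given the designed proxy reward."""
    # Step 1: the behavior the proxy reward produces in the training environment.
    phi_proxy = best_trajectory(proxy, train_trajectories)
    # Behaviors the designer could have induced by choosing other proxies.
    induced = [best_trajectory(c, train_trajectories) for c in candidates]
    unnormalized = []
    for w in candidates:
        # Step 2: favor true rewards under which the proxy's behavior scores well
        # relative to the alternatives the designer could have picked.
        numerator = math.exp(beta * dot(w, phi_proxy))
        normalizer = sum(math.exp(beta * dot(w, phi)) for phi in induced)
        unnormalized.append(numerator / normalizer)
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Feature counts are (dirt, grass, lava); the training environment contains no lava.
train_trajectories = [(3, 1, 0), (1, 3, 0)]
proxy = (1.0, -1.0, 0.0)
candidates = [
    (1.0, -1.0, 0.0),    # lava is neutral
    (1.0, -1.0, -10.0),  # lava is very bad
    (-1.0, 1.0, 0.0),    # opposite preference between dirt and grass
]
posterior = ird_posterior(proxy, candidates, train_trajectories)
```

Because no training trajectory touches lava, the first two candidates are indistinguishable and receive equal posterior mass, while the third is disfavored. That remaining uncertainty about lava is what would justify risk-averse planning in a test environment that does contain it.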

|

Analyzing the Robustness of Nearest Neighbors to Adversarial Examples

Wang, Yizhen and Jha, Somesh and Chaudhuri, Kamalika

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp


Wang et al. discuss the robustness of $k$-nearest neighbors against adversarial perturbations, providing both a theoretical analysis and a robust 1-nearest neighbor variant. Specifically, for low $k$ it is shown that nearest neighbor is usually not robust. Here, robustness is judged in a distributional sense; so for fixed and low $k$, the lowest distance of any training sample to an adversarial sample tends to zero as the training set size increases. For $k \in \mathcal{O}(dn \log n)$, however, it is shown that $k$-nearest neighbor can be robust; the proof, which shows where the $dn \log n$ comes from, can be found in the paper. Finally, they propose a simple but robust $1$-nearest neighbor algorithm. The main idea is to remove samples from the training set that cause adversarial examples. In particular, a minimum distance between any two samples with different labels is enforced.
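The pruning idea in the last two sentences can be sketched as follows. This is a simplified greedy variant written for illustration, with hypothetical function names and toy data; it is order-dependent, and the paper's actual selection procedure may differ.

```python
import math


def prune_for_separation(points, labels, min_dist):
    """Greedily keep points so that any two kept points with different
    labels are at least `min_dist` apart."""
    kept = []
    for point, label in zip(points, labels):
        conflict = any(
            other_label != label and math.dist(point, other) < min_dist
            for other, other_label in kept
        )
        if not conflict:
            kept.append((point, label))
    return kept


def predict_1nn(kept, x):
    """Plain 1-nearest-neighbor prediction on the pruned training set."""
    return min(kept, key=lambda item: math.dist(item[0], x))[1]


# Toy 2-D data: each cluster contains one oppositely labeled point close by.
points = [(0.0, 0.0), (0.1, 0.0), (1.0, 0.0), (1.05, 0.0)]
labels = [0, 1, 1, 0]

kept = prune_for_separation(points, labels, min_dist=0.5)
prediction = predict_1nn(kept, (0.1, 0.0))
```

After pruning, a small perturbation around a kept point can no longer land closer to a point of the other label, since every oppositely labeled neighbor within `min_dist` has been removed.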

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

|

Interpretation of Neural Networks is Fragile

Ghorbani, Amirata and Abid, Abubakar and Zou, James Y.

arXiv e-Print archive - 2017 via Local Bibsonomy

Keywords: dblp
