arXiv is an e-print service in the fields of physics, mathematics, computer science, quantitative biology, quantitative finance and statistics.

Automatic chemical design using a data-driven continuous representation of molecules

Gómez-Bombarelli, Rafael and Duvenaud, David and Hernández-Lobato, José Miguel and Aguilera-Iparraguirre, Jorge and Hirzel, Timothy D. and Adams, Ryan P. and Aspuru-Guzik, Alán

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

I'll admit that I found this paper a bit of a letdown, relative to expectations rooted in its high citation count and my general excitement to see how deep learning could be brought to bear on molecular design. But before a critique, let's first walk through the mechanics of the authors' approach.

The method proposed is basically a very straightforward Variational Autoencoder, or VAE. It takes in a textual SMILES string representation of a molecular structure, uses an encoder to map that into a continuous vector representation, a decoder to map the vector representation back into a SMILES string, and an auxiliary predictor to predict properties of a molecule given the continuous representation. So, the training loss is a combination of the reconstruction loss (log probability of the true molecule under the distribution produced by the decoder) and the semi-supervised predictive loss. The hope with this model is that it would allow you to sample from a space of potential molecules by starting from an existing molecule and then optimizing the vector representation of that molecule to make it score higher on whatever property you want to optimize for.

https://i.imgur.com/WzZsCOB.png

The authors acknowledge that, in this setup, you're just producing a probability distribution over characters, and that the continuous vectors sampled from the latent space might not actually map to valid SMILES strings, and beyond that may well not correspond to chemically valid molecules. Empirically, they report that the proportion of valid generated molecules ranged between 1% and 70%. But they argue that it'd be too difficult to enforce those constraints, and instead just sample from the model and run the results through a hand-designed filter for molecular validity.
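
The combined objective described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function names, the squared-error property loss, the `prop_weight` factor, and the explicit KL term (standard for a VAE, though not spelled out in this summary) are all assumptions.

```python
import math

def reconstruction_nll(char_probs, target_ids):
    """Negative log probability of the true SMILES characters under the decoder.

    char_probs[t] is the decoder's distribution over characters at position t.
    """
    return -sum(math.log(probs[t]) for probs, t in zip(char_probs, target_ids))

def kl_to_standard_normal(mu, logvar):
    """KL divergence between N(mu, exp(logvar)) and N(0, I) (usual VAE regulariser)."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))

def joint_loss(char_probs, target_ids, mu, logvar, prop_pred, prop_true, prop_weight=1.0):
    """Reconstruction + KL + weighted property-prediction loss (assumed squared error)."""
    recon = reconstruction_nll(char_probs, target_ids)
    kl = kl_to_standard_normal(mu, logvar)
    prop = (prop_pred - prop_true) ** 2
    return recon + kl + prop_weight * prop
```

Optimizing a molecule for a property then amounts to following the gradient of the property predictor in latent space and decoding the result, which is where the validity problem discussed here comes in.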
In my view, this is the central weakness of the method proposed in this paper: the authors seem not to have tackled the question of either chemical viability or even syntactic correctness of the produced molecules. I found it difficult to nail down from the paper what the ultimate percentage of valid molecules was for points in latent space that were off of the training data. A table reports "percentage of 5000 randomly-selected latent points that decode to valid molecules after 1000 attempts," but I'm confused by what the 1000 attempts means here - does that mean we draw 1000 samples from the distribution given by the decoder, and see if *any* of those samples are valid? That would be a strange metric, if so, and perhaps it means something different, but it's hard to tell.

https://i.imgur.com/9sy0MXB.png

This paper made me really curious to see whether a GAN could do better in this space, since it would presumably be better at incentivizing syntactic correctness of produced strings (given that any deviation from correctness could be a signal for the discriminator), but it might also lead to issues around mode collapse, and when I last checked the literature, GANs on text data in particular were still not great.
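
To make the concern about the "after 1000 attempts" metric concrete, here is a small sketch of the "any of k decodes is valid" reading. It is only one possible interpretation of the table, and `toy_decode_once` and `toy_is_valid` are hypothetical stand-ins: a real check would decode with the trained model and validate with a cheminformatics toolkit.

```python
import random

def fraction_valid_after_k(latent_points, decode_once, is_valid, attempts, rng):
    """Fraction of latent points for which at least one of `attempts` decodes is valid."""
    hits = 0
    for z in latent_points:
        if any(is_valid(decode_once(z, rng)) for _ in range(attempts)):
            hits += 1
    return hits / len(latent_points)

def toy_decode_once(z, rng, p_valid=0.01):
    """Hypothetical stochastic decoder: emits a well-formed string 1% of the time."""
    return "CCO" if rng.random() < p_valid else "C(("

def toy_is_valid(smiles):
    """Hypothetical validity check: parentheses must balance."""
    return smiles.count("(") == smiles.count(")")
```

Under this reading, a decoder that is valid only 1% of the time per sample would still score close to 100% with 1000 attempts, since 1 - 0.99^1000 is roughly 0.99996. That is why the metric says little about per-sample validity.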

Low Data Drug Discovery with One-shot Learning

Altae-Tran, Han and Ramsundar, Bharath and Pappu, Aneesh S. and Pande, Vijay S.

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

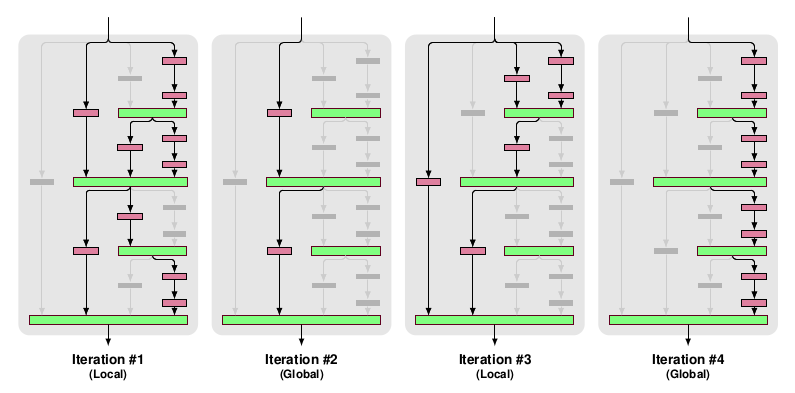

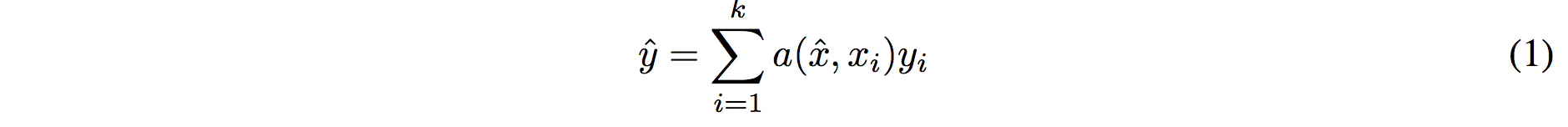

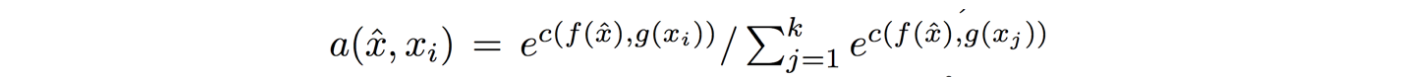

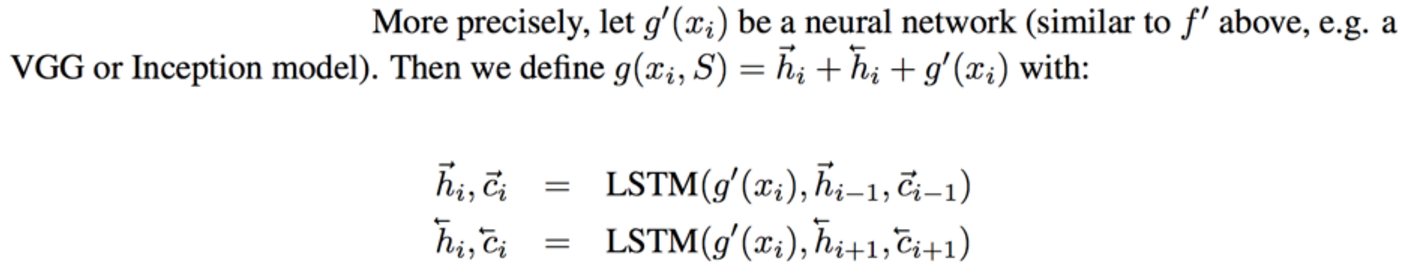

The goal of one-shot learning is to design a learning structure that can perform a new task (or, more canonically, add a new class to an existing task) using only one or a small number of examples of the new task or class. So, as an example: you'd want to be able to take one positive and one negative example of a given task and correctly classify subsequent points as either positive or negative. A common way of achieving this, and the way that the paper builds on, is to learn a parametrized function projecting both your labeled points (your "support set") and your unlabeled point (your "query") into an embedding space, and then assigning a class to your query according to how close it is to the support set points associated with each label. The hope is that, in the course of training on different but similar tasks, you've learned a metric space where nearby things tend to be of similar classes. This method is called a "matching network".

This paper has the specific objective of using such one-shot methods for drug discovery, and evaluates on tasks drawn from that domain, but most of the mechanics of the paper can be understood without reference to molecular data in particular.

In the simplest version of such a network, the query and support set points are embedded unconditionally - meaning that the query would be embedded in the same way regardless of the values in the support set, and that each point in the support set would be embedded without knowledge of the others. However, given how little data we're giving our model to work with, it might be valuable to allow our query embedder (f(x)) and support set embedder (g(x)) to depend on the values within the support set. Prior work had achieved this by:

1) Creating initial f'(x) and g'(x) query and support embedders.
2) Concatenating the embedded support points g'(x) into a single vector and running a bidirectional LSTM over the concatenation, which results in a representation g(x) of each input that incorporates information from g'(x_i) for all other x_i (albeit in a way that imposes a concatenation ordering that may not correspond to a meaningful order).
3) Calculating f(x) of your query point by using an attention mechanism to combine f'(x) with the contextualized embeddings g(x).

The authors of the current paper argue that this approach is suboptimal because of the artificially imposed ordering, and because it calculates g(x) prior to f(x) using asymmetrical model structures (though it's not super clear why this latter point is a problem). Instead, they propose a somewhat elaborate and difficult-to-follow attention-based mechanism. As best as I can understand, this is what they're suggesting:

https://i.imgur.com/4DLWh8H.png

1) Update the query embedding f(x) by calculating an attention distribution over the vector of current embeddings of support set points (here referred to as bolded <r>), pooling downward to a single aggregate embedding vector r, and then using an LSTM that takes in that aggregate vector and the prior update to generate a new update. This update, dz, is added to the existing query embedding estimate to get a new one.
2) Update the vector of support set embeddings by iteratively calculating an attention mapping between the vector of current support set embeddings and the original features g'(S), and using that attention mapping to create a new <r>, which, similar to the above, is fed into an LSTM to calculate the next update.

Since the model is evaluated on molecular tasks, all of the embedding functions are structured as graph convolutions.

Other than the obvious fact that attention is a great way of aggregating information in an order-independent way, the authors give disappointingly little justification of why they would expect their method to work meaningfully better than past approaches. Empirically, they do find that it performs slightly better than prior contextualized matching networks on held-out tasks of predicting toxicity and side effects with only a small number of examples from the held-out task. However, neither this paper's new method nor previous one-shot learning work is able to perform very well on the challenging MUV dataset, where held-out binding tasks involve structurally dissimilar molecules from those seen during training, suggesting that whatever generalization this method is able to achieve doesn't quite rise to the task of making inferences based on molecules with different structures.
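
The simplest (unconditional) matching-network prediction described above can be sketched as follows. This is an illustrative sketch, not the paper's model: the embeddings are assumed to be precomputed by some embedder, and the cosine-similarity-plus-softmax attention and the function names are assumptions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two non-zero embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def predict_positive_prob(query_emb, support_embs, support_labels):
    """Attention-weighted vote of the support labels (1 = positive, 0 = negative)."""
    weights = softmax([cosine(query_emb, s) for s in support_embs])
    return sum(w * y for w, y in zip(weights, support_labels))
```

The contextualized variants discussed above keep this final vote and change only how `query_emb` and `support_embs` are computed.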

Molecular Graph Convolutions: Moving Beyond Fingerprints

Kearnes, Steven M. and McCloskey, Kevin and Berndl, Marc and Pande, Vijay S. and Riley, Patrick

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

This paper was published after the 2015 Duvenaud et al. paper proposing a differentiable alternative to circular fingerprints of molecules: substituting out exact-match random hash functions to identify molecular structures with learned convolutional-esque kernels. As far as I can tell, the Duvenaud paper was the first to propose something we might today recognize as graph convolutions on atoms. I hoped this paper would build on that one, but it seems to be coming from a conceptually different direction, and it seems like it was more or less contemporaneous, for all that it was released later.

This paper introduces a structure that allows for more explicit message passing along bonds, by calculating atom features as a function of their incoming bonds, and then bond features as a function of their constituent atoms, and iterating this procedure, so information from an atom can be passed into a bond, then, on the next iteration, pulled in by another atom on the other end of that bond, and then pulled into that atom's bonds, and so forth. This has the effect of, similar to a convolutional or recurrent network, creating representations for each atom in the molecular graph that are informed by context elsewhere in the graph, to different degrees depending on distance from that atom.

More specifically, it defines:

- A function mapping from a prior layer atom representation to a subsequent layer atom representation, taking into account only information from that atom (Atom to Atom)
- A function mapping from a prior layer bond representation (indexed by the two atoms on either side of the bond) to a subsequent layer bond representation, taking into account only information from that bond at the prior layer (Bond to Bond)
- A function creating a bond representation by applying a shared function to the atoms at either end of it, and then combining those representations with an aggregator function (Atoms to Bond)
- A function creating an atom representation by applying a shared function to all the bonds that atom is a part of, and then combining those results with an aggregator function (Bonds to Atom)

At the top of this set of layers, when each atom has had information diffused into it by other parts of the graph, depending on the network depth, the authors aggregate the per-atom representations into histograms (basically, instead of summing or max-pooling feature-wise, creating coarse distributions of each feature), and use that for supervised tasks.

One frustration I had with this paper is that it doesn't do a great job of highlighting its differences with and advantages over prior work; in particular, I think it doesn't do a very good job arguing that its performance is superior to the earlier Duvenaud work. That said, for all that the presentation wasn't ideal, the idea of message passing is an important one in graph convolutions, and would end up becoming more standard in later works.
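
The alternating atom/bond updates described above can be illustrated with a toy sketch. Everything here is a simplifying assumption: features are single numbers, the learned shared functions are replaced by the identity, and the aggregator is a plain sum, so this shows only how information flows, not the paper's actual layers.

```python
def atoms_to_bonds(atom_feats, bonds):
    """Atoms to Bond: each bond aggregates (sums) the features of its two end atoms."""
    return {(i, j): atom_feats[i] + atom_feats[j] for (i, j) in bonds}

def bonds_to_atoms(atom_feats, bond_feats):
    """Bonds to Atom: each atom keeps its own feature and adds those of its bonds."""
    new_feats = list(atom_feats)  # Atom to Atom: identity carry-over
    for (i, j), b in bond_feats.items():
        new_feats[i] += b
        new_feats[j] += b
    return new_feats

def message_passing(atom_feats, bonds, steps):
    """Alternate the two updates `steps` times and return the final atom features."""
    for _ in range(steps):
        bond_feats = atoms_to_bonds(atom_feats, bonds)
        atom_feats = bonds_to_atoms(atom_feats, bond_feats)
    return atom_feats
```

On a three-atom chain 0-1-2 with a signal only on atom 0, one step leaves atom 2 untouched, and a second step is needed before atom 2 sees anything. This is the depth-dependent context described above.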

Boosting Docking-based Virtual Screening with Deep Learning

Janaina Cruz Pereira and Ernesto Raul Caffarena and Cicero dos Santos

arXiv e-Print archive - 2016 via Local arXiv

Keywords: q-bio.QM

First published: 2016/08/17 (7 years ago)

Abstract: In this work, we propose a deep learning approach to improve docking-based virtual screening. The introduced deep neural network, DeepVS, uses the output of a docking program and learns how to extract relevant features from basic data such as atom and residues types obtained from protein-ligand complexes. Our approach introduces the use of atom and amino acid embeddings and implements an effective way of creating distributed vector representations of protein-ligand complexes by modeling the compound as a set of atom contexts that is further processed by a convolutional layer. One of the main advantages of the proposed method is that it does not require feature engineering. We evaluate DeepVS on the Directory of Useful Decoys (DUD), using the output of two docking programs: AutodockVina1.1.2 and Dock6.6. Using a strict evaluation with leave-one-out cross-validation, DeepVS outperforms the docking programs in both AUC ROC and enrichment factor. Moreover, using the output of AutodockVina1.1.2, DeepVS achieves an AUC ROC of 0.81, which, to the best of our knowledge, is the best AUC reported so far for virtual screening using the 40 receptors from DUD.

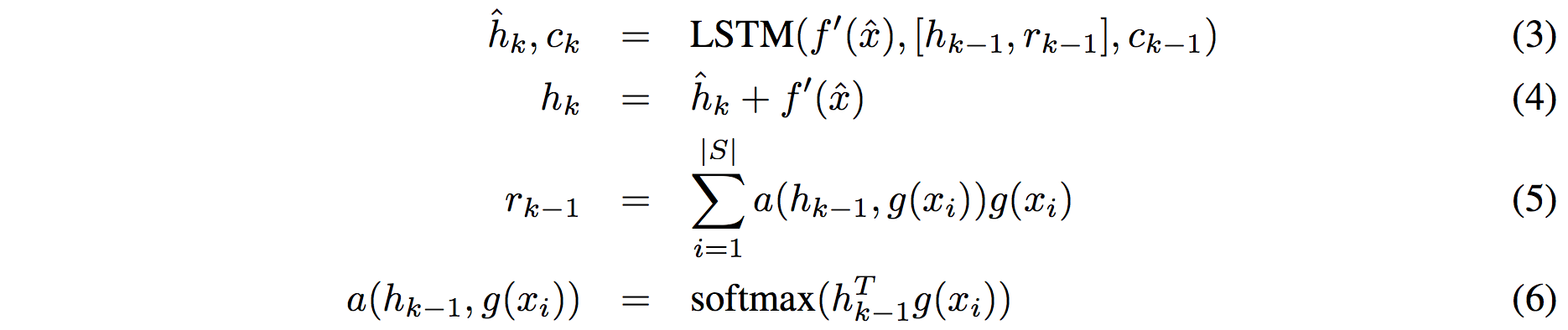

My objective in reading this paper was to gain another perspective on, and thus a more well-grounded view of, machine learning scoring functions for docking-based prediction of ligand/protein binding affinity. As quick background context, these models are useful because many therapeutic compounds act by binding to a target protein, and it can be valuable to prioritize doing wet lab testing on compounds that are predicted to have a stronger binding affinity. Docking systems work by predicting the pose in which a compound (or ligand) would bind to a protein, and then scoring prospective poses based on how likely such a pose would be to have high binding affinity.

It's important to note that there are two predictive components in such a pipeline, and thus two sources of potential error: the searching over possible binding poses, done by physics-based systems, and the scoring of the affinity of a given pose, assuming that were actually the correct one. Therefore, in the second kind of modeling, which this paper focuses on, you take in features *of a particular binding pose*, which include information like which atoms of the compound are near which atoms of the protein.

The actual neural network structure used here was admittedly a bit underwhelming (though, to be fair, many of the ideas it seems to be gesturing at wouldn't be properly formalized until Graph Convolutional Networks came around). I'll describe the network mechanically first, and then offer some commentary on the design choices.

https://i.imgur.com/w9wKS10.png

1. For each atom (a) in the compound, a set of neighborhood features is defined. The neighborhood is based on two hyperparameters, one for "how many atoms from the protein should be included," and one for "how many atoms from the compound should be included". In both cases, you start by adding the closest atom from either the compound or protein, and as the hyperparameter values increase, you add in farther-away atoms. The neighborhood features here are: (i) What are the types of the atoms? (ii) What are the partial charges of the atoms? (iii) How far are the atoms from the reference atom? (iv) Which amino acid within the protein do the protein atoms come from?
2. All of these features are turned into embeddings. Yes, all of them, even the ones (distance and charge) that are continuous values. Coming from a machine learning perspective, this is... pretty weird as a design choice. The authors straight-up discretize the distance values, and then use those as discrete values for the purpose of looking up embeddings. (So, you'd have one embedding vector for distance 0.25-0.5, and a different one for 0.0-0.25, say.)
3. The embeddings are concatenated together into a single "atom neighborhood vector" based on a predetermined ordering of the neighbor atoms and their property vectors. We now have one atom neighborhood vector for each atom in the compound.
4. The authors then do what they call a convolution over the atom neighborhood vectors. But it doesn't act like a normal convolution in the sense of mixing information from nearby regions of atom space. It is basically just a fully connected layer that's applied to each atom neighborhood vector separately, but with shared weights, so the same layer is applied to each neighborhood vector. They then do a feature-wise max pool across the layer-transformed versions of the neighborhood vectors, getting you one vector for the full compound.
5. This single vector is then put into a softmax, which predicts whether this ligand (in this particular pose) will have strong binding with the protein.

Some thoughts on what's going on here. First, I really don't have a good explanation for why they'd have needed to embed a discretized version of the continuous variables, and since they don't do an ablation test of that design choice, it's hard to know if it mattered.

Second, it's interesting to see, in their "convolution" (which I think is more accurately described as a Siamese Network, since it's only convolution-like insofar as there are shared weights), the beginning intuitions of what would become Graph Convolutions. The authors knew that they needed methods to aggregate information from arbitrary numbers of atoms, and also that they should learn representations that have visibility onto neighborhoods of atoms, rather than single ones, but they do so in an entirely hand-engineered way: manually specifying a fixed neighborhood and pulling in information from all those neighbors equally, in a big concatenated vector. By contrast, when Graph Convolutions come along, they act by defining a "message-passing" function for features to aggregate across graph edges (here: molecular bonds or binary indicators of being "near enough" to another atom), which similarly allows information to be combined across neighborhoods. And, then, the 'convolution' is basically just a simple aggregation: necessary because there's no canonical ordering of elements within a graph, so you need an order-agnostic aggregation like a sum or max pool.

The authors find that their method is able to improve on the hand-designed scoring functions within the docking programs. However, they also find (similar to another paper I read recently) that their model is able to do quite well without even considering structural relationships of the binding pose with the protein, which suggests that the dataset (DUD - a dataset of 40 proteins with ~4K correctly binding ligands, and ~35K ligands paired with proteins they don't bind to) and the problem given to the model are too easy. It's also hard to tell how I should consider AUCs within this problem - it's one thing to be better than an existing method, but how much value do you get from a given unit of AUC improvement, when it comes to actually meaningfully reducing wet lab time used on testing compounds?

I don't know that there's much to take away from this paper in terms of useful techniques, but it is interesting to see the evolution of ideas that would later be more cleanly formalized in other works.
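
Steps 2 and 4 of the walkthrough above (discretizing distances for embedding lookup, then a shared-weight layer followed by a feature-wise max pool) can be sketched like this. The bin width, number of bins, tanh activation, and function names are all assumptions for illustration, not values taken from the paper.

```python
import math

def distance_bin(distance, bin_width=0.25, n_bins=8):
    """Map a continuous distance to a discrete bin index used for embedding lookup."""
    return min(int(distance / bin_width), n_bins - 1)

def shared_layer(vec, weights, bias):
    """One fully connected layer with tanh; the same weights are used for every atom."""
    return [math.tanh(sum(w * x for w, x in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]

def compound_vector(neighborhood_vecs, weights, bias):
    """Apply the shared layer to each atom neighborhood vector, then max-pool per feature."""
    transformed = [shared_layer(v, weights, bias) for v in neighborhood_vecs]
    return [max(column) for column in zip(*transformed)]
```

Because the max pool runs over atoms, the result does not depend on the order in which the atoms are listed, and compounds with different numbers of atoms map to vectors of the same length.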

A Theoretical Framework for Robustness of (Deep) Classifiers against Adversarial Examples

Beilun Wang and Ji Gao and Yanjun Qi

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, cs.CR, cs.CV

First published: 2016/12/01 (7 years ago)

Abstract: Most machine learning classifiers, including deep neural networks, are vulnerable to adversarial examples. Such inputs are typically generated by adding small but purposeful modifications that lead to incorrect outputs while imperceptible to human eyes. The goal of this paper is not to introduce a single method, but to make theoretical steps towards fully understanding adversarial examples. By using concepts from topology, our theoretical analysis brings forth the key reasons why an adversarial example can fool a classifier ($f_1$) and adds its oracle ($f_2$, like human eyes) in such analysis. By investigating the topological relationship between two (pseudo)metric spaces corresponding to predictor $f_1$ and oracle $f_2$, we develop necessary and sufficient conditions that can determine if $f_1$ is always robust (strong-robust) against adversarial examples according to $f_2$. Interestingly our theorems indicate that just one unnecessary feature can make $f_1$ not strong-robust, and the right feature representation learning is the key to getting a classifier that is both accurate and strong-robust.

Wang et al. discuss an alternative definition of adversarial examples, taking into account an oracle classifier. Adversarial perturbations are usually constrained in their norm (e.g., $L_\infty$ norm for images); however, the main goal of this constraint is to ensure label invariance: if the image didn't change notably, the label didn't change either. As an alternative formulation, the authors consider an oracle for the task, e.g., humans for image classification tasks. Then, an adversarial example is defined as a slightly perturbed input whose predicted label changes, but whose true label (i.e., the oracle's label) does not change. Additionally, the perturbation can be constrained in some norm; specifically, the perturbation can be constrained on the true manifold of the data, as represented by the oracle classifier. Based on this notion of adversarial examples, Wang et al. argue that deep neural networks are not robust as they utilize over-complete feature representations.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
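
The oracle-based definition can be written compactly. The notation below is a sketch under assumptions: $f_1$ (predictor) and $f_2$ (oracle) follow the abstract, while the oracle-side distance $d_2$ and the threshold $\delta$ are placeholder symbols introduced here and may not match the paper's exact formulation.

```latex
% x' is an adversarial example for x if the predictor's label changes
% while the oracle's label does not, and x' stays close to x as
% measured in the oracle's (pseudo)metric space.
\text{find } x' \quad \text{such that} \quad
f_1(x') \neq f_1(x), \qquad
f_2(x') = f_2(x), \qquad
d_2(x, x') < \delta
```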

DisturbLabel: Regularizing CNN on the Loss Layer

Lingxi Xie and Jingdong Wang and Zhen Wei and Meng Wang and Qi Tian

Conference on Computer Vision and Pattern Recognition - 2016 via Local CrossRef

Keywords:

Xie et al. propose to regularize deep neural networks by randomly disturbing (i.e., changing) training labels. In particular, for each training batch, they randomly change the label of each sample with probability $\alpha$; when changing a label, it's sampled uniformly from the set of labels. In experiments, the authors show that this sort of loss regularization improves generalization. However, Dropout usually performs better; in their case, only the combination with Dropout leads to noticeable improvements on MNIST and SVHN, and only compared to no regularization and data augmentation at all. In their discussion, they offer two interpretations of disturbing labels. First, it can be seen as learning an ensemble of models on different noisy label sets; second, it can be seen as implicitly performing data augmentation. Both interpretations are reasonable, but do not provide a definite answer to why disturbing training labels should work well.

https://i.imgur.com/KH36sAM.png

Figure 1: Comparison of training and testing error rates during training for no regularization, Dropout and DisturbLabel.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
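
The label-disturbing step described in the summary can be sketched as below. The function name and the explicit `rng` argument are assumptions for illustration. Note that the uniform draw can return the original label, which matches "sampled uniformly from the set of labels" as stated above.

```python
import random

def disturb_labels(labels, num_classes, alpha, rng):
    """With probability alpha, replace each label by one drawn uniformly from all classes."""
    disturbed = []
    for y in labels:
        if rng.random() < alpha:
            disturbed.append(rng.randrange(num_classes))
        else:
            disturbed.append(y)
    return disturbed
```

In training, this would be applied to each mini-batch independently, so the same sample can carry different labels in different epochs.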

Defensive Distillation is Not Robust to Adversarial Examples

Carlini, Nicholas and Wagner, David A.

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

Carlini and Wagner show that defensive distillation as a defense against adversarial examples does not work. Specifically, they show that the attack by Papernot et al. [1] can easily be modified to attack distilled networks. Interestingly, the main change is to introduce a temperature in the last softmax layer. This temperature, when chosen high enough, will take care of aligning the gradients from the softmax layer and from the logit layer; otherwise, they will have significantly different magnitudes. Personally, I found that this also aligns with the observations in [2], where Carlini and Wagner also find that attack objectives defined on the logits work considerably better.

[1] N. Papernot, P. McDaniel, X. Wu, S. Jha, A. Swami. Distillation as a defense to adversarial perturbations against deep neural networks. SP, 2016.

[2] N. Carlini, D. Wagner. Towards Evaluating the Robustness of Neural Networks. ArXiv, 2016.

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).
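
The effect of the temperature on gradient magnitude can be illustrated numerically. This is a sketch under assumptions: the logit values are made up to mimic the large logits a distilled network can produce, and the function names are hypothetical. It uses the standard identity that the derivative of a softmax probability with respect to its own logit is $p_i (1 - p_i) / T$.

```python
import math

def softmax_t(logits, temperature):
    """Softmax of logits divided by a temperature (numerically stable)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def grad_top_prob_wrt_own_logit(logits, temperature):
    """d p_i / d z_i = p_i * (1 - p_i) / T for the most likely class i."""
    p = softmax_t(logits, temperature)
    i = max(range(len(p)), key=p.__getitem__)
    return p[i] * (1.0 - p[i]) / temperature
```

With logits `[100.0, 0.0]`, the gradient at temperature 1 is numerically zero because the softmax saturates, whereas at temperature 100 it is on the order of 1e-3, which gives a gradient-based attack something to follow.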

V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation

Milletari, Fausto and Navab, Nassir and Ahmadi, Seyed-Ahmad

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

Medical image segmentation has been a classic problem in medical image analysis, with a large body of research devoted to it. Many approaches worked by designing hand-crafted features, while others worked using global or local intensity cues. These approaches were sometimes extended to 3D, but most of the algorithms work with 2D images (or 2D slices of a 3D image). It is hypothesized that using the full 3D volume of a scan may improve segmentation performance due to the amount of context that the algorithm can be exposed to, but such approaches have been very expensive computationally. Deep learning approaches like ConvNets have been applied to segmentation problems, and they are computationally very efficient at inference time due to highly optimized linear algebra routines. Although these approaches form the state of the art, they still utilize 2D views of a scan, and fail to work well on full 3D volumes.

To this end, Milletari et al. propose a new CNN architecture consisting of volumetric convolutions with 3D kernels, applied to full 3D MRI prostate scans and trained on the task of segmenting the prostate from the images. The network architecture primarily consists of 3D convolutions which use volumetric kernels of size 5x5x5 voxels. As the data proceeds through different stages along the compression path, its resolution is reduced. This is performed through convolution with 2x2x2 voxel kernels applied with stride 2, hence there are no pooling layers in the architecture. The architecture resembles an encoder-decoder: the encoder part (the compression, or downsampling, path) reduces the size of the signal presented as input and increases the receptive field of the features being computed in subsequent network layers. Each stage of the left part of the network computes twice as many features as the previous stage.

The right portion of the network extracts features and expands the spatial support of the lower-resolution feature maps in order to gather and assemble the necessary information to output a two-channel volumetric segmentation. The two feature maps computed by the very last convolutional layer, which has a 1x1x1 kernel size and produces outputs of the same size as the input volume, are converted to probabilistic segmentations of the foreground and background regions by applying a softmax voxelwise.

In order to train the network, the authors propose to use a Dice loss function. The CNN is trained end-to-end on a dataset of 50 prostate MRI scans. The network achieved a Dice score of 0.869 $\pm$ 0.033, and beat the other state-of-the-art models.
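
As a sketch of the Dice objective mentioned above, the snippet below computes a soft Dice coefficient over flattened voxel lists, using squared terms in the denominator. The `eps` smoothing term, the function names, and the "1 minus Dice" loss convention are assumptions, not details confirmed by this summary.

```python
def dice_coefficient(pred, target, eps=1e-8):
    """Soft Dice: 2 * sum(p * g) / (sum(p^2) + sum(g^2)) over all voxels.

    pred holds foreground probabilities, target holds 0/1 ground-truth labels.
    """
    intersection = sum(p * g for p, g in zip(pred, target))
    denom = sum(p * p for p in pred) + sum(g * g for g in target)
    return (2.0 * intersection + eps) / (denom + eps)

def dice_loss(pred, target):
    """A common convention: minimise 1 - Dice so that perfect overlap gives zero loss."""
    return 1.0 - dice_coefficient(pred, target)
```

Because the score is a ratio of overlap to total foreground mass, it is not dominated by the many background voxels, which matters when the prostate occupies only a small part of the volume.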

Robotic Grasp Detection using Deep Convolutional Neural Networks

Kumra, Sulabh and Kanan, Christopher

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

# **Introduction**

### **Goal of the paper**

* The goal of this paper is to use an RGB-D image to find the best pose for grasping an object using a parallel plate gripper.

* The algorithm also aims to provide an open-loop method for manipulating the object using vision data.

### **Previous Research**

* Even state-of-the-art grasp detection algorithms fail under real-world conditions and cannot run in real time.

* To perform grasping a 7D grasp representation is used. But usually a 5D grasping representation is used and this is projected back into 7D space.

* Previous methods directly found the 7D pose representation using only the vision data.

* Compared to older computer vision techniques like sliding-window classifiers, deep learning methods are more robust to occlusion, rotation and scaling.

* Grasp point detection gave high accuracy (> 92%) but was helpful only for grasping cloths or towels.

### **Method**

* Grasp detection is generally a computer vision problem.

* The algorithm given by the paper uses computer vision to find the grasp as a 5D representation. The 5D representation is cheaper to compute, so it can be used in real time.

* General grasp planning algorithms can be divided into three distinct sequential phases:

1. Grasp detection

1. Trajectory planning

1. Grasp execution

* One of the main tasks in grasping algorithms is to find the best place to grasp and to map the vision data to coordinates that can be used for manipulation.

* The method makes use of three neural networks:

1. A 50-layer deep residual network (ResNet-50) that extracts features from the RGB image. This network is pretrained on the ImageNet dataset.

1. Another neural network that extracts features from the depth image.

1. The outputs of the two networks are fed into a third network that gives the final grasp configuration as its output.

* The robot grasp configuration is given as a function of x, y, w, h and theta, where (x, y) is the centre of the grasp rectangle, w and h are its width and height, and theta is its orientation angle.

* Since very deep networks are used (more than 20 layers), residual layers are used; they help improve the loss surface of the network and reduce the vanishing gradient problem.

* This paper gives two types of networks for grasp detection:

1. Uni-Modal Grasp Predictor

* This model uses only a 2D RGB image: features are extracted from the input image and then used to give the best pose.

* A linear SVM is used as the final classifier to classify the best pose for the object.

1. Multi-Modal Grasp Predictor

* This model makes use of both the 2D RGB image and the depth information in the RGB-D image to extract the grasp.

* The RGB-D image is decomposed into an RGB image and a depth image.

* Both images are passed through their networks, and the outputs are then combined and fed to a shallow CNN.

* The output of the shallow CNN is the best grasp for the object.

### **Experiments and Results**

* The experiments are done on the Cornell Grasp dataset.

* Almost no preprocessing is done on the images apart from resizing.

* The results of the algorithm given by this paper are compared to unimodal methods that use only RGB images.

* A predicted grasp is counted as correct if its angle is within 30 degrees of the ground-truth grasp and its Jaccard similarity with the ground-truth rectangle is more than 25%.
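
The validation rule in the last bullet can be sketched as follows. This is only an illustrative sketch, not the authors' evaluation code: the function names are hypothetical, a grasp is assumed to be a tuple `(x, y, w, h, theta)` with theta in degrees, and angles are assumed to be equivalent modulo 180 degrees because a parallel gripper is symmetric. The overlap of two rotated rectangles is computed with Sutherland-Hodgman polygon clipping.

```python
import math

def rect_corners(x, y, w, h, theta_deg):
    """Corners of a grasp rectangle centred at (x, y), in counter-clockwise order."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(x + dx * c - dy * s, y + dx * s + dy * c) for dx, dy in half]

def polygon_area(pts):
    """Shoelace formula; positive for counter-clockwise polygons."""
    return 0.5 * sum(x1 * y2 - x2 * y1
                     for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]))

def clip(subject, clipper):
    """Sutherland-Hodgman: clip a convex polygon by a convex CCW polygon."""
    def inside(p, a, b):
        # True if p lies on or to the left of the directed edge a -> b.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # Intersection of the line through p, q with the line through a, b.
        (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p, q, a, b
        den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
        d1, d2 = x1 * y2 - y1 * x2, x3 * y4 - y3 * x4
        return ((d1 * (x3 - x4) - (x1 - x2) * d2) / den,
                (d1 * (y3 - y4) - (y1 - y2) * d2) / den)

    output = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break
        inp, output = output, []
        for p, q in zip(inp, inp[1:] + inp[:1]):
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(intersect(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(intersect(p, q, a, b))
    return output

def jaccard(g1, g2):
    """Intersection over union of two grasp rectangles (x, y, w, h, theta)."""
    overlap = clip(rect_corners(*g1), rect_corners(*g2))
    inter = abs(polygon_area(overlap)) if len(overlap) >= 3 else 0.0
    return inter / (g1[2] * g1[3] + g2[2] * g2[3] - inter)

def is_correct(pred, truth, max_angle=30.0, min_jaccard=0.25):
    """Rectangle metric: angle within 30 degrees and Jaccard above 25%."""
    diff = abs(pred[4] - truth[4]) % 180.0
    diff = min(diff, 180.0 - diff)  # assumption: angles equivalent modulo 180
    return diff < max_angle and jaccard(pred, truth) > min_jaccard
```

For example, `is_correct((2, 0, 4, 2, 0), (0, 0, 4, 2, 0))` compares a prediction shifted by half a rectangle width against the ground truth; the two rectangles share a third of their union, so the prediction passes the 25% threshold.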

### **Conclusion**

* This paper shows that deep convolutional neural networks can be used to predict the grasping pose for an object.

* Another major observation is that the deep residual layers help extract better features of the object to be grasped from the image.

* The new model was able to run at real-time speeds.

* The model gave state-of-the-art results on the Cornell Grasp dataset.

----

### **Open research questions**

* Applying transfer learning concepts to try the model on real robots.

* Trying the model in industrial environments on objects of different sizes and shapes.

* Formulating the grasping problem as a regression problem.

|

Temporal Action Localization in Untrimmed Videos via Multi-stage CNNs

Zheng Shou and Dongang Wang and Shih-Fu Chang

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/01/09 (8 years ago)

Abstract: We address temporal action localization in untrimmed long videos. This is important because videos in real applications are usually unconstrained and contain multiple action instances plus video content of background scenes or other activities. To address this challenging issue, we exploit the effectiveness of deep networks in temporal action localization via three segment-based 3D ConvNets: (1) a proposal network identifies candidate segments in a long video that may contain actions; (2) a classification network learns one-vs-all action classification model to serve as initialization for the localization network; and (3) a localization network fine-tunes on the learned classification network to localize each action instance. We propose a novel loss function for the localization network to explicitly consider temporal overlap and therefore achieve high temporal localization accuracy. Only the proposal network and the localization network are used during prediction. On two large-scale benchmarks, our approach achieves significantly superior performances compared with other state-of-the-art systems: mAP increases from 1.7% to 7.4% on MEXaction2 and increases from 15.0% to 19.0% on THUMOS 2014, when the overlap threshold for evaluation is set to 0.5.

|

## Segment-CNN

**Summary**: this paper uses a three-stage 3D CNN to identify candidate proposals, recognize actions and localize temporal boundaries.

**Models**: the pipeline can be divided into three parts: generating proposals, selecting proposals and refining their temporal boundaries, and using NMS to remove redundant proposals.

1. Generate multi-scale segments (16, 32, 64, 128, 256 and 512 frames) using a sliding window with 75% overlap. High computational complexity!

2. Network: each stage of the three-stage network is a 3D ConvNet followed by 3 FC layers.

* The proposal network is basically a classifier that judges whether each proposal contains an action or not.

* The classification network classifies each proposal that the proposal network considers valid into background and K action categories.

* The localization network functions as a scoring system that raises the scores of proposals with high overlap with the corresponding ground truth while decreasing the others.

|

FlowNet 2.0: Evolution of Optical Flow Estimation with Deep Networks

Eddy Ilg and Nikolaus Mayer and Tonmoy Saikia and Margret Keuper and Alexey Dosovitskiy and Thomas Brox

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/12/06 (7 years ago)

Abstract: The FlowNet demonstrated that optical flow estimation can be cast as a learning problem. However, the state of the art with regard to the quality of the flow has still been defined by traditional methods. Particularly on small displacements and real-world data, FlowNet cannot compete with variational methods. In this paper, we advance the concept of end-to-end learning of optical flow and make it work really well. The large improvements in quality and speed are caused by three major contributions: first, we focus on the training data and show that the schedule of presenting data during training is very important. Second, we develop a stacked architecture that includes warping of the second image with intermediate optical flow. Third, we elaborate on small displacements by introducing a sub-network specializing on small motions. FlowNet 2.0 is only marginally slower than the original FlowNet but decreases the estimation error by more than 50%. It performs on par with state-of-the-art methods, while running at interactive frame rates. Moreover, we present faster variants that allow optical flow computation at up to 140fps with accuracy matching the original FlowNet.

|

- Implementations:

- https://hub.docker.com/r/mklinov/caffe-flownet2/

- https://github.com/lmb-freiburg/flownet2-docker

- https://github.com/lmb-freiburg/flownet2

- Explanations:

- A Brief Review of FlowNet - not a clear explanation

https://medium.com/towards-data-science/a-brief-review-of-flownet-dca6bd574de0

- https://www.youtube.com/watch?v=JSzUdVBmQP4

Supplementary material:

http://openaccess.thecvf.com/content_cvpr_2017/supplemental/Ilg_FlowNet_2.0_Evolution_2017_CVPR_supplemental.pdf

|

Multi-layer Representation Learning for Medical Concepts

Edward Choi and Mohammad Taha Bahadori and Elizabeth Searles and Catherine Coffey and Jimeng Sun

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG

First published: 2016/02/17 (8 years ago)

Abstract: Learning efficient representations for concepts has been proven to be an important basis for many applications such as machine translation or document classification. Proper representations of medical concepts such as diagnosis, medication, procedure codes and visits will have broad applications in healthcare analytics. However, in Electronic Health Records (EHR) the visit sequences of patients include multiple concepts (diagnosis, procedure, and medication codes) per visit. This structure provides two types of relational information, namely sequential order of visits and co-occurrence of the codes within each visit. In this work, we propose Med2Vec, which not only learns distributed representations for both medical codes and visits from a large EHR dataset with over 3 million visits, but also allows us to interpret the learned representations confirmed positively by clinical experts. In the experiments, Med2Vec displays significant improvement in key medical applications compared to popular baselines such as Skip-gram, GloVe and stacked autoencoder, while providing clinically meaningful interpretation.

|

This model, called Med2Vec, is inspired by Word2Vec: it is Word2Vec for time series of patient visits described by ICD codes. The model learns embeddings for medical codes as well as for the demographics of patients.

https://i.imgur.com/Zjj6Xxz.png

The context is temporal. For each visit $x_t$ given as input, the model predicts $x_{t+1}$ and $x_{t-1}$, or more neighbouring visits depending on the temporal window size.
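
To make the temporal context concrete, here is a minimal sketch of how (input visit, target visit) pairs could be enumerated for one patient. The function name and the list-of-code-sets layout are assumptions for illustration, not the paper's implementation.

```python
def visit_context_pairs(visits, window=1):
    """Pair each visit x_t with its neighbours x_{t-window} .. x_{t+window}.

    `visits` is one patient's chronologically ordered list of visits,
    each visit being a collection of medical codes (hypothetical layout).
    """
    pairs = []
    for t, visit in enumerate(visits):
        lo = max(0, t - window)
        hi = min(len(visits), t + window + 1)
        for j in range(lo, hi):
            if j != t:  # a visit is not its own context
                pairs.append((visit, visits[j]))
    return pairs

# Three visits with made-up ICD-style codes.
patient = [{"E11"}, {"E11", "I10"}, {"I10"}]
pairs = visit_context_pairs(patient, window=1)
```

With `window=1` the middle visit is paired with both of its neighbours, while the first and last visits each have a single neighbour.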

|

Tree-to-Sequence Attentional Neural Machine Translation

Akiko Eriguchi and Kazuma Hashimoto and Yoshimasa Tsuruoka

Association for Computational Linguistics - 2016 via Local CrossRef

Keywords:

|

This work extends sequence-to-sequence models for machine translation by using syntactic information on the source language side. This paper looks at the translation task where English is the source language, and Japanese is the target language. The dataset is the ASPEC corpus of scientific paper abstracts that seem to be in both English and Japanese? (See note below). The trees for the source (English) are generated by running the ENJU parser on the English data, resulting in binary trees, and only the bracketing information is used (no phrase category information).

Given that setup, the method is an extension of seq2seq translation models where they augment it with a Tree-LSTM to do the encoding of the source language. They deviate from a standard Tree-LSTM by running an LSTM across tokens first, and using the LSTM hidden states as the leaves of the tree instead of the token embeddings themselves. Once they have the encoding from the tree, it is concatenated with the standard encoding from an LSTM. At decoding time, the attention for output token $y_j$ is computed across all source tree nodes $i$, which includes $n$ input token nodes and $n-1$ phrasal nodes, as the similarity between the hidden state $s_j$ and the encoding at node $i$, then passed through softmax. Another deviation from standard practice (I believe) is that the hidden state calculations $s_j$ in the decoder are a function of the previous output token $y_{j-1}$, the previous time step's hidden state $s_{j-1}$ and the previous time step's attention-modulated hidden state $\tilde{s}_{j-1}$.
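
As a rough sketch of the attention step described above: the decoder state $s_j$ is compared with the encodings of all $2n-1$ tree nodes ($n$ leaves plus $n-1$ phrase nodes) and the scores are normalised with a softmax. The dot product is assumed as the similarity function here, and all names are hypothetical.

```python
import math

def attention_weights(s_j, node_encodings):
    """Softmax over dot-product similarities between s_j and every tree node."""
    scores = [sum(a * b for a, b in zip(s_j, h)) for h in node_encodings]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]

def context_vector(weights, node_encodings):
    """Attention-weighted sum of the node encodings."""
    dim = len(node_encodings[0])
    return [sum(w * h[k] for w, h in zip(weights, node_encodings))
            for k in range(dim)]

# Toy sentence with n = 2 tokens: 2 leaf nodes plus 1 phrase node.
leaves = [[1.0, 0.0], [0.0, 1.0]]
phrases = [[0.5, 0.5]]
nodes = leaves + phrases
weights = attention_weights([2.0, 0.0], nodes)
context = context_vector(weights, nodes)
```

Here the first leaf is most similar to the decoder state, so it receives the largest weight, followed by the phrase node and then the second leaf.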

The authors introduce an additional trick for improving decoding performance when translating long sentences, since they say standard length normalization did not work. Their method is to compute a probability distribution over output length given input length, and use this to create an additional penalty term in their scoring function, as the log of the probability of the current output length given input length.
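
One way to picture this penalty, as a sketch under assumptions rather than the authors' C++ code: estimate $p(\text{len}(y) \mid \text{len}(x))$ by counting over training sentence pairs, then add its log to the translation log-probability. The function names and the `floor` probability for unseen length pairs are hypothetical choices.

```python
import math
from collections import Counter, defaultdict

def fit_length_model(length_pairs):
    """Estimate p(target_len | source_len) by counting (source_len, target_len) pairs."""
    counts = defaultdict(Counter)
    for src_len, tgt_len in length_pairs:
        counts[src_len][tgt_len] += 1
    return {
        src_len: {t: c / sum(ctr.values()) for t, c in ctr.items()}
        for src_len, ctr in counts.items()
    }

def length_penalty(model, src_len, tgt_len, floor=1e-6):
    """Log-probability of the output length; unseen pairs get a small floor (assumption)."""
    return math.log(model.get(src_len, {}).get(tgt_len, floor))

def hypothesis_score(log_prob, model, src_len, tgt_len):
    """Translation log-probability plus the length penalty term."""
    return log_prob + length_penalty(model, src_len, tgt_len)

model = fit_length_model([(3, 4), (3, 4), (3, 5), (2, 2)])
score = hypothesis_score(-1.2, model, src_len=3, tgt_len=4)
```

In this toy model two of the three training sentences of length 3 have a translation of length 4, so a length-4 hypothesis is penalised by $\log(2/3)$, while an implausibly short or long hypothesis receives the much larger floor penalty.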

They evaluate using RIBES (not familiar) and BLEU scores, and show better performance than other NMT and SMT methods, and similar to the best performing (non-neural) tree to sequence model.

Implementation: They seem to have a custom implementation in C++ rather than using a DNN library. Their implementation takes one day to run one epoch of training on the full training set. They do not say how many epochs they train for.

Note on data: We have looked at this data a bit for a project I'm working on, and the English sentences look like translations from Japanese. A large proportion of the sentences are written in passive form with the structure "X was Yed", e.g. "the data was processed, the cells were cultured." This looks to me like they translated subject-dropped Japanese sentences which would have the same word order, but are not actually passive! So that raises for me the question of how representative the source side inputs are of natural English.

|

Adversarial Diversity and Hard Positive Generation

Andras Rozsa and Ethan M. Rudd and Terrance E. Boult

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/05/05 (8 years ago)

Abstract: State-of-the-art deep neural networks suffer from a fundamental problem - they misclassify adversarial examples formed by applying small perturbations to inputs. In this paper, we present a new psychometric perceptual adversarial similarity score (PASS) measure for quantifying adversarial images, introduce the notion of hard positive generation, and use a diverse set of adversarial perturbations - not just the closest ones - for data augmentation. We introduce a novel hot/cold approach for adversarial example generation, which provides multiple possible adversarial perturbations for every single image. The perturbations generated by our novel approach often correspond to semantically meaningful image structures, and allow greater flexibility to scale perturbation-amplitudes, which yields an increased diversity of adversarial images. We present adversarial images on several network topologies and datasets, including LeNet on the MNIST dataset, and GoogLeNet and ResidualNet on the ImageNet dataset. Finally, we demonstrate on LeNet and GoogLeNet that fine-tuning with a diverse set of hard positives improves the robustness of these networks compared to training with prior methods of generating adversarial images.

|

Rozsa et al. propose PASS, a perceptual similarity metric that is invariant to homographies, to quantify adversarial perturbations. In particular, PASS is based on the structural similarity metric SSIM [1]; specifically

$PASS(\tilde{x}, x) = SSIM(\psi(\tilde{x},x), x)$

where $\psi(\tilde{x}, x)$ transforms the perturbed image $\tilde{x}$ to the image $x$ by applying a homography $H$ (which can be found through optimization). Based on this similarity metric, they consider additional attacks which create small perturbations in terms of the PASS score, but result in larger $L_p$ norms; see the paper for experimental results.
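
The structure of the score can be sketched as below. This is a heavily simplified illustration: SSIM is computed over a single global window on flattened grayscale images (the original SSIM averages over local windows), and the alignment $\psi$ is passed in as a callable that defaults to the identity, because fitting the homography is outside the scope of this sketch. The constants follow the usual SSIM choice $C_1 = (0.01 L)^2$, $C_2 = (0.03 L)^2$ for dynamic range $L$; the function names are hypothetical.

```python
def global_ssim(x, y, dynamic_range=1.0):
    """Single-window SSIM between two equally sized, flattened grayscale images."""
    n = len(x)
    mu_x, mu_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )

def pass_score(x_tilde, x, align=lambda x_tilde, x: x_tilde):
    """PASS(x_tilde, x) = SSIM(psi(x_tilde, x), x); `align` stands in for psi."""
    return global_ssim(align(x_tilde, x), x)

clean = [0.1, 0.4, 0.8, 0.3]
perturbed = [0.15, 0.35, 0.9, 0.2]
score_same = pass_score(clean, clean)
score_perturbed = pass_score(perturbed, clean)
```

An unperturbed image scores 1 against itself, and any perturbation that the alignment cannot explain lowers the score.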

[1] Z. Wang, A. C. Bovik, H. R. Sheikh, E. P. Simoncelli. Image quality assessment: from error visibility to structural similarity. TIP, 2004.

Also see this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

|

Measuring Neural Net Robustness with Constraints

Osbert Bastani and Yani Ioannou and Leonidas Lampropoulos and Dimitrios Vytiniotis and Aditya Nori and Antonio Criminisi

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, cs.CV, cs.NE

First published: 2016/05/24 (8 years ago)

Abstract: Despite having high accuracy, neural nets have been shown to be susceptible to adversarial examples, where a small perturbation to an input can cause it to become mislabeled. We propose metrics for measuring the robustness of a neural net and devise a novel algorithm for approximating these metrics based on an encoding of robustness as a linear program. We show how our metrics can be used to evaluate the robustness of deep neural nets with experiments on the MNIST and CIFAR-10 datasets. Our algorithm generates more informative estimates of robustness metrics compared to estimates based on existing algorithms. Furthermore, we show how existing approaches to improving robustness "overfit" to adversarial examples generated using a specific algorithm. Finally, we show that our techniques can be used to additionally improve neural net robustness both according to the metrics that we propose, but also according to previously proposed metrics.

|

Bastani et al. propose formal robustness measures and an algorithm for approximating them for piece-wise linear networks. Specifically, the notion of robustness is similar to related work:

$\rho(f,x) = \inf\{\epsilon \geq 0 \mid f \text{ is not } (x,\epsilon)\text{-robust}\}$

where $(x,\epsilon)$-robustness demands that for every $x'$ with $\|x'-x\|_\infty \leq \epsilon$ it holds that $f(x') = f(x)$. In other words, the label does not change for perturbations $\eta = x'-x$ which are small in terms of the $L_\infty$ norm and the constant $\epsilon$. Clearly, a higher $\epsilon$ implies a stronger notion of robustness. Additionally, the above definition is essentially a pointwise definition of robustness.

In order to measure robustness for the whole network (i.e. not only pointwise), the authors introduce the adversarial frequency:

$\psi(f,\epsilon) = p_{x\sim D}(\rho(f,x) \leq \epsilon)$.

This quantity measures how often $f$ fails to be robust in the sense of $(x,\epsilon)$-robustness. The network is more robust when it has low adversarial frequency. Additionally, they introduce adversarial severity:

$\mu(f,\epsilon) = \mathbb{E}_{x\sim D}[\rho(f,x) | \rho(f,x) \leq \epsilon]$

which measures how severely $f$ fails to be robust (if it fails to be robust for a sample $x$).

Both of the above measures can be approximated by counting, provided that the robustness $\rho(f, x)$ is known for all samples $x$ in a separate test set. And this is the problem with the proposed measures: in order to approximate $\rho(f, x)$, the authors propose an optimization-based approach that assumes the neural network is piece-wise linear. This assumption is not necessarily unrealistic: dot products, convolutions, $\text{ReLU}$ activations and max pooling are all piece-wise linear. Even batch normalization is piece-wise linear at test time. The problem, however, is that the network needs to be encoded in terms of linear programs, which I believe is cumbersome for real-world networks.
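
The counting approximation is simple once the pointwise values are available. A minimal sketch, assuming the values $\rho(f, x)$ for the test samples have already been computed and are passed in as a plain list (obtaining them is the hard part and is not shown; the function names are hypothetical):

```python
def adversarial_frequency(rhos, eps):
    """Fraction of test samples with rho(f, x) <= eps."""
    return sum(1 for r in rhos if r <= eps) / len(rhos)

def adversarial_severity(rhos, eps):
    """Mean of rho(f, x) over the samples where rho(f, x) <= eps."""
    failing = [r for r in rhos if r <= eps]
    if not failing:
        return float("nan")  # undefined when f is robust on every sample
    return sum(failing) / len(failing)

rhos = [0.05, 0.2, 0.1, 0.5]  # made-up pointwise robustness values
frequency = adversarial_frequency(rhos, eps=0.1)
severity = adversarial_severity(rhos, eps=0.1)
```

In this toy example half of the samples have $\rho(f, x) \leq 0.1$, so the adversarial frequency is 0.5, and the severity is the mean of the two failing values, 0.05 and 0.1.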

Also view this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

|

Robustness of classifiers: from adversarial to random noise

Alhussein Fawzi and Seyed-Mohsen Moosavi-Dezfooli and Pascal Frossard

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, cs.CV, stat.ML

First published: 2016/08/31 (7 years ago)

Abstract: Several recent works have shown that state-of-the-art classifiers are vulnerable to worst-case (i.e., adversarial) perturbations of the datapoints. On the other hand, it has been empirically observed that these same classifiers are relatively robust to random noise. In this paper, we propose to study a \textit{semi-random} noise regime that generalizes both the random and worst-case noise regimes. We propose the first quantitative analysis of the robustness of nonlinear classifiers in this general noise regime. We establish precise theoretical bounds on the robustness of classifiers in this general regime, which depend on the curvature of the classifier's decision boundary. Our bounds confirm and quantify the empirical observations that classifiers satisfying curvature constraints are robust to random noise. Moreover, we quantify the robustness of classifiers in terms of the subspace dimension in the semi-random noise regime, and show that our bounds remarkably interpolate between the worst-case and random noise regimes. We perform experiments and show that the derived bounds provide very accurate estimates when applied to various state-of-the-art deep neural networks and datasets. This result suggests bounds on the curvature of the classifiers' decision boundaries that we support experimentally, and more generally offers important insights onto the geometry of high dimensional classification problems.

|

Fawzi et al. study robustness in the transition from random samples to semi-random and adversarial samples. Specifically, they present bounds relating the norm of an adversarial perturbation to the norm of random perturbations; for the exact form I refer to the paper. Personally, I find the definition of semi-random noise most interesting, as it gives an intuition for distinguishing random noise from adversarial examples. As in related literature, adversarial examples are defined as

$r_S(x_0) = \arg\min_{r \in S} \|r\|_2$ s.t. $f(x_0 + r) \neq f(x_0)$

where $f$ is the classifier to attack and $S$ the set of allowed perturbations (e.g. requiring that the perturbed samples are still images). If $S$ is mostly unconstrained regarding the direction of $r$ in high-dimensional space, Fawzi et al. consider $r$ to be an adversarial example: intuitively, an adversary can choose $r$ arbitrarily to fool the classifier. If, however, the directions considered in $S$ are constrained to an $m$-dimensional subspace, Fawzi et al. consider $r$ to be semi-random noise. In the extreme case, if $m = 1$, $r$ is random noise. In this case, we can intuitively think of $S$ as a randomly chosen one-dimensional subspace, i.e. a random direction in multi-dimensional space.
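
For intuition only, the effect of restricting $S$ can be worked out in closed form for a linear binary classifier $f(x) = \text{sign}(w^T x + b)$, which is a simplifying assumption and not the nonlinear setting the paper analyses. The smallest perturbation inside a subspace that reaches the decision boundary has norm $|w^T x_0 + b| / \|P_S w\|_2$, where $P_S w$ is the projection of $w$ onto the subspace. The function name and the orthonormal-basis argument are hypothetical.

```python
import math

def min_perturbation_norm(w, b, x0, basis=None):
    """Norm of the smallest r in span(basis) that moves x0 onto the boundary
    of the linear classifier sign(w.x + b).

    `basis` is a list of orthonormal vectors spanning S; None means that
    S is the whole space (the unconstrained, adversarial case).
    """
    margin = abs(sum(wi * xi for wi, xi in zip(w, x0)) + b)
    if basis is None:
        proj_norm = math.sqrt(sum(wi * wi for wi in w))
    else:
        # Norm of the projection of w onto the subspace spanned by `basis`.
        proj_norm = math.sqrt(
            sum(sum(wi * vi for wi, vi in zip(w, v)) ** 2 for v in basis)
        )
    return margin / proj_norm if proj_norm > 0 else math.inf

w, b, x0 = [3.0, 4.0], 0.0, [1.0, 1.0]
adversarial = min_perturbation_norm(w, b, x0)                    # S is the whole space
one_direction = min_perturbation_norm(w, b, x0, [[1.0, 0.0]])    # m = 1
```

The fewer directions the subspace shares with $w$, the larger the perturbation has to be, which matches the intuition that a single random direction is far less effective than an adversarially chosen one.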

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

|

A Boundary Tilting Persepective on the Phenomenon of Adversarial Examples

Thomas Tanay and Lewis Griffin

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2016/08/27 (7 years ago)

Abstract: Deep neural networks have been shown to suffer from a surprising weakness: their classification outputs can be changed by small, non-random perturbations of their inputs. This adversarial example phenomenon has been explained as originating from deep networks being "too linear" (Goodfellow et al., 2014). We show here that the linear explanation of adversarial examples presents a number of limitations: the formal argument is not convincing, linear classifiers do not always suffer from the phenomenon, and when they do their adversarial examples are different from the ones affecting deep networks. We propose a new perspective on the phenomenon. We argue that adversarial examples exist when the classification boundary lies close to the submanifold of sampled data, and present a mathematical analysis of this new perspective in the linear case. We define the notion of adversarial strength and show that it can be reduced to the deviation angle between the classifier considered and the nearest centroid classifier. Then, we show that the adversarial strength can be made arbitrarily high independently of the classification performance due to a mechanism that we call boundary tilting. This result leads us to defining a new taxonomy of adversarial examples. Finally, we show that the adversarial strength observed in practice is directly dependent on the level of regularisation used and the strongest adversarial examples, symptomatic of overfitting, can be avoided by using a proper level of regularisation.

|


Tanay and Griffin introduce the boundary tilting perspective as an alternative to the “linear explanation” for adversarial examples. Specifically, they argue that it is not reasonable to assume that the linearity of deep neural networks causes the existence of adversarial examples. Originally, Goodfellow et al. [1] explained the impact of adversarial examples by considering a linear classifier: $w^T x' = w^T x + w^T\eta$ where $\eta$ is the adversarial perturbation. In high dimensions, the second term might result in a significant shift of the neuron's activation. Tanay and Griffin, in contrast, argue that the dimensionality does not have an impact: although the impact of $w^T\eta$ grows with the dimensionality, so does $w^T x$, such that the ratio should be preserved. Additionally, they show (by giving a counter-example) that linearity is not sufficient for the existence of adversarial examples.

Instead, they offer a different perspective, which is formalized in the course of the paper. Their main idea is that the training samples live on a manifold in the actual input space. The claim is that there are no adversarial examples on the manifold (meaning that the classes are well separated on the manifold and it is hard to find adversarial examples for most training samples). However, the decision boundary extends beyond the manifold and might lie close to it, such that adversarial examples leaving the manifold can be found easily. This idea is illustrated in Figure 1.

https://i.imgur.com/SrviKgm.png

Figure 1: Illustration of the underlying idea of the boundary tilting perspective, see the text for details.

[1] Ian J. Goodfellow, Jonathon Shlens, Christian Szegedy: Explaining and Harnessing Adversarial Examples. CoRR abs/1412.6572 (2014)

Also find this summary at [davidstutz.de](https://davidstutz.de/category/reading/).  |
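As a rough illustration of the linear argument from the Tanay and Griffin summary above, the following sketch evaluates both terms of $w^T x' = w^T x + w^T\eta$ for a sign perturbation $\eta = \epsilon \cdot \mathrm{sign}(w)$. The function name `linear_shift`, the random Gaussian weights and inputs, and the value of `eps` are assumptions made for this example only and are not taken from either paper. For this choice of $\eta$, the shift $w^T\eta$ equals $\epsilon \|w\|_1$.

```python
import numpy as np

def linear_shift(w, x, eps):
    """Return (w^T x, w^T eta) for the sign perturbation eta = eps * sign(w)."""
    eta = eps * np.sign(w)      # max-norm bounded perturbation aligned with w
    clean = float(w @ x)        # activation on the clean input
    shift = float(w @ eta)      # change in activation caused by eta
    return clean, shift

# Hypothetical toy data: random weights and inputs in growing dimensions.
rng = np.random.default_rng(0)
for n in (10, 100, 1000):
    w = rng.normal(size=n)
    x = rng.normal(size=n)
    clean, shift = linear_shift(w, x, eps=0.1)
    # For this eta, the shift is exactly eps times the L1 norm of w.
    assert np.isclose(shift, 0.1 * np.abs(w).sum())
    print(n, clean, shift)
```

How the two terms compare on real data depends on how $w$ and $x$ are related, which is the point on which the two explanations disagree; the random toy data here does not settle that question.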

Ensemble Robustness of Deep Learning Algorithms

Jiashi Feng and Tom Zahavy and Bingyi Kang and Huan Xu and Shie Mannor

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, cs.CV, stat.ML

First published: 2016/02/07 (8 years ago)

Abstract: The question why deep learning algorithms perform so well in practice has attracted increasing research interest. However, most of well-established approaches, such as hypothesis capacity, robustness or sparseness, have not provided complete explanations, due to the high complexity of the deep learning algorithms and their inherent randomness. In this work, we introduce a new approach, ensemble robustness, towards characterizing the generalization performance of generic deep learning algorithms. Ensemble robustness concerns robustness of the population of the hypotheses that may be output by a learning algorithm. Through the lens of ensemble robustness, we reveal that a stochastic learning algorithm can generalize well as long as its sensitiveness to adversarial perturbation is bounded in average, or equivalently, the performance variance of the algorithm is small. Quantifying ensemble robustness of various deep learning algorithms may be difficult analytically. However, extensive simulations for seven common deep learning algorithms for different network architectures provide supporting evidence for our claims. Furthermore, our work explains the good performance of several published deep learning algorithms.


|


Zahavy et al. introduce the concept of ensemble robustness and show that it can be used as an indicator for generalization performance. In particular, the main idea is to lift the concept of robustness against adversarial examples to ensembles of networks, as trained, e.g., through Dropout or Bayes-by-Backprop. Letting $Z$ denote the sample set, a learning algorithm is $(K, \epsilon)$ robust if $Z$ can be divided into $K$ disjoint sets $C_1,\ldots,C_K$ such that for every training set $s_1,\ldots,s_n \in Z$ it holds:

$\forall i, \forall z \in Z, \forall k = 1,\ldots,K$: if $s_i, z \in C_k$, then $|l(f,s_i) - l(f,z)| \leq \epsilon(s_1,\ldots,s_n)$

where $f$ is the model produced by the learning algorithm, $l$ measures the loss, and $\epsilon: Z^n \to \mathbb{R}$. For ensembles (explicit or implicit) this definition is extended by taking the expectation, over the models produced by a randomized learning algorithm, of the maximum loss deviation within a set:

$\forall i, \forall k = 1,\ldots,K$: if $s_i \in C_k$, then $\mathbb{E}_f \max_{z \in C_k} |l(f,s_i) - l(f,z)| \leq \epsilon(s_1,\ldots,s_n)$

Here, the randomized learning algorithm computes a distribution over models given a training set.
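The left-hand side of the ensemble definition above can be estimated by sampling models and averaging the worst-case loss gap within a set $C_k$. The following is a minimal sketch under stated assumptions: the helper `ensemble_robustness`, the scalar "models", the squared loss and the toy set `cell` are all hypothetical and chosen only to make the quantity concrete; they are not the authors' experimental setup.

```python
def ensemble_robustness(models, loss, s_i, cell):
    """Monte Carlo estimate of E_f max_{z in C_k} |l(f, s_i) - l(f, z)|.

    models: models sampled from the randomized learning algorithm
    loss:   function (model, sample) -> float
    s_i:    a training sample assumed to lie in the set C_k
    cell:   finite collection of samples standing in for C_k
    """
    gaps = []
    for f in models:
        base = loss(f, s_i)
        # Worst-case loss deviation inside the set for this particular model.
        gaps.append(max(abs(base - loss(f, z)) for z in cell))
    # Average over the sampled models approximates the expectation over f.
    return sum(gaps) / len(gaps)

# Hypothetical toy setup: a model is a scalar weight w predicting w * x.
def squared_loss(w, z):
    x, y = z
    return (w * x - y) ** 2

models = [0.9, 1.0, 1.1]                       # stand-in for sampled networks
s_i = (1.0, 1.0)                               # training sample (x, y)
cell = [(1.0, 1.0), (1.2, 1.0), (0.8, 1.0)]    # samples sharing the set C_k
eps_hat = ensemble_robustness(models, squared_loss, s_i, cell)
print(eps_hat)
```

With a single model in `models`, the estimate reduces to the deterministic robustness gap from the first definition.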

Also view this summary at [davidstutz.de](https://davidstutz.de/category/reading/).

|

Adversarial Machine Learning at Scale

Alexey Kurakin and Ian Goodfellow and Samy Bengio

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV, cs.CR, cs.LG, stat.ML

First published: 2016/11/04 (7 years ago)

Abstract: Adversarial examples are malicious inputs designed to fool machine learning models. They often transfer from one model to another, allowing attackers to mount black box attacks without knowledge of the target model's parameters. Adversarial training is the process of explicitly training a model on adversarial examples, in order to make it more robust to attack or to reduce its test error on clean inputs. So far, adversarial training has primarily been applied to small problems. In this research, we apply adversarial training to ImageNet. Our contributions include: (1) recommendations for how to successfully scale adversarial training to large models and datasets, (2) the observation that adversarial training confers robustness to single-step attack methods, (3) the finding that multi-step attack methods are somewhat less transferable than single-step attack methods, so single-step attacks are the best for mounting black-box attacks, and (4) resolution of a "label leaking" effect that causes adversarially trained models to perform better on adversarial examples than on clean examples, because the adversarial example construction process uses the true label and the model can learn to exploit regularities in the construction process.


|


Kurakin et al. present larger-scale experiments using adversarial training on ImageNet to increase robustness. In particular, they claim to be the first to use adversarial training on ImageNet. Furthermore, they provide experiments supporting the following conclusions:

- Adversarial training can also be seen as a regularizer. This, however, is not surprising, as training on noisy training samples is also known to act as regularization.
- Label leaking describes the observation that an adversarially trained model is able to defend against (i.e. correctly classify) an adversarial example which has been computed using the true label, while not defending against adversarial examples that were crafted without knowing the true label. This means that crafting adversarial examples without guidance by the true label might be beneficial (in terms of a stronger attack).
- Model complexity seems to have an impact on robustness after adversarial training. However, from the experiments, it is hard to deduce what this connection looks like exactly.

Also see this summary at [davidstutz.de](https://davidstutz.de/category/reading/).  |
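To make the label-leaking point from the Kurakin et al. summary above more concrete, the sketch below contrasts a single fast-gradient-sign step computed with the true label against one computed with the model's own prediction. It assumes a binary logistic-regression model with cross-entropy loss, which is far simpler than the ImageNet networks in the paper; the names `fgsm` and `predict`, the weights and the input are hypothetical, and using the predicted label is just one possible way to avoid the true label.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def predict(w, b, x):
    """Hard label predicted by the (assumed) logistic-regression model."""
    return 1.0 if sigmoid(w @ x + b) >= 0.5 else 0.0

def fgsm(w, b, x, y, eps):
    """One fast-gradient-sign step that increases the loss for label y."""
    p = sigmoid(w @ x + b)
    grad_x = (p - y) * w          # gradient of cross-entropy w.r.t. x
    return x + eps * np.sign(grad_x)

# Hypothetical toy model and a sample it misclassifies.
w = np.array([1.0, -2.0])
b = 0.0
x = np.array([0.5, 0.1])
y_true = 0.0

# Variant 1: the attack uses the true label (this can leak label information).
x_adv_true = fgsm(w, b, x, y_true, eps=0.1)
# Variant 2: the attack uses the model's prediction instead of the true label.
x_adv_pred = fgsm(w, b, x, predict(w, b, x), eps=0.1)
print(x_adv_true, x_adv_pred)
```

The two variants only differ when the model's prediction disagrees with the true label, as in this toy sample; on correctly classified inputs they produce the same perturbation.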

Delving into Transferable Adversarial Examples and Black-box Attacks

Yanpei Liu and Xinyun Chen and Chang Liu and Dawn Song

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG

First published: 2016/11/08 (7 years ago)

Abstract: An intriguing property of deep neural networks is the existence of adversarial examples, which can transfer among different architectures. These transferable adversarial examples may severely hinder deep neural network-based applications. Previous works mostly study the transferability using small scale datasets. In this work, we are the first to conduct an extensive study of the transferability over large models and a large scale dataset, and we are also the first to study the transferability of targeted adversarial examples with their target labels. We study both non-targeted and targeted adversarial examples, and show that while transferable non-targeted adversarial examples are easy to find, targeted adversarial examples generated using existing approaches almost never transfer with their target labels. Therefore, we propose novel ensemble-based approaches to generating transferable adversarial examples. Using such approaches, we observe a large proportion of targeted adversarial examples that are able to transfer with their target labels for the first time. We also present some geometric studies to help understanding the transferable adversarial examples. Finally, we show that the adversarial examples generated using ensemble-based approaches can successfully attack Clarifai.com, which is a black-box image classification system.
