BEGAN: Boundary Equilibrium Generative Adversarial Networks

David Berthelot and Thomas Schumm and Luke Metz

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2017/03/31 (8 years ago)

Abstract: We propose a new equilibrium enforcing method paired with a loss derived from the Wasserstein distance for training auto-encoder based Generative Adversarial Networks. This method balances the generator and discriminator during training. Additionally, it provides a new approximate convergence measure, fast and stable training and high visual quality. We also derive a way of controlling the trade-off between image diversity and visual quality. We focus on the image generation task, setting a new milestone in visual quality, even at higher resolutions. This is achieved while using a relatively simple model architecture and a standard training procedure.

more

less

David Berthelot and Thomas Schumm and Luke Metz

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2017/03/31 (8 years ago)

Abstract: We propose a new equilibrium enforcing method paired with a loss derived from the Wasserstein distance for training auto-encoder based Generative Adversarial Networks. This method balances the generator and discriminator during training. Additionally, it provides a new approximate convergence measure, fast and stable training and high visual quality. We also derive a way of controlling the trade-off between image diversity and visual quality. We focus on the image generation task, setting a new milestone in visual quality, even at higher resolutions. This is achieved while using a relatively simple model architecture and a standard training procedure.

|

[link]

* [Detailed Summary](https://blog.heuritech.com/2017/04/11/began-state-of-the-art-generation-of-faces-with-generative-adversarial-networks/)

* [Tensorflow implementation](https://github.com/carpedm20/BEGAN-tensorflow)

### Summary

* They suggest a GAN algorithm that is based on an autoencoder with Wasserstein distance.

* Their method generates highly realistic human faces.

* Their method has a convergence measure, which reflects the quality of the generates images.

* Their method has a diversity hyperparameter, which can be used to set the tradeoff between image diversity and image quality.

### How

* Like other GANs, their method uses a generator G and a discriminator D.

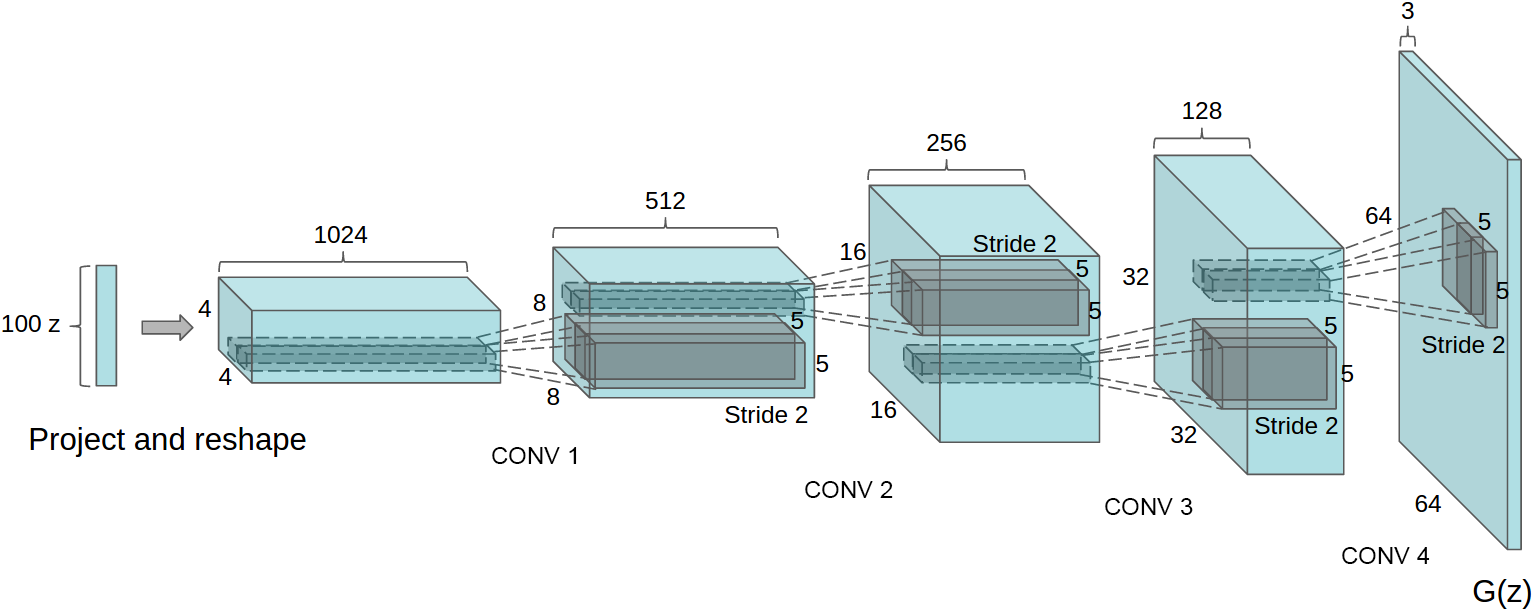

* Generator

* The generator is fairly standard.

* It gets a noise vector `z` as input and uses upsampling+convolutions to generate images.

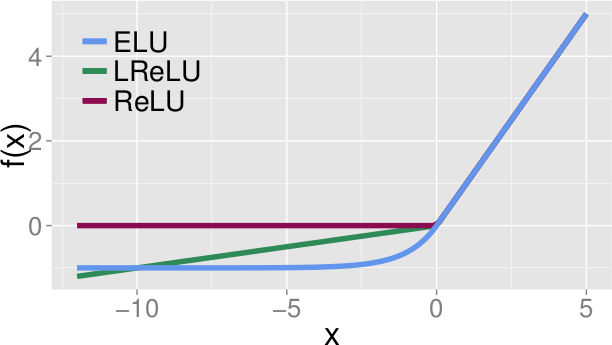

* It uses ELUs and no BN.

* Discriminator

* The discriminator is a full autoencoder (i.e. it converts input images to `8x8x3` tensors, then reconstructs them back to images).

* It has skip-connections from the `8x8x3` layer to each upsampling layer.

* It also uses ELUs and no BN.

* Their method now has the following steps:

1. Collect real images `x_real`.

2. Generate fake images `x_fake = G(z)`.

3. Reconstruct the real images `r_real = D(x_real)`.

4. Reconstruct the fake images `r_fake = D(x_fake)`.

5. Using an Lp-Norm (e.g. L1-Norm), compute the reconstruction loss of real images `d_real = Lp(x_real, r_real)`.

6. Using an Lp-Norm (e.g. L1-Norm), compute the reconstruction loss of fake images `d_fake = Lp(x_fake, r_fake)`.

7. The loss of D is now `L_D = d_real - d_fake`.

8. The loss of G is now `L_G = -L_D`.

* About the loss

* `r_real` and `r_fake` are really losses (e.g. L1-loss or L2-loss). In the paper they use `L(...)` for that. Here they are referenced as `d_*` in order to avoid confusion.

* The loss `L_D` is based on the Wasserstein distance, as in WGAN.

* `L_D` assumes, that the losses `d_real` and `d_fake` are normally distributed and tries to move their mean values. Ideally, the discriminator produces very different means for real/fake images, while the generator leads to very similar means.

* Their formulation of the Wasserstein distance does not require K-Lipschitz functions, which is why they don't have the weight clipping from WGAN.

* Equilibrium

* The generator and discriminator are at equilibrium, if `E[r_fake] = E[r_real]`. (That's undesirable, because it means that D can't differentiate between fake and real images, i.e. G doesn't get a proper gradient any more.)

* Let `g = E[r_fake] / E[r_real]`, then:

* Low `g` means that `E[r_fake]` is low and/or `E[r_real]` is high, which means that real images are not as well reconstructed as fake images. This means, that the discriminator will be more heavily trained towards reconstructing real images correctly (as that is the main source of error).

* High `g` conversely means that real images are well reconstructed (compared to fake ones) and that the discriminator will be trained more towards fake ones.

* `g` gives information about how much G and D should be trained each (so that none of the two overwhelms the other).

* They introduce a hyperparameter `gamma` (from interval `[0,1]`), which reflects the target value of the balance `g`.

* Using `gamma`, they change their losses `L_D` and `L_G` slightly:

* `L_D = d_real - k_t d_fake`

* `L_G = r_fake`

* `k_t+1 = k_t + lambda_k (gamma d_real - d_fake)`.

* `k_t` is a control term that controls how much D is supposed to focus on the fake images. It changes with every batch.

* `k_t` is clipped to `[0,1]` and initialized at `0` (max focus on reconstructing real images).

* `lambda_k` is like the learning rate of the control term, set to `0.001`.

* Note that `gamma d_real - d_fake = 0 <=> gamma d_real = d_fake <=> gamma = d_fake / d_real`.

* Convergence measure

* They measure the convergence of their model using `M`:

* `M = d_real + |gamma d_real - d_fake|`

* `M` goes down, if `d_real` goes down (D becomes better at autoencoding real images).

* `M` goes down, if the difference in reconstruction error between real and fake images goes down, i.e. if G becomes better at generating fake images.

* Other

* They use Adam with learning rate 0.0001. They decrease it by a factor of 2 whenever M stalls.

* Higher initial learning rate could lead to model collapse or visual artifacs.

* They generate images of max size 128x128.

* They don't use more than 128 filters per conv layer.

### Results

* NOTES:

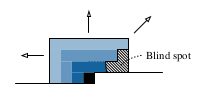

* Below example images are NOT from generators trained on CelebA. They used a custom dataset of celebrity images. They don't show any example images from the dataset. The generated images look like there is less background around the faces, making the task easier.

* Few example images. Unclear how much cherry picking was involved. Though the results from the tensorflow example (see like at top) make it look like the examples are representative (aside from speckle-artifacts).

* No LSUN Bedrooms examples. Human faces are comparatively easy to generate.

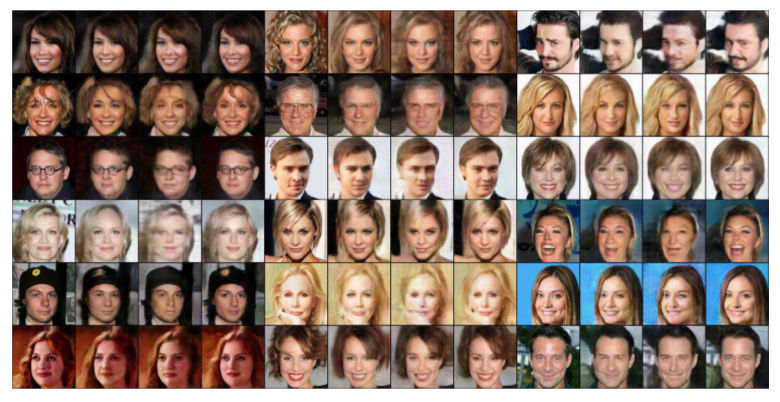

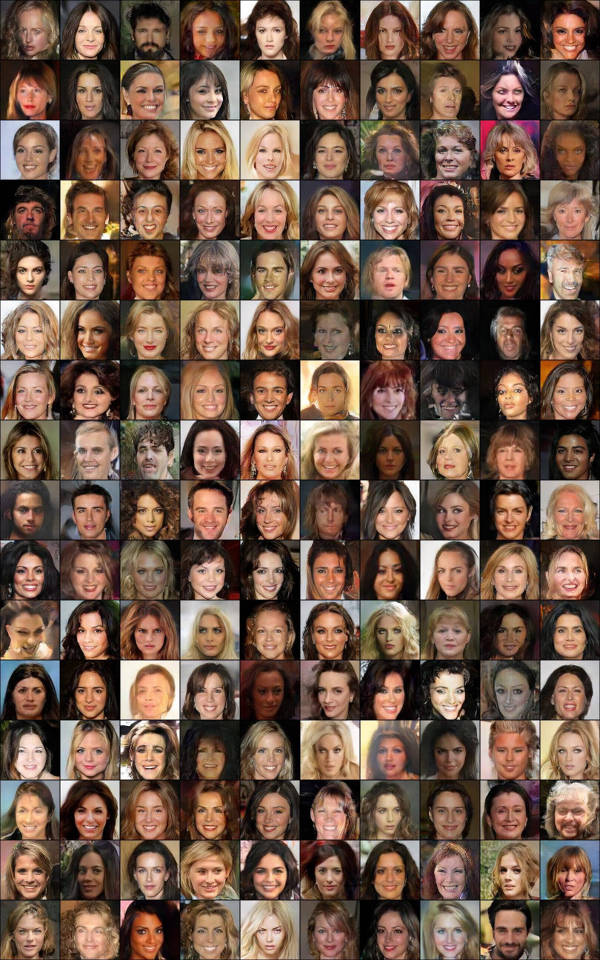

* Example images at 128x128:

*

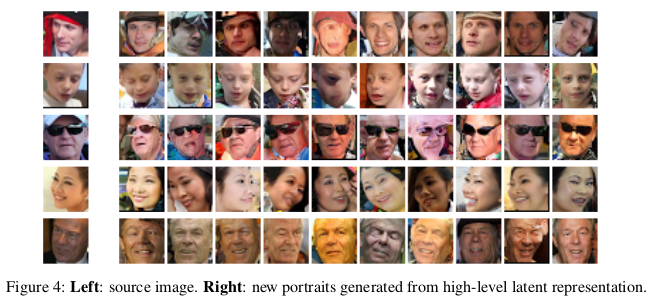

* Effect of changing the target balance `gamma`:

*

* High gamma leads to more diversity at lower quality.

* Interpolations:

*

* Convergence measure `M` and associated image quality during the training:

*

|

StackGAN: Text to Photo-realistic Image Synthesis with Stacked Generative Adversarial Networks

Zhang, Han and Xu, Tao and Li, Hongsheng and Zhang, Shaoting and Huang, Xiaolei and Wang, Xiaogang and Metaxas, Dimitris N.

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

Zhang, Han and Xu, Tao and Li, Hongsheng and Zhang, Shaoting and Huang, Xiaolei and Wang, Xiaogang and Metaxas, Dimitris N.

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp

|

[link]

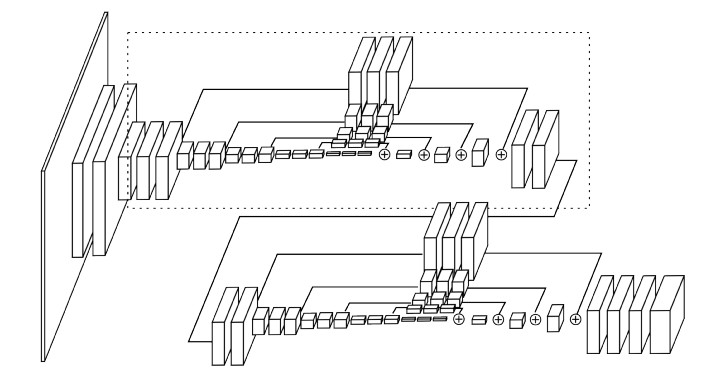

* They propose a two-stage GAN architecture that generates 256x256 images of (relatively) high quality.

* The model gets text as an additional input and the images match the text.

### How

* Most of the architecture is the same as in any GAN:

* Generator G generates images.

* Discriminator D discriminates betweens fake and real images.

* G gets a noise variable `z`, so that it doesn't always do the same thing.

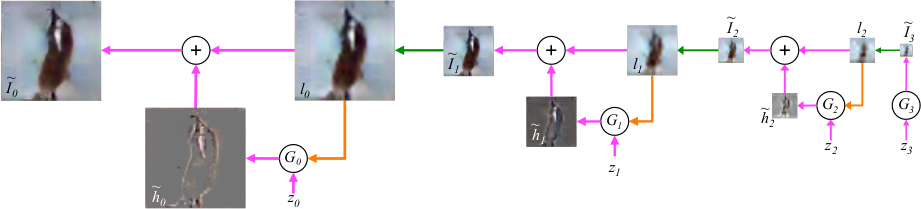

* Two-staged image generation:

* Instead of one step, as in most GANs, they use two steps, each consisting of a G and D.

* The first generator creates 64x64 images via upsampling.

* The first discriminator judges these images via downsampling convolutions.

* The second generator takes the image from the first generator, downsamples it via convolutions, then applies some residual convolutions and then re-upsamples it to 256x256.

* The second discriminator is comparable to the first one (downsampling convolutions).

* Note that the second generator does not get an additional noise term `z`, only the first one gets it.

* For upsampling, they use 3x3 convolutions with ReLUs, BN and nearest neighbour upsampling.

* For downsampling, they use 4x4 convolutions with stride 2, Leaky ReLUs and BN (the first convolution doesn't seem to use BN).

* Text embedding:

* The generated images are supposed to match input texts.

* These input texts are embedded to vectors.

* These vectors are added as:

1. An additional input to the first generator.

2. An additional input to the second generator (concatenated after the downsampling and before the residual convolutions).

3. An additional input to the first discriminator (concatenated after the downsampling).

4. An additional input to the second discriminator (concatenated after the downsampling).

* In case the text embeddings need to be matrices, the values are simply reshaped to `(N, 1, 1)` and then repeated to `(N, H, W)`.

* The texts are converted to embeddings via a network at the start of the model.

* Input to that vector: Unclear. (Concatenated word vectors? Seems to not be described in the text.)

* The input is transformed to a vector via a fully connected layer (the text model is apparently not recurrent).

* The vector is transformed via fully connected layers to a mean vector and a sigma vector.

* These are then interpreted as normal distributions, from which the final output vector is sampled. This uses the reparameterization trick, similar to the method in VAEs.

* Just like in VAEs, a KL-divergence term is added to the loss, which prevents each single normal distribution from deviating too far from the unit normal distribution `N(0,1)`.

* The authors argue, that using the VAE-like formulation -- instead of directly predicting an output vector (via FC layers) -- compensated for the lack of labels (smoother manifold).

* Note: This way of generating text embeddings seems very simple. (No recurrence, only about two layers.) It probably won't do much more than just roughly checking for the existence of specific words and word combinations (e.g. "red head").

* Visualization of the architecture:

*

### Results

* Note: No example images of the two-stage architecture for LSUN bedrooms.

* Using only the first stage of the architecture (first G and D) reduces the Inception score significantly.

* Adding the text to both the first and second generator improves the Inception score slightly.

* Adding the VAE-like text embedding generation (as opposed to only FC layers) improves the Inception score slightly.

* Generating images at higher resolution (256x256 instead of 128x128) improves the Inception score significantly

* Note: The 256x256 architecture has more residual convolutions than the 128x128 one.

* Note: The 128x128 and the 256x256 are both upscaled to 299x299 images before computing the Inception score. That should make the 128x128 images quite blurry and hence of low quality.

* Example images, with text and stage 1/2 results:

*

* More examples of birds:

*

* Examples of failures:

*

* The authors argue, that most failure cases happen when stage 1 messes up.

|

Self-Normalizing Neural Networks

Günter Klambauer and Thomas Unterthiner and Andreas Mayr and Sepp Hochreiter

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2017/06/08 (8 years ago)

Abstract: Deep Learning has revolutionized vision via convolutional neural networks (CNNs) and natural language processing via recurrent neural networks (RNNs). However, success stories of Deep Learning with standard feed-forward neural networks (FNNs) are rare. FNNs that perform well are typically shallow and, therefore cannot exploit many levels of abstract representations. We introduce self-normalizing neural networks (SNNs) to enable high-level abstract representations. While batch normalization requires explicit normalization, neuron activations of SNNs automatically converge towards zero mean and unit variance. The activation function of SNNs are "scaled exponential linear units" (SELUs), which induce self-normalizing properties. Using the Banach fixed-point theorem, we prove that activations close to zero mean and unit variance that are propagated through many network layers will converge towards zero mean and unit variance -- even under the presence of noise and perturbations. This convergence property of SNNs allows to (1) train deep networks with many layers, (2) employ strong regularization, and (3) to make learning highly robust. Furthermore, for activations not close to unit variance, we prove an upper and lower bound on the variance, thus, vanishing and exploding gradients are impossible. We compared SNNs on (a) 121 tasks from the UCI machine learning repository, on (b) drug discovery benchmarks, and on (c) astronomy tasks with standard FNNs and other machine learning methods such as random forests and support vector machines. SNNs significantly outperformed all competing FNN methods at 121 UCI tasks, outperformed all competing methods at the Tox21 dataset, and set a new record at an astronomy data set. The winning SNN architectures are often very deep. Implementations are available at: github.com/bioinf-jku/SNNs.

more

less

Günter Klambauer and Thomas Unterthiner and Andreas Mayr and Sepp Hochreiter

arXiv e-Print archive - 2017 via Local arXiv

Keywords: cs.LG, stat.ML

First published: 2017/06/08 (8 years ago)

Abstract: Deep Learning has revolutionized vision via convolutional neural networks (CNNs) and natural language processing via recurrent neural networks (RNNs). However, success stories of Deep Learning with standard feed-forward neural networks (FNNs) are rare. FNNs that perform well are typically shallow and, therefore cannot exploit many levels of abstract representations. We introduce self-normalizing neural networks (SNNs) to enable high-level abstract representations. While batch normalization requires explicit normalization, neuron activations of SNNs automatically converge towards zero mean and unit variance. The activation function of SNNs are "scaled exponential linear units" (SELUs), which induce self-normalizing properties. Using the Banach fixed-point theorem, we prove that activations close to zero mean and unit variance that are propagated through many network layers will converge towards zero mean and unit variance -- even under the presence of noise and perturbations. This convergence property of SNNs allows to (1) train deep networks with many layers, (2) employ strong regularization, and (3) to make learning highly robust. Furthermore, for activations not close to unit variance, we prove an upper and lower bound on the variance, thus, vanishing and exploding gradients are impossible. We compared SNNs on (a) 121 tasks from the UCI machine learning repository, on (b) drug discovery benchmarks, and on (c) astronomy tasks with standard FNNs and other machine learning methods such as random forests and support vector machines. SNNs significantly outperformed all competing FNN methods at 121 UCI tasks, outperformed all competing methods at the Tox21 dataset, and set a new record at an astronomy data set. The winning SNN architectures are often very deep. Implementations are available at: github.com/bioinf-jku/SNNs.

|

[link]

https://github.com/bioinf-jku/SNNs

* They suggest a variation of ELUs, which leads to networks being automatically normalized.

* The effects are comparable to Batch Normalization, while requiring significantly less computation (barely more than a normal ReLU).

### How

* They define Self-Normalizing Neural Networks (SNNs) as neural networks, which automatically keep their activations at zero-mean and unit-variance (per neuron).

* SELUs

* They use SELUs to turn their networks into SNNs.

* Formula:

*

* with `alpha = 1.6733` and `lambda = 1.0507`.

* They proof that with properly normalized weights the activations approach a fixed point of zero-mean and unit-variance. (Different settings for alpha and lambda can lead to other fixed points.)

* They proof that this is still the case when previous layer activations and weights do not have optimal values.

* They proof that this is still the case when the variance of previous layer activations is very high or very low and argue that the mean of those activations is not so important.

* Hence, SELUs with these hyperparameters should have self-normalizing properties.

* SELUs are here used as a basis because:

1. They can have negative and positive values, which allows to control the mean.

2. They have saturating regions, which allows to dampen high variances from previous layers.

3. They have a slope larger than one, which allows to increase low variances from previous layers.

4. They generate a continuous curve, which ensures that there is a fixed point between variance damping and increasing.

* ReLUs, Leaky ReLUs, Sigmoids and Tanhs do not offer the above properties.

* Initialization

* SELUs for SNNs work best with normalized weights.

* They suggest to make sure per layer that:

1. The first moment (sum of weights) is zero.

2. The second moment (sum of squared weights) is one.

* This can be done by drawing weights from a normal distribution `N(0, 1/n)`, where `n` is the number of neurons in the layer.

* Alpha-dropout

* SELUs don't perform as well with normal Dropout, because their point of low variance is not 0.

* They suggest a modification of Dropout called Alpha-dropout.

* In this technique, values are not dropped to 0 but to `alpha' = -lambda * alpha = -1.0507 * 1.6733 = -1.7581`.

* Similar to dropout, activations are changed during training to compensate for the dropped units.

* Each activation `x` is changed to `a(xd+alpha'(1-d))+b`.

* `d = B(1, q)` is the dropout variable consisting of 1s and 0s.

* `a = (q + alpha'^2 q(1-q))^(-1/2)`

* `b = -(q + alpha'^2 q(1-q))^(-1/2) ((1-q)alpha')`

* They made good experiences with dropout rates around 0.05 to 0.1.

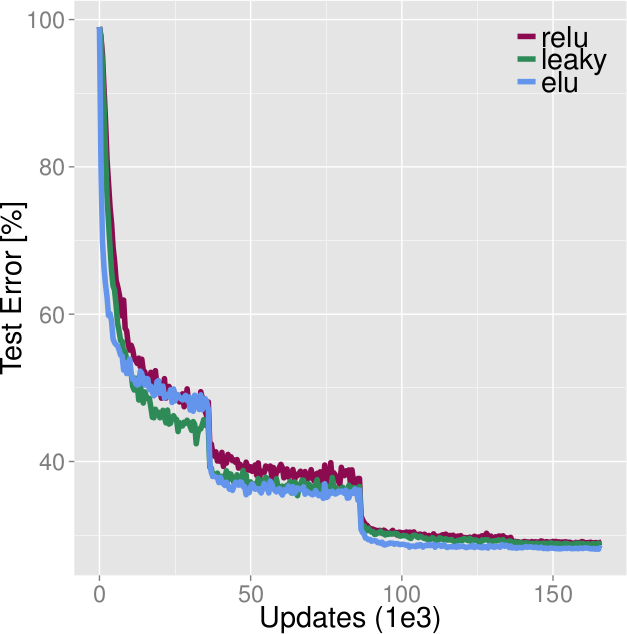

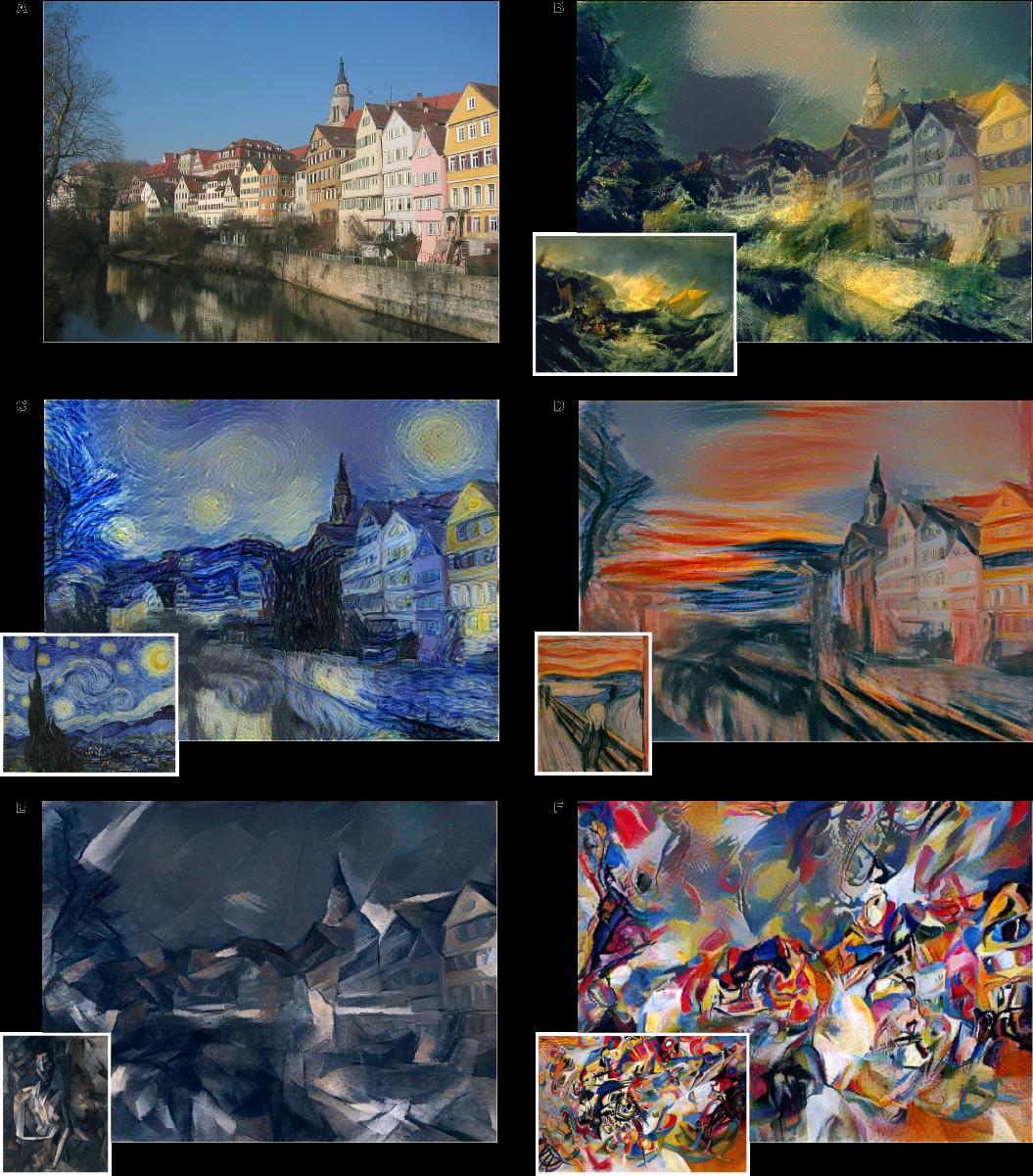

### Results

* Note: All of their tests are with fully connected networks. No convolutions.

* Example training results:

*

* Left: MNIST, Right: CIFAR10

* Networks have N layers each, see legend. No convolutions.

* 121 UCI Tasks

* They manage to beat SVMs and RandomForests, while other networks (Layer Normalization, BN, Weight Normalization, Highway Networks, ResNet) perform significantly worse than their network (and usually don't beat SVMs/RFs).

* Tox21

* They achieve better results than other networks (again, Layer Normalization, BN, etc.).

* They achive almost the same result as the so far best model on the dataset, which consists of a mixture of neural networks, SVMs and Random Forests.

* HTRU2

* They achieve better results than other networks.

* They beat the best non-neural method (Naive Bayes).

* Among all tested other networks, MSRAinit performs best, which references a network withput any normalization, only ReLUs and Microsoft Weight Initialization (see paper: `Delving deep into rectifiers: Surpassing human-level performance on imagenet classification`).

|

Wasserstein GAN

Martin Arjovsky and Soumith Chintala and Léon Bottou

arXiv e-Print archive - 2017 via Local arXiv

Keywords: stat.ML, cs.LG

First published: 2017/01/26 (9 years ago)

Abstract: We introduce a new algorithm named WGAN, an alternative to traditional GAN training. In this new model, we show that we can improve the stability of learning, get rid of problems like mode collapse, and provide meaningful learning curves useful for debugging and hyperparameter searches. Furthermore, we show that the corresponding optimization problem is sound, and provide extensive theoretical work highlighting the deep connections to other distances between distributions.

more

less

Martin Arjovsky and Soumith Chintala and Léon Bottou

arXiv e-Print archive - 2017 via Local arXiv

Keywords: stat.ML, cs.LG

First published: 2017/01/26 (9 years ago)

Abstract: We introduce a new algorithm named WGAN, an alternative to traditional GAN training. In this new model, we show that we can improve the stability of learning, get rid of problems like mode collapse, and provide meaningful learning curves useful for debugging and hyperparameter searches. Furthermore, we show that the corresponding optimization problem is sound, and provide extensive theoretical work highlighting the deep connections to other distances between distributions.

|

[link]

* They suggest a slightly altered algorithm for GANs.

* The new algorithm is more stable than previous ones.

### How

* Each GAN contains a Generator that generates (fake-)examples and a Discriminator that discriminates between fake and real examples.

* Both fake and real examples can be interpreted as coming from a probability distribution.

* The basis of each GAN algorithm is to somehow measure the difference between these probability distributions

and change the network parameters of G so that the fake-distribution becomes more and more similar to the real distribution.

* There are multiple distance measures to do that:

* Total Variation (TV)

* KL-Divergence (KL)

* Jensen-Shannon divergence (JS)

* This one is based on the KL-Divergence and is the basis of the original GAN, as well as LAPGAN and DCGAN.

* Earth-Mover distance (EM), aka Wasserstein-1

* Intuitively, one can imagine both probability distributions as hilly surfaces. EM then reflects, how much mass has to be moved to convert the fake distribution to the real one.

* Ideally, a distance measure has everywhere nice values and gradients

(e.g. no +/- infinity values; no binary 0 or 1 gradients; gradients that get continously smaller when the generator produces good outputs).

* In that regard, EM beats JS and JS beats TV and KL (roughly speaking). So they use EM.

* EM

* EM is defined as

*

* (inf = infinum, more or less a minimum)

* which is intractable, but following the Kantorovich-Rubinstein duality it can also be calculated via

*

* (sup = supremum, more or less a maximum)

* However, the second formula is here only valid if the network is a K-Lipschitz function (under every set of parameters).

* This can be guaranteed by simply clipping the discriminator's weights to the range `[-0.01, 0.01]`.

* Then in practice the following version of the tractable EM is used, where `w` are the parameters of the discriminator:

*

* The full algorithm is mostly the same as for DCGAN:

*

* Line 2 leads to training the discriminator multiple times per batch (i.e. more often than the generator).

* This is similar to the `max w in W` in the third formula (above).

* This was already part of the original GAN algorithm, but is here more actively used.

* Because of the EM distance, even a "perfect" discriminator still gives good gradient (in contrast to e.g. JS, where the discriminator should not be too far ahead). So the discriminator can be safely trained more often than the generator.

* Line 5 and 10 are derived from EM. Note that there is no more Sigmoid at the end of the discriminator!

* Line 7 is derived from the K-Lipschitz requirement (clipping of weights).

* High learning rates or using momentum-based optimizers (e.g. Adam) made the training unstable, which is why they use a small learning rate with RMSprop.

### Results

* Improved stability. The method converges to decent images with models which failed completely when using JS-divergence (like in DCGAN).

* For example, WGAN worked with generators that did not have batch normalization or only consisted of fully connected layers.

* Apparently no more mode collapse. (Mode collapse in GANs = the generator starts to generate often/always the practically same image, independent of the noise input.)

* There is a relationship between loss and image quality. Lower loss (at the generator) indicates higher image quality. Such a relationship did not exist for JS divergence.

* Example images:

*

|

YOLO9000: Better, Faster, Stronger

Joseph Redmon and Ali Farhadi

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/12/25 (9 years ago)

Abstract: We introduce YOLO9000, a state-of-the-art, real-time object detection system that can detect over 9000 object categories. First we propose various improvements to the YOLO detection method, both novel and drawn from prior work. The improved model, YOLOv2, is state-of-the-art on standard detection tasks like PASCAL VOC and COCO. At 67 FPS, YOLOv2 gets 76.8 mAP on VOC 2007. At 40 FPS, YOLOv2 gets 78.6 mAP, outperforming state-of-the-art methods like Faster RCNN with ResNet and SSD while still running significantly faster. Finally we propose a method to jointly train on object detection and classification. Using this method we train YOLO9000 simultaneously on the COCO detection dataset and the ImageNet classification dataset. Our joint training allows YOLO9000 to predict detections for object classes that don't have labelled detection data. We validate our approach on the ImageNet detection task. YOLO9000 gets 19.7 mAP on the ImageNet detection validation set despite only having detection data for 44 of the 200 classes. On the 156 classes not in COCO, YOLO9000 gets 16.0 mAP. But YOLO can detect more than just 200 classes; it predicts detections for more than 9000 different object categories. And it still runs in real-time.

more

less

Joseph Redmon and Ali Farhadi

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/12/25 (9 years ago)

Abstract: We introduce YOLO9000, a state-of-the-art, real-time object detection system that can detect over 9000 object categories. First we propose various improvements to the YOLO detection method, both novel and drawn from prior work. The improved model, YOLOv2, is state-of-the-art on standard detection tasks like PASCAL VOC and COCO. At 67 FPS, YOLOv2 gets 76.8 mAP on VOC 2007. At 40 FPS, YOLOv2 gets 78.6 mAP, outperforming state-of-the-art methods like Faster RCNN with ResNet and SSD while still running significantly faster. Finally we propose a method to jointly train on object detection and classification. Using this method we train YOLO9000 simultaneously on the COCO detection dataset and the ImageNet classification dataset. Our joint training allows YOLO9000 to predict detections for object classes that don't have labelled detection data. We validate our approach on the ImageNet detection task. YOLO9000 gets 19.7 mAP on the ImageNet detection validation set despite only having detection data for 44 of the 200 classes. On the 156 classes not in COCO, YOLO9000 gets 16.0 mAP. But YOLO can detect more than just 200 classes; it predicts detections for more than 9000 different object categories. And it still runs in real-time.

|

[link]

* They suggest a new version of YOLO, a model to detect bounding boxes in images.

* Their new version is more accurate, faster and is trained to recognize up to 9000 classes.

### How

* Their base model is the previous YOLOv1, which they improve here.

* Accuracy improvements

* They add batch normalization to the network.

* Pretraining usually happens on ImageNet at 224x224, fine tuning for bounding box detection then on another dataset, say Pascal VOC 2012, at higher resolutions, e.g. 448x448 in the case of YOLOv1.

This is problematic, because the pretrained network has to learn to deal with higher resolutions and a new task at the same time.

They instead first pretrain on low resolution ImageNet examples, then on higher resolution ImegeNet examples and only then switch to bounding box detection.

That improves their accuracy by about 4 percentage points mAP.

* They switch to anchor boxes, similar to Faster R-CNN. That's largely the same as in YOLOv1. Classification is now done per tested anchor box shape, instead of per grid cell.

The regression of x/y-coordinates is now a bit smarter and uses sigmoids to only translate a box within a grid cell.

* In Faster R-CNN the anchor box shapes are manually chosen (e.g. small squared boxes, large squared boxes, thin but high boxes, ...).

Here instead they learn these shapes from data.

That is done by applying k-Means to the bounding boxes in a dataset.

They cluster them into k=5 clusters and then use the centroids as anchor box shapes.

Their accuracy this way is the same as with 9 manually chosen anchor boxes.

(Using k=9 further increases their accuracy significantly, but also increases model complexity. As they want to predict 9000 classes they stay with k=5.)

* To better predict small bounding boxes, they add a pass-through connection from a higher resolution layer to the end of the network.

* They train their network now at multiple scales. (As the network is now fully convolutional, they can easily do that.)

* Speed improvements

* They get rid of their fully connected layers. Instead the network is now fully convolutional.

* They have also removed a handful or so of their convolutional layers.

* Capability improvement (weakly supervised learning)

* They suggest a method to predict bounding boxes of the 9000 most common classes in ImageNet.

They add a few more abstract classes to that (e.g. dog for all breeds of dogs) and arrive at over 9000 classes (9418 to be precise).

* They train on ImageNet and MSCOCO.

* ImageNet only contains class labels, no bounding boxes. MSCOCO only contains general classes (e.g. "dog" instead of the specific breed).

* They train iteratively on both datasets. MSCOCO is used for detection and classification, while ImageNet is only used for classification.

For an ImageNet example of class `c`, they search among the predicted bounding boxes for the one that has highest predicted probability of being `c`

and backpropagate only the classification loss for that box.

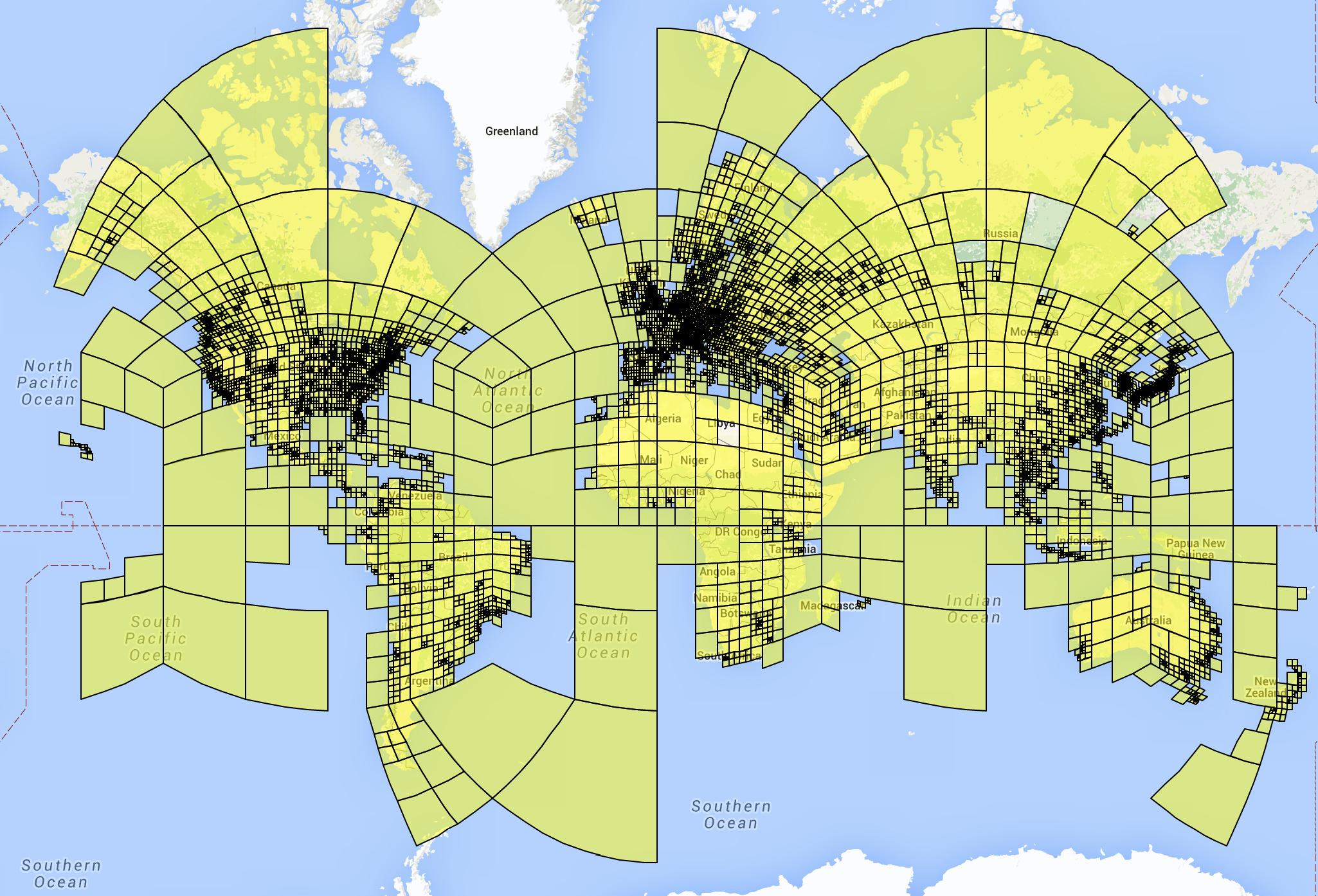

* In order to compensate the problem of different abstraction levels on the classes (e.g. "dog" vs a specific breed), they make use of WordNet.

Based on that data they generate a hierarchy/tree of classes, e.g. one path through that tree could be: object -> animal -> canine -> dog -> hunting dog -> terrier -> yorkshire terrier.

They let the network predict paths in that hierarchy, so that the prediction "dog" for a specific dog breed is not completely wrong.

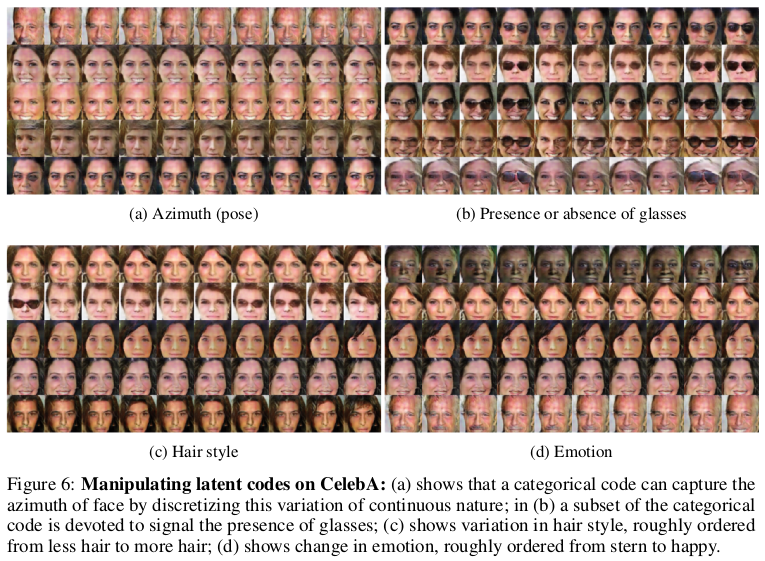

* Visualization of the hierarchy:

*

* They predict many small softmaxes for the paths in the hierarchy, one per node:

*

### Results

* Accuracy

* They reach about 73.4 mAP when training on Pascal VOC 2007 and 2012. That's slightly behind Faster R-CNN with VGG16 with 75.9 mAP, trained on MSCOCO+2007+2012.

* Speed

* They reach 91 fps (10ms/image) at image resolution 288x288 and 40 fps (25ms/image) at 544x544.

* Weakly supervised learning

* They test their 9000-class-detection on ImageNet's detection task, which contains bounding boxes for 200 object classes.

* They achieve 19.7 mAP for all classes and 16.0% mAP for the 156 classes which are not part of MSCOCO.

* For some classes they get 0 mAP accuracy.

* The system performs well for all kinds of animals, but struggles with not-living objects, like sunglasses.

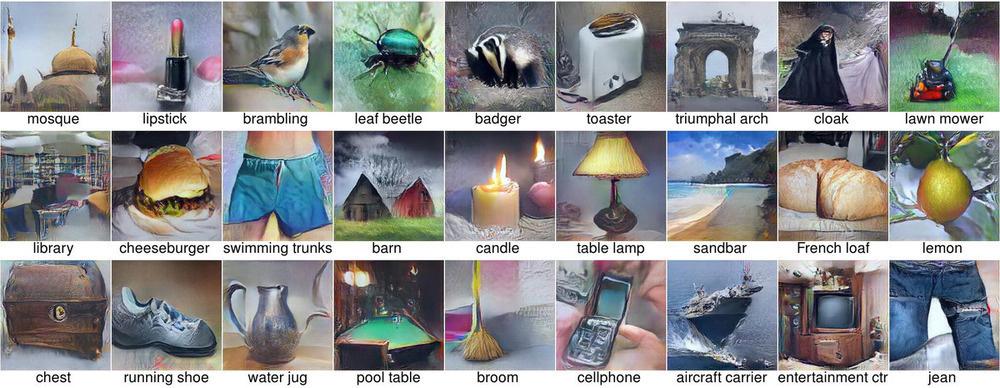

* Example images (notice the class labels):

*

|

You Only Look Once: Unified, Real-Time Object Detection

Redmon, Joseph and Divvala, Santosh Kumar and Girshick, Ross B. and Farhadi, Ali

Conference and Computer Vision and Pattern Recognition - 2016 via Local Bibsonomy

Keywords: dblp

Redmon, Joseph and Divvala, Santosh Kumar and Girshick, Ross B. and Farhadi, Ali

Conference and Computer Vision and Pattern Recognition - 2016 via Local Bibsonomy

Keywords: dblp

|

[link]

* They suggest a model ("YOLO") to detect bounding boxes in images.

* In comparison to Faster R-CNN, this model is faster but less accurate.

### How

* Architecture

* Input are images with a resolution of 448x448.

* Output are `S*S*(B*5 + C)` values (per image).

* `S` is the grid size (default value: 7). Each image is split up into `S*S` cells.

* `B` is the number of "tested" bounding box shapes at each cell (default value: 2).

So at each cell, the network might try one large and one small bounding box.

The network predicts additionally for each such tested bounding box `5` values.

These cover the exact position (x, y) and scale (height, width) of the bounding box as well as a confidence value.

They allow the network to fine tune the bounding box shape and reject it, e.g. if there is no object in the grid cell.

The confidence value is zero if there is no object in the grid cell and otherwise matches the IoU between predicted and true bounding box.

* `C` is the number of classes in the dataset (e.g. 20 in Pascal VOC). For each grid cell, the model decides once to which of the `C` objects the cell belongs.

* Rough overview of their outputs:

*

* In contrast to Faster R-CNN, their model does *not* use a separate region proposal network (RPN).

* Per bounding box they actually predict the *square root* of height and width instead of the raw values.

That is supposed to result in similar errors/losses for small and big bounding boxes.

* They use a total of 24 convolutional layers and 2 fully connected layers.

* Some of these convolutional layers are 1x1-convs that halve the number of channels (followed by 3x3s that double them again).

* Overview of the architecture:

*

* They use Leaky ReLUs (alpha=0.1) throughout the network. The last layer uses linear activations (apparently even for the class prediction...!?).

* Similarly to Faster R-CNN, they use a non maximum suppression that drops predicted bounding boxes if they are too similar to other predictions.

* Training

* They pretrain their network on ImageNet, then finetune on Pascal VOC.

* Loss

* They use sum-squared losses (apparently even for the classification, i.e. the `C` values).

* They dont propagate classification loss (for `C`) for grid cells that don't contain an object.

* For each grid grid cell they "test" `B` example shapes of bounding boxes (see above).

Among these `B` shapes, they only propagate the bounding box losses (regarding x, y, width, height, confidence) for the shape that has highest IoU with a ground truth bounding box.

* Most grid cells don't contain a bounding box. Their confidence values will all be zero, potentialle dominating the total loss.

To prevent that, the weighting of the confidence values in the loss function is reduced relative to the regression components (x, y, height, width).

### Results

* The coarse grid and B=2 setting lead to some problems. Namely, small objects are missed and bounding boxes can end up being dropped if they are too close to other bounding boxes.

* The model also has problems with unusual bounding box shapes.

* Overall their accuracy is about 10 percentage points lower than Faster R-CNN with VGG16 (63.4% vs 73.2%, measured in mAP on Pascal VOC 2007).

* They achieve 45fps (22ms/image), compared to 7fps (142ms/image) with Faster R-CNN + VGG16.

* Overview of results on Pascal VOC 2012:

*

* They also suggest a faster variation of their model which reached 145fps (7ms/image) at a further drop of 10 percentage points mAP (to 52.7%).

* A significant part of their error seems to come from badly placed or sized bounding boxes (e.g. too wide or too much to the right).

* They mistake background less often for objects than Fast R-CNN. They test combining both models with each other and can improve Fast R-CNN's accuracy by about 2.5 percentage points mAP.

* They test their model on paintings/artwork (Picasso and People-Art datasets) and notice that it generalizes fairly well to that domain.

* Example results (notice the paintings at the top):

*

|

PVANet: Lightweight Deep Neural Networks for Real-time Object Detection

Sanghoon Hong and Byungseok Roh and Kye-Hyeon Kim and Yeongjae Cheon and Minje Park

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/11/23 (9 years ago)

Abstract: In object detection, reducing computational cost is as important as improving accuracy for most practical usages. This paper proposes a novel network structure, which is an order of magnitude lighter than other state-of-the-art networks while maintaining the accuracy. Based on the basic principle of more layers with less channels, this new deep neural network minimizes its redundancy by adopting recent innovations including C.ReLU and Inception structure. We also show that this network can be trained efficiently to achieve solid results on well-known object detection benchmarks: 84.9% and 84.2% mAP on VOC2007 and VOC2012 while the required compute is less than 10% of the recent ResNet-101.

more

less

Sanghoon Hong and Byungseok Roh and Kye-Hyeon Kim and Yeongjae Cheon and Minje Park

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/11/23 (9 years ago)

Abstract: In object detection, reducing computational cost is as important as improving accuracy for most practical usages. This paper proposes a novel network structure, which is an order of magnitude lighter than other state-of-the-art networks while maintaining the accuracy. Based on the basic principle of more layers with less channels, this new deep neural network minimizes its redundancy by adopting recent innovations including C.ReLU and Inception structure. We also show that this network can be trained efficiently to achieve solid results on well-known object detection benchmarks: 84.9% and 84.2% mAP on VOC2007 and VOC2012 while the required compute is less than 10% of the recent ResNet-101.

|

[link]

* They present a variation of Faster R-CNN.

* Faster R-CNN is a model that detects bounding boxes in images.

* Their variation is about as accurate as the best performing versions of Faster R-CNN.

* Their variation is significantly faster than these variations (roughly 50ms per image).

### How

* PVANET reuses the standard Faster R-CNN architecture:

* A base network that transforms an image into a feature map.

* A region proposal network (RPN) that uses the feature map to predict bounding box candidates.

* A classifier that uses the feature map and the bounding box candidates to predict the final bounding boxes.

* PVANET modifies the base network and keeps the RPN and classifier the same.

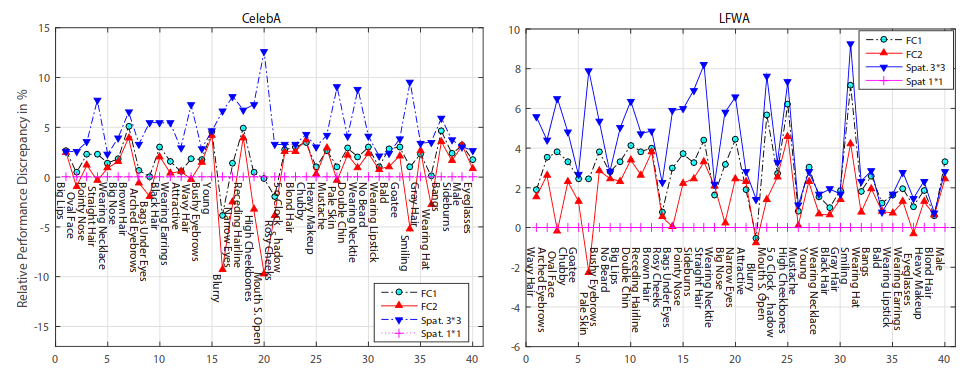

* Inception

* Their base network uses eight Inception modules.

* They argue that these are good choices here, because they are able to represent an image at different scales (aka at different receptive field sizes)

due to their mixture of 3x3 and 1x1 convolutions.

*

* Representing an image at different scales is useful here in order to detect both large and small bounding boxes.

* Inception modules are also reasonably fast.

* Visualization of their Inception modules:

*

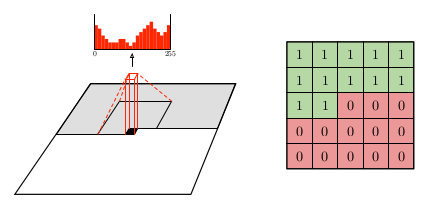

* Concatenated ReLUs

* Before the eight Inception modules, they start the network with eight convolutions using concatenated ReLUs.

* These CReLUs compute both the classic ReLU result (`max(0, x)`) and concatenate to that the negated result, i.e. something like `f(x) = max(0, x <concat> (-1)*x)`.

* That is done, because among the early one can often find pairs of convolution filters that are the negated variations of each other.

So by adding CReLUs, the network does not have to compute these any more, instead they are created (almost) for free, reducing the computation time by up to 50%.

* Visualization of their final CReLU block:

* TODO

*

* Multi-Scale output

* Usually one would generate the final feature map simply from the output of the last convolution.

* They instead combine the outputs of three different convolutions, each resembling a different scale (or level of abstraction).

* They take one from an early point of the network (downscaled), one from the middle part (kept the same) and one from the end (upscaled).

* They concatenate these and apply a 1x1 convolution to generate the final output.

* Other stuff

* Most of their network uses residual connections (including the Inception modules) to facilitate learning.

* They pretrain on ILSVRC2012 and then perform fine-tuning on MSCOCO, VOC 2007 and VOC 2012.

* They use plateau detection for their learning rate, i.e. if a moving average of the loss does not improve any more, they decrease the learning rate. They say that this increases accuracy significantly.

* The classifier in Faster R-CNN consists of fully connected layers. They compress these via Truncated SVD to speed things up. (That was already part of Fast R-CNN, I think.)

### Results

* On Pascal VOC 2012 they achieve 82.5% mAP at 46ms/image (Titan X GPU).

* Faster R-CNN + ResNet-101: 83.8% at 2.2s/image.

* Faster R-CNN + VGG16: 75.9% at 110ms/image.

* R-FCN + ResNet-101: 82.0% at 133ms/image.

* Decreasing the number of region proposals from 300 per image to 50 almost doubles the speed (to 27ms/image) at a small loss of 1.5 percentage points mAP.

* Using Truncated SVD for the classifier reduces the required timer per image by about 30% at roughly 1 percentage point of mAP loss.

|

R-FCN: Object Detection via Region-based Fully Convolutional Networks

Dai, Jifeng and Li, Yi and He, Kaiming and Sun, Jian

Neural Information Processing Systems Conference - 2016 via Local Bibsonomy

Keywords: dblp

Dai, Jifeng and Li, Yi and He, Kaiming and Sun, Jian

Neural Information Processing Systems Conference - 2016 via Local Bibsonomy

Keywords: dblp

|

[link]

* They present a variation of Faster R-CNN, i.e. a model that predicts bounding boxes in images and classifies them.

* In contrast to Faster R-CNN, their model is fully convolutional.

* In contrast to Faster R-CNN, the computation per bounding box candidate (region proposal) is very low.

### How

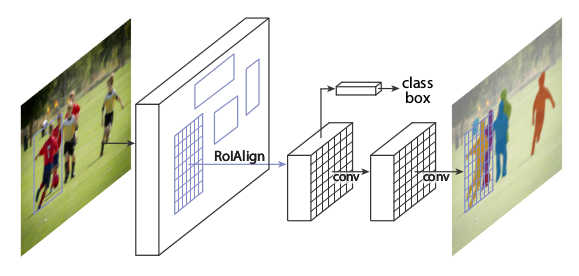

* The basic architecture is the same as in Faster R-CNN:

* A base network transforms an image to a feature map. Here they use ResNet-101 to do that.

* A region proposal network (RPN) uses the feature map to locate bounding box candidates ("region proposals") in the image.

* A classifier uses the feature map and the bounding box candidates and classifies each one of them into `C+1` classes,

where `C` is the number of object classes to spot (e.g. "person", "chair", "bottle", ...) and `1` is added for the background.

* During that process, small subregions of the feature maps (those that match the bounding box candidates) must be extracted and converted to fixed-sizes matrices.

The method to do that is called "Region of Interest Pooling" (RoI-Pooling) and is based on max pooling.

It is mostly the same as in Faster R-CNN.

* Visualization of the basic architecture:

*

* Position-sensitive classification

* Fully convolutional bounding box detectors tend to not work well.

* The authors argue, that the problems come from the translation-invariance of convolutions, which is a desirable property in the case of classification but not when precise localization of objects is required.

* They tackle that problem by generating multiple heatmaps per object class, each one being slightly shifted ("position-sensitive score maps").

* More precisely:

* The classifier generates per object class `c` a total of `k*k` heatmaps.

* In the simplest form `k` is equal to `1`. Then only one heatmap is generated, which signals whether a pixel is part of an object of class `c`.

* They use `k=3*3`. The first of those heatmaps signals, whether a pixel is part of the *top left* corner of a bounding box of class `c`. The second heatmap signals, whether a pixel is part of the *top center* of a bounding box of class `c` (and so on).

* The RoI-Pooling is applied to these heatmaps.

* For `k=3*3`, each bounding box candidate is converted to `3*3` values. The first one resembles the top left corner of the bounding box candidate. Its value is generated by taking the average of the values in that area in the first heatmap.

* Once the `3*3` values are generated, the final score of class `c` for that bounding box candidate is computed by averaging the values.

* That process is repeated for all classes and a softmax is used to determine the final class.

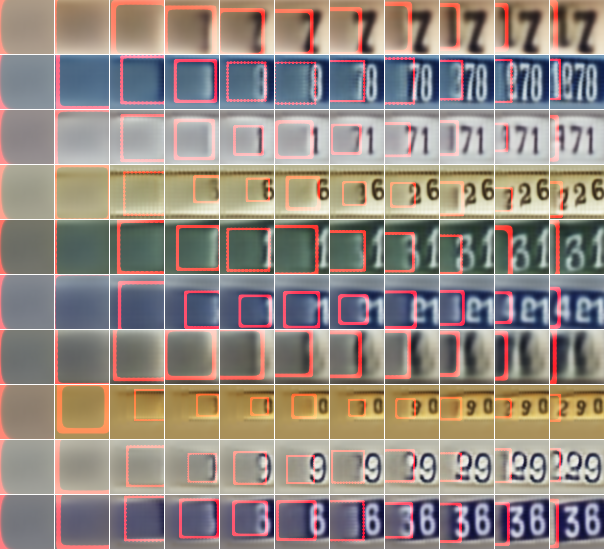

* The graphic below shows examples for that:

*

* The above described RoI-Pooling uses only averages and hence is almost (computationally) free.

* They make use of that during the training by sampling many candidates and only backpropagating on those with high losses (online hard example mining, OHEM).

* À trous trick

* In order to increase accuracy for small bounding boxes they use the à trous trick.

* That means that they use a pretrained base network (here ResNet-101), then remove a pooling layer and set the à trous rate (aka dilation) of all convolutions after the removed pooling layer to `2`.

* The á trous rate describes the distance of sampling locations of a convolution. Usually that is `1` (sampled locations are right next to each other). If it is set to `2`, there is one value "skipped" between each pair of neighbouring sampling location.

* By doing that, the convolutions still behave as if the pooling layer existed (and therefore their weights can be reused). At the same time, they work at an increased resolution, making them more capable of classifying small objects. (Runtime increases though.)

* Training of R-FCN happens similarly to Faster R-CNN.

### Results

* Similar accuracy as the most accurate Faster R-CNN configurations at a lower runtime of roughly 170ms per image.

* Switching to ResNet-50 decreases accuracy by about 2 percentage points mAP (at faster runtime). Switching to ResNet-152 seems to provide no measureable benefit.

* OHEM improves mAP by roughly 2 percentage points.

* À trous trick improves mAP by roughly 2 percentage points.

* Training on `k=1` (one heatmap per class) results in a failure, i.e. a model that fails to predict bounding boxes. `k=7` is slightly more accurate than `k=3`.

1 Comments

|

Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks

Ren, Shaoqing and He, Kaiming and Girshick, Ross B. and Sun, Jian

Neural Information Processing Systems Conference - 2015 via Local Bibsonomy

Keywords: dblp

Ren, Shaoqing and He, Kaiming and Girshick, Ross B. and Sun, Jian

Neural Information Processing Systems Conference - 2015 via Local Bibsonomy

Keywords: dblp

|

[link]

* R-CNN and its successor Fast R-CNN both rely on a "classical" method to find region proposals in images (i.e. "Which regions of the image look like they *might* be objects?").

* That classical method is selective search.

* Selective search is quite slow (about two seconds per image) and hence the bottleneck in Fast R-CNN.

* They replace it with a neural network (region proposal network, aka RPN).

* The RPN reuses the same features used for the remainder of the Fast R-CNN network, making the region proposal step almost free (about 10ms).

### How

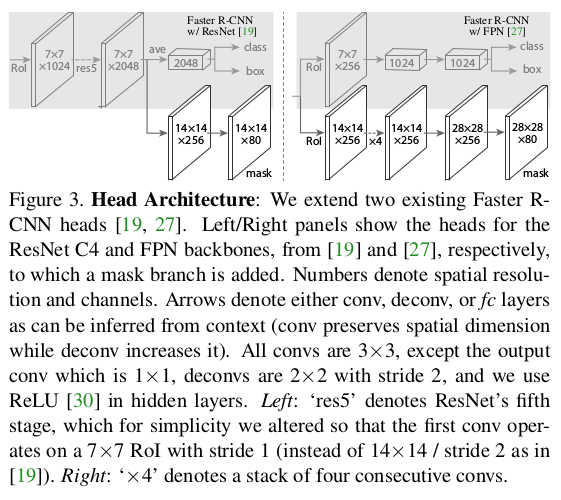

* They now have three components in their network:

* A model for feature extraction, called the "feature extraction network" (**FEN**). Initialized with the weights of a pretrained network (e.g. VGG16).

* A model to use these features and generate region proposals, called the "Region Proposal Network" (**RPN**).

* A model to use these features and region proposals to classify each regions proposal's object and readjust the bounding box, called the "classification network" (**CN**). Initialized with the weights of a pretrained network (e.g. VGG16).

* Usually, FEN will contain the convolutional layers of the pretrained model (e.g. VGG16), while CN will contain the fully connected layers.

* (Note: Only "RPN" really pops up in the paper, the other two remain more or less unnamed. I added the two names to simplify the description.)

* Rough architecture outline:

*

* The basic method at test is as follows:

1. Use FEN to convert the image to features.

2. Apply RPN to the features to generate region proposals.

3. Use Region of Interest Pooling (RoI-Pooling) to convert the features of each region proposal to a fixed sized vector.

4. Apply CN to the RoI-vectors to a) predict the class of each object (out of `K` object classes and `1` background class) and b) readjust the bounding box dimensions (top left coordinate, height, width).

* RPN

* Basic idea:

* Place anchor points on the image, all with the same distance to each other (regular grid).

* Around each anchor point, extract rectangular image areas in various shapes and sizes ("anchor boxes"), e.g. thin/square/wide and small/medium/large rectangles. (More precisely: The features of these areas are extracted.)

* Visualization:

*

* Feed the features of these areas through a classifier and let it rate/predict the "regionness" of the rectangle in a range between 0 and 1. Values greater than 0.5 mean that the classifier thinks the rectangle might be a bounding box. (CN has to analyze that further.)

* Feed the features of these areas through a regressor and let it optimize the region size (top left coordinate, height, width). That way you get all kinds of possible bounding box shapes, even though you only use a few base shapes.

* Implementation:

* The regular grid of anchor points naturally arises due to the downscaling of the FEN, it doesn't have to be implemented explicitly.

* The extraction of anchor boxes and classification + regression can be efficiently implemented using convolutions.

* They first apply a 3x3 convolution on the feature maps. Note that the convolution covers a large image area due to the downscaling.

* Not so clear, but sounds like they use 256 filters/kernels for that convolution.

* Then they apply some 1x1 convolutions for the classification and regression.

* They use `2*k` 1x1 convolutions for classification and `4*k` 1x1 convolutions for regression, where `k` is the number of different shapes of anchor boxes.

* They use `k=9` anchor box types: Three sizes (small, medium, large), each in three shapes (thin, square, wide).

* The way they build training examples (below) forces some 1x1 convolutions to react only to some anchor box types.

* Training:

* Positive examples are anchor boxes that have an IoU with a ground truth bounding box of 0.7 or more. If no anchor point has such an IoU with a specific box, the one with the highest IoU is used instead.

* Negative examples are all anchor boxes that have IoU that do not exceed 0.3 for any bounding box.

* Any anchor point that falls in neither of these groups does not contribute to the loss.

* Anchor boxes that would violate image boundaries are not used as examples.

* The loss is similar to the one in Fast R-CNN: A sum consisting of log loss for the classifier and smooth L1 loss (=smoother absolute distance) for regression.

* Per batch they only sample examples from one image (for efficiency).

* They use 128 positive examples and 128 negative ones. If they can't come up with 128 positive examples, they add more negative ones.

* Test:

* They use non-maximum suppression (NMS) to remove too identical region proposals, i.e. among all region proposals that have an IoU overlap of 0.7 or more, they pick the one that has highest score.

* They use the 300 proposals with highest score after NMS (or less if there aren't that many).

* Feature sharing

* They want to share the features of the FEN between the RPN and the CN.

* So they need a special training method that fine-tunes all three components while keeping the features extracted by FEN useful for both RPN and CN at the same time (not only for one of them).

* Their training methods are:

* Alternating traing: One batch for FEN+RPN, one batch for FEN+CN, then again one batch for FEN+RPN and so on.

* Approximate joint training: Train one network of FEN+RPN+CN. Merge the gradients of RPN and CN that arrive at FEN via simple summation. This method does not compute a gradient from CN through the RPN's regression task, as that is non-trivial. (This runs 25-50% faster than alternating training, accuracy is mostly the same.)

* Non-approximate joint training: This would compute the above mentioned missing gradient, but isn't implemented.

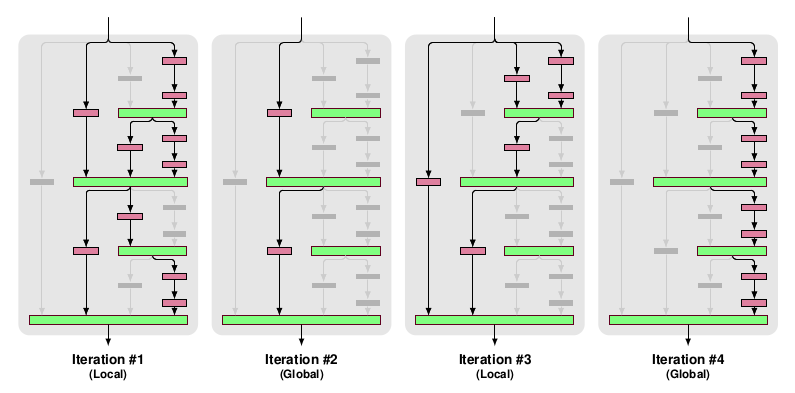

* 4-step alternating training:

1. Clone FEN to FEN1 and FEN2.

2. Train the pair FEN1 + RPN.

3. Train the pair FEN2 + CN using the region proposals from the trained RPN.

4. Fine-tune the pair FEN2 + RPN. FEN2 is fixed, RPN takes the weights from step 2.

5. Fine-tune the pair FEN2 + CN. FEN2 is fixed, CN takes the weights from step 3, region proposals come from RPN from step 4.

* Results

* Example images:

*

* Pascal VOC (with VGG16 as FEN)

* Using an RPN instead of SS (selective search) slightly improved mAP from 66.9% to 69.9%.

* Training RPN and CN on the same FEN (sharing FEN's weights) does not worsen the mAP, but instead improves it slightly from 68.5% to 69.9%.

* Using the RPN instead of SS significantly speeds up the network, from 1830ms/image (less than 0.5fps) to 198ms/image (5fps). (Both stats with VGG16. They also use ZF as the FEN, which puts them at 17fps, but mAP is lower.)

* Using per anchor point more scales and shapes (ratios) for the anchor boxes improves results.

* 1 scale, 1 ratio: 65.8% mAP (scale `128*128`, ratio 1:1) or 66.7% mAP (scale `256*256`, same ratio).

* 3 scales, 3 ratios: 69.9% mAP (scales `128*128`, `256*256`, `512*512`; ratios 1:1, 1:2, 2:1).

* Two-staged vs one-staged

* Instead of the two-stage system (first, generate proposals via RPN, then classify them via CN), they try a one-staged system.

* In the one-staged system they move a sliding window over the computed feature maps and regress at every location the bounding box sizes and classify the box.

* When doing this, their performance drops from 58.7% to about 54%.

|

Fast R-CNN

Girshick, Ross B.

International Conference on Computer Vision - 2015 via Local Bibsonomy

Keywords: dblp

Girshick, Ross B.

International Conference on Computer Vision - 2015 via Local Bibsonomy

Keywords: dblp

|

[link]

* The original R-CNN had three major disadvantages:

1. Two-staged training pipeline: Instead of only training a CNN, one had to train first a CNN and then multiple SVMs.

2. Expensive training: Training was slow and required lots of disk space (feature vectors needed to be written to disk for all region proposals (2000 per image) before training the SVMs).

3. Slow test: Each region proposal had to be handled independently.

* Fast R-CNN ist an improved version of R-CNN and tackles the mentioned problems.

* It no longer uses SVMs, only CNNs (single-stage).

* It does one single feature extraction per image instead of per region, making it much faster (9x faster at training, 213x faster at test).

* It is more accurate than R-CNN.

### How

* The basic architecture, training and testing methods are mostly copied from R-CNN.

* For each image at test time they do:

* They generate region proposals via selective search.

* They feed the image once through the convolutional layers of a pre-trained network, usually VGG16.

* For each region proposal they extract the respective region from the features generated by the network.

* The regions can have different sizes, but the following steps need fixed size vectors. So each region is downscaled via max-pooling so that it has a size of 7x7 (so apparently they ignore regions of sizes below 7x7...?).

* This is called Region of Interest Pooling (RoI-Pooling).

* During the backwards pass, partial derivatives can be transferred to the maximum value (as usually in max pooling). That derivative values are summed up over different regions (in the same image).

* They reshape the 7x7 regions to vectors of length `F*7*7`, where `F` was the number of filters in the last convolutional layer.

* They feed these vectors through another network which predicts:

1. The class of the region (including background class).

2. Top left x-coordinate, top left y-coordinate, log height and log width of the bounding box (i.e. it fine-tunes the region proposal's bounding box). These values are predicted once for every class (so `K*4` values).

* Architecture as image:

*

* Sampling for training

* Efficiency

* If batch size is `B` it is inefficient to sample regions proposals from `B` images as each image will require a full forward pass through the base network (e.g. VGG16).

* It is much more efficient to use few images to share most of the computation between region proposals.

* They use two images per batch (each 64 region proposals) during training.

* This technique introduces correlations between examples in batches, but they did not observe any problems from that.

* They call this technique "hierarchical sampling" (first images, then region proposals).

* IoUs

* Positive examples for specific classes during training are region proposals that have an IoU with ground truth bounding boxes of `>=0.5`.

* Examples for background region proposals during training have IoUs with any ground truth box in the interval `(0.1, 0.5]`.

* Not picking IoUs below 0.1 is similar to hard negative mining.

* They use 25% positive examples, 75% negative/background examples per batch.

* They apply horizontal flipping as data augmentation, nothing else.

* Outputs

* For their class predictions the use a simple softmax with negative log likelihood.

* For their bounding box regression they use a smooth L1 loss (similar to mean absolute error, but switches to mean squared error for very low values).

* Smooth L1 loss is less sensitive to outliers and less likely to suffer from exploding gradients.

* The smooth L1 loss is only active for positive examples (not background examples). (Not active means that it is zero.)

* Training schedule

* The use SGD.

* They train 30k batches with learning rate 0.001, then 0.0001 for another 10k batches. (On Pascal VOC, they use more batches on larger datasets.)

* They use twice the learning rate for the biases.

* They use momentum of 0.9.

* They use parameter decay of 0.0005.

* Truncated SVD

* The final network for class prediction and bounding box regression has to be applied to every region proposal.

* It contains one large fully connected hidden layer and one fully connected output layer (`K+1` classes plus `K*4` regression values).

* For 2000 proposals that becomes slow.

* So they compress the layers after training to less weights via truncated SVD.

* A weights matrix is approximated via

* U (`u x t`) are the first `t` left-singular vectors of W.

* Sigma is a `t x t` diagonal matrix of the top `t` singular values.

* V (`v x t`) are the first `t` right-singular vectors of W.

* W is then replaced by two layers: One contains `Sigma V^T` as weights (no biases), the other contains `U` as weights (with original biases).

* Parameter count goes down to `t(u+v)` from `uv`.

### Results

* They try three base models:

* AlexNet (Small, S)

* VGG-CNN-M-1024 (Medium, M)

* VGG16 (Large, L)

* On VGG16 and Pascal VOC 2007, compared to original R-CNN:

* Training time down to 9.5h from 84h (8.8x faster).

* Test rate *with SVD* (1024 singular values) improves from 47 seconds per image to 0.22 seconds per image (213x faster).

* Test rate *without SVD* improves similarly to 0.32 seconds per image.

* mAP improves from 66.0% to 66.6% (66.9% without SVD).

* Per class accuracy results:

* Fast_R-CNN__pvoc2012.jpg

*

* Fixing the weights of VGG16's convolutional layers and only fine-tuning the fully connected layers (those are applied to each region proposal), decreases the accuracy to 61.4%.

* This decrease in accuracy is most significant for the later convolutional layers, but marginal for the first layers.

* Therefor they only train the convolutional layers starting with `conv3_1` (9 out of 13 layers), which speeds up training.

* Multi-task training

* Training models on classification and bounding box regression instead of only on classification improves the mAP (from 62.6% to 66.9%).

* Doing this in one hierarchy instead of two seperate models (one for classification, one for bounding box regression) increases mAP by roughly 2-3 percentage points.

* They did not find a significant benefit of training the model on multiple scales (e.g. same image sometimes at 400x400, sometimes at 600x600, sometimes at 800x800 etc.).

* Note that their raw CNN (everything before RoI-Pooling) is fully convolutional, so they can feed the images at any scale through the network.

* Increasing the amount of training data seemed to improve mAP a bit, but not as much as one might hope for.

* Using a softmax loss instead of an SVM seemed to marginally increase mAP (0-1 percentage points).

* Using more region proposals from selective search does not simply increase mAP. Instead it can lead to higher recall, but lower precision.

*

* Using densely sampled region proposals (as in sliding window) significantly reduces mAP (from 59.2% to 52.9%). If SVMs instead of softmaxes are used, the results are even worse (49.3%).

|

Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation

Girshick, Ross B. and Donahue, Jeff and Darrell, Trevor and Malik, Jitendra

Conference and Computer Vision and Pattern Recognition - 2014 via Local Bibsonomy

Keywords: dblp

Girshick, Ross B. and Donahue, Jeff and Darrell, Trevor and Malik, Jitendra

Conference and Computer Vision and Pattern Recognition - 2014 via Local Bibsonomy

Keywords: dblp

|

[link]

* Previously, methods to detect bounding boxes in images were often based on the combination of manual feature extraction with SVMs.

* They replace the manual feature extraction with a CNN, leading to significantly higher accuracy.

* They use supervised pre-training on auxiliary datasets to deal with the small amount of labeled data (instead of the sometimes used unsupervised pre-training).

* They call their method R-CNN ("Regions with CNN features").

### How

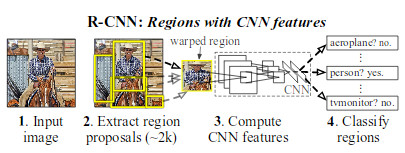

* Their system has three modules: 1) Region proposal generation, 2) CNN-based feature extraction per region proposal, 3) classification.

*

* Region proposals generation

* A region proposal is a bounding box candidate that *might* contain an object.

* By default they generate 2000 region proposals per image.

* They suggest "simple" (i.e. not learned) algorithms for this step (e.g. objectneess, selective search, CPMC).

* They use selective search (makes it comparable to previous systems).

* CNN features

* Uses a CNN to extract features, applied to each region proposal (replaces the previously used manual feature extraction).

* So each region proposal ist turned into a fixed length vector.

* They use AlexNet by Krizhevsky et al. as their base CNN (takes 227x227 RGB images, converts them into 4096-dimensional vectors).

* They add `p=16` pixels to each side of every region proposal, extract the pixels and then simply resize them to 227x227 (ignoring aspect ratio, so images might end up distorted).

* They generate one 4096d vector per image, which is less than what some previous manual feature extraction methods used. That enables faster classification, less memory usage and thus more possible classes.

* Classification

* A classifier that receives the extracted feature vectors (one per region proposal) and classifies them into a predefined set of available classes (e.g. "person", "car", "bike", "background / no object").

* They use one SVM per available class.

* The regions that were not classified as background might overlap (multiple bounding boxes on the same object).

* They use greedy non-maximum suppresion to fix that problem (for each class individually).

* That method simply rejects regions if they overlap strongly with another region that has higher score.

* Overlap is determined via Intersection of Union (IoU).

* Training method

* Pre-Training of CNN

* They use AlexNet pretrained on Imagenet (1000 classes).

* They replace the last fully connected layer with a randomly initialized one that leads to `C+1` classes (`C` object classes, `+1` for background).

* Fine-Tuning of CNN

* The use SGD with learning rate `0.001`.

* Batch size is 128 (32 positive windows, 96 background windows).

* A region proposal is considered positive, if its IoU with any ground-truth bounding box is `>=0.5`.

* SVM

* They train one SVM per class via hard negative mining.

* For positive examples they use here an IoU threshold of `>=0.3`, which performed better than 0.5.

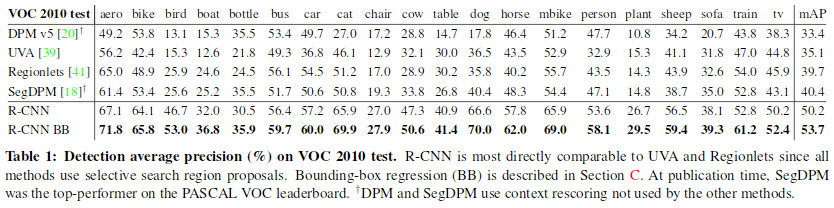

### Results

* Pascal VOC 2010

* They: 53.7% mAP

* Closest competitor (SegDPM): 40.4% mAP

* Closest competitor that uses the same region proposal method (UVA): 35.1% mAP

*

* ILSVRC2013 detection

* They: 31.4% mAP

* Closest competitor (OverFeat): 24.3% mAP

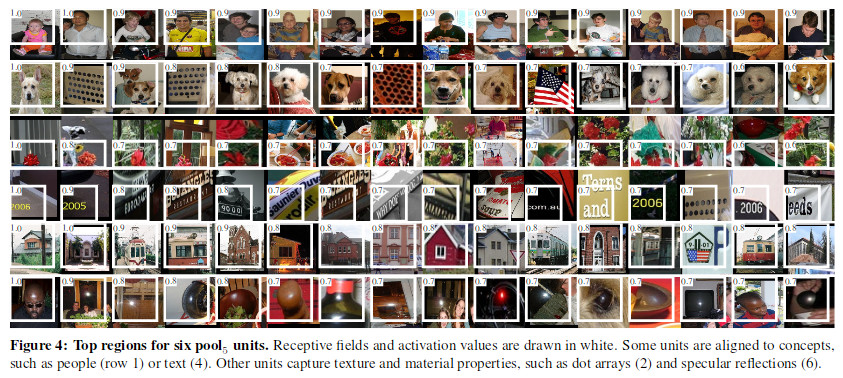

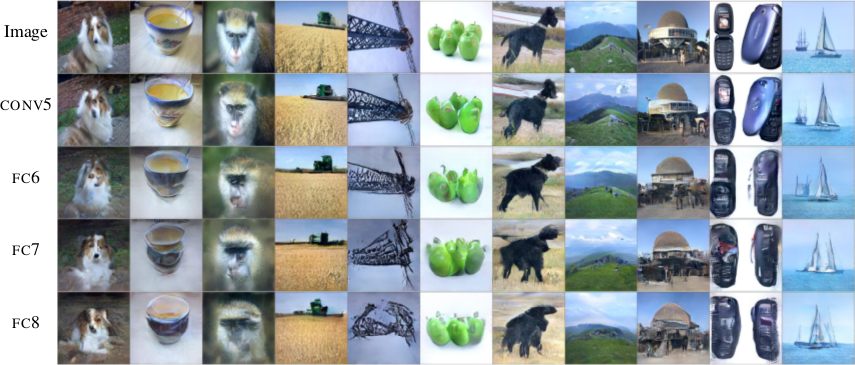

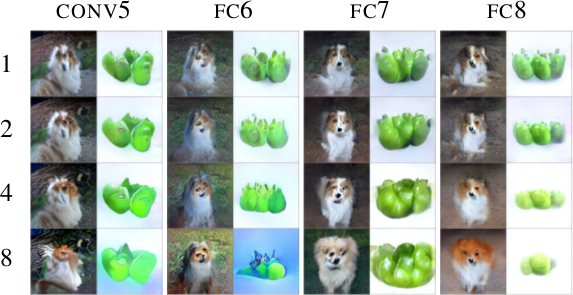

* The feed a large number of region proposals through the network and log for each filter in the last conv-layer which images activated it the most:

*

* Usefulness of layers:

* They remove later layers of the network and retrain in order to find out which layers are the most useful ones.

* Their result is that both fully connected layers of AlexNet seemed to be very domain-specific and profit most from fine-tuning.

* Using VGG16:

* Using VGG16 instead of AlexNet increased mAP from 58.5% to 66.0% on Pascal VOC 2007.

* Computation time was 7 times higher.

* They train a linear regression model that improves the bounding box dimensions based on the extracted features of the last pooling layer. That improved their mAP by 3-4 percentage points.

* The region proposals generated by selective search have a recall of 98% on Pascal VOC and 91.6% on ILSVRC2013 (measured by IoU of `>=0.5`).

|

Ten Years of Pedestrian Detection, What Have We Learned?

Benenson, Rodrigo and Omran, Mohamed and Hosang, Jan Hendrik and Schiele, Bernt

European Conference on Computer Vision - 2014 via Local Bibsonomy

Keywords: dblp

Benenson, Rodrigo and Omran, Mohamed and Hosang, Jan Hendrik and Schiele, Bernt

European Conference on Computer Vision - 2014 via Local Bibsonomy

Keywords: dblp

|

[link]

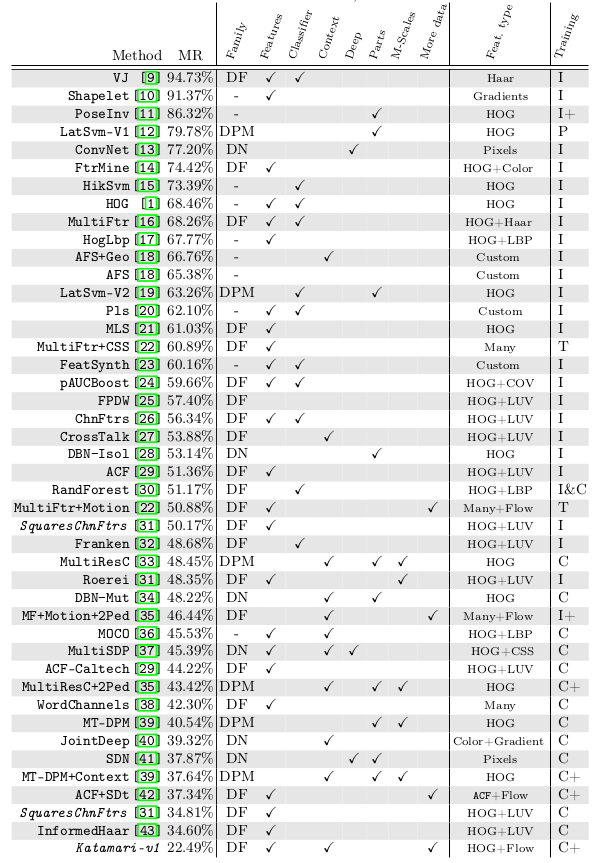

* They compare the results of various models for pedestrian detection.

* The various models were developed over the course of ~10 years (2003-2014).

* They analyze which factors seemed to improve the results.

* They derive new models for pedestrian detection from that.

### Comparison: Datasets

* Available datasets

* INRIA: Small dataset. Diverse images.

* ETH: Video dataset. Stereo images.

* TUD-Brussels: Video dataset.

* Daimler: No color channel.

* Daimler stereo: Stereo images.

* Caltech-USA: Most often used. Large dataset.

* KITTI: Often used. Large dataset. Stereo images.

* All datasets except KITTI are part of the "unified evaluation toolbox" that allows authors to easily test on all of these datasets.

* The evaluation started initially with per-window (FPPW) and later changed to per-image (FPPI), because per-window skewed the results.

* Common evaluation metrics:

* MR: Log-average miss-rate (lower is better)

* AUC: Area under the precision-recall curve (higher is better)

### Comparison: Methods

* Families

* They identified three families of methods: Deformable Parts Models, Deep Neural Networks, Decision Forests.

* Decision Forests was the most popular family.

* No specific family seemed to perform better than other families.

* There was no evidence that non-linearity in kernels was needed (given sophisticated features).

* Additional data

* Adding (coarse) optical flow data to each image seemed to consistently improve results.

* There was some indication that adding stereo data to each image improves the results.

* Context

* For sliding window detectors, adding context from around the window seemed to improve the results.

* E.g. context can indicate whether there were detections next to the window as people tend to walk in groups.

* Deformable parts

* They saw no evidence that deformable part models outperformed other models.

* Multi-Scale models

* Training separate models for each sliding window scale seemed to improve results slightly.

* Deep architectures

* They saw no evidence that deep neural networks outperformed other models. (Note: Paper is from 2014, might have changed already?)

* Features

* Best performance was usually achieved with simple HOG+LUV features, i.e. by converting each window into:

* 6 channels of gradient orientations

* 1 channel of gradient magnitude

* 3 channels of LUV color space

* Some models use significantly more channels for gradient orientations, but there was no evidence that this was necessary to achieve good accuracy.

* However, using more different features (and more sophisticated ones) seemed to improve results.

### Their new model:

* They choose Decisions Forests as their model framework (2048 level-2 trees, i.e. 3 thresholds per tree).

* They use features from the [Integral Channels Features framework](http://pages.ucsd.edu/~ztu/publication/dollarBMVC09ChnFtrs_0.pdf). (Basically just a mixture of common/simple features per window.)

* They add optical flow as a feature.

* They add context around the window as a feature. (A second detector that detects windows containing two persons.)

* Their model significantly improves upon the state of the art (from 34 to 22% MR on Caltech dataset).

*Overview of models developed over the years, starting with Viola Jones (VJ) and ending with their suggested model (Katamari-v1). (DF = Decision Forest, DPM = Deformable Parts Model, DN = Deep Neural Network; I = Inria Dataset, C = Caltech Dataset)*

|

Instance Normalization: The Missing Ingredient for Fast Stylization

Dmitry Ulyanov and Andrea Vedaldi and Victor Lempitsky

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/07/27 (9 years ago)

Abstract: It this paper we revisit the fast stylization method introduced in Ulyanov et. al. (2016). We show how a small change in the stylization architecture results in a significant qualitative improvement in the generated images. The change is limited to swapping batch normalization with instance normalization, and to apply the latter both at training and testing times. The resulting method can be used to train high-performance architectures for real-time image generation. The code will is made available on github.

more

less

Dmitry Ulyanov and Andrea Vedaldi and Victor Lempitsky

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/07/27 (9 years ago)

Abstract: It this paper we revisit the fast stylization method introduced in Ulyanov et. al. (2016). We show how a small change in the stylization architecture results in a significant qualitative improvement in the generated images. The change is limited to swapping batch normalization with instance normalization, and to apply the latter both at training and testing times. The resulting method can be used to train high-performance architectures for real-time image generation. The code will is made available on github.

|

[link]

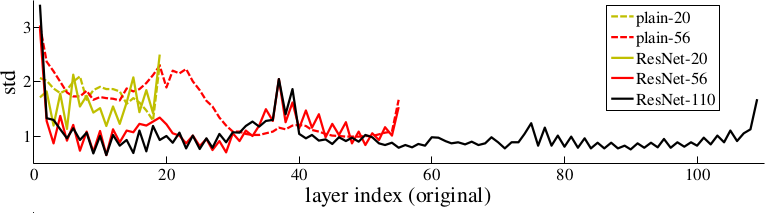

* Style transfer between images works - in its original form - by iteratively making changes to a content image, so that its style matches more and more the style of a chosen style image.

* That iterative process is very slow.

* Alternatively, one can train a single feed-forward generator network to apply a style in one forward pass. The network is trained on a dataset of input images and their stylized versions (stylized versions can be generated using the iterative approach).

* So far, these generator networks were much faster than the iterative approach, but their quality was lower.

* They describe a simple change to these generator networks to increase the image quality (up to the same level as the iterative approach).

### How

* In the generator networks, they simply replace all batch normalization layers with instance normalization layers.

* Batch normalization normalizes using the information from the whole batch, while instance normalization normalizes each feature map on its own.

* Equations

* Let `H` = Height, `W` = Width, `T` = Batch size

* Batch Normalization:

*

* Instance Normalization

*

* They apply instance normalization at test time too (identically).

### Results

* Same image quality as iterative approach (at a fraction of the runtime).

* One content image with two different styles using their approach:

*

|

Stacked Hourglass Networks for Human Pose Estimation

Alejandro Newell and Kaiyu Yang and Jia Deng

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/03/22 (9 years ago)

Abstract: This work introduces a novel Convolutional Network architecture for the task of human pose estimation. Features are processed across all scales and consolidated to best capture the various spatial relationships associated with the body. We show how repeated bottom-up, top-down processing used in conjunction with intermediate supervision is critical to improving the performance of the network. We refer to the architecture as a 'stacked hourglass' network based on the successive steps of pooling and upsampling that are done to produce a final set of estimates. State-of-the-art results are achieved on the FLIC and MPII benchmarks outcompeting all recent methods.

more

less

Alejandro Newell and Kaiyu Yang and Jia Deng

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.CV

First published: 2016/03/22 (9 years ago)

Abstract: This work introduces a novel Convolutional Network architecture for the task of human pose estimation. Features are processed across all scales and consolidated to best capture the various spatial relationships associated with the body. We show how repeated bottom-up, top-down processing used in conjunction with intermediate supervision is critical to improving the performance of the network. We refer to the architecture as a 'stacked hourglass' network based on the successive steps of pooling and upsampling that are done to produce a final set of estimates. State-of-the-art results are achieved on the FLIC and MPII benchmarks outcompeting all recent methods.

|

[link]

Official code: https://github.com/anewell/pose-hg-train

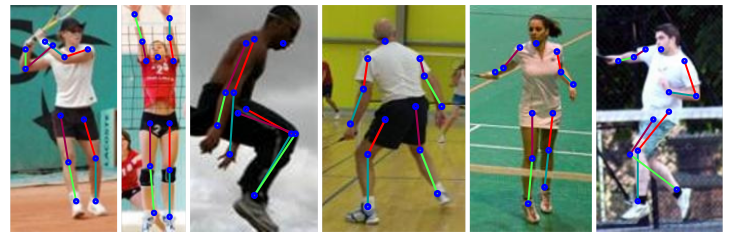

* They suggest a new model architecture for human pose estimation (i.e. "lay a skeleton over a person").

* Their architecture is based progressive pooling followed by progressive upsampling, creating an hourglass form.

* Input are images showing a person's body.

* Outputs are K heatmaps (for K body joints), with each heatmap showing the likely position of a single joint on the person (e.g. "akle", "wrist", "left hand", ...).

### How

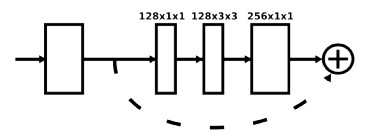

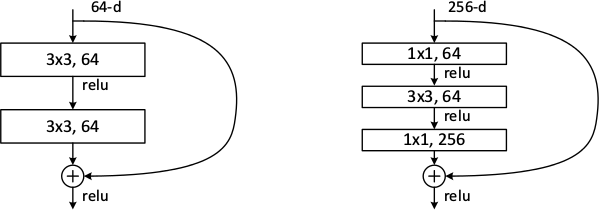

* *Basic building block*

* They use residuals as their basic building block.

* Each residual has three layers: One 1x1 convolution for dimensionality reduction (from 256 to 128 channels), a 3x3 convolution, a 1x1 convolution for dimensionality increase (back to 256).

* Visualized:

*

* *Architecture*

* Their architecture starts with one standard 7x7 convolutions that has strides of (2, 2).

* They use MaxPooling (2x2, strides of (2, 2)) to downsample the images/feature maps.

* They use Nearest Neighbour upsampling (factor 2) to upsample the images/feature maps.

* After every pooling step they add three of their basic building blocks.