
Value Iteration Networks

Tamar, Aviv and Levine, Sergey and Abbeel, Pieter

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp


Originally posted on my Github repo [paper-notes](https://github.com/karpathy/paper-notes/blob/master/vin.md).

# Value Iteration Networks

By Berkeley group: Aviv Tamar, Yi Wu, Garrett Thomas, Sergey Levine, and Pieter Abbeel

This paper introduces a policy network architecture for RL tasks that has an embedded differentiable *planning module*, trained end-to-end. It hence falls into a category of fun papers that take explicit algorithms, make them differentiable, embed them in a larger neural net, and train everything end-to-end.

**Observation**: in most RL approaches the policy is a "reactive" controller that internalizes into its weights actions that historically led to high rewards.

**Insight**: To improve the inductive bias of the model, embed a specifically-structured neural net planner into the policy. In particular, the planner runs the Value Iteration algorithm, which can be implemented with a ConvNet. So this is kind of like a model-based approach trained with model-free RL, or something. Lol.

NOTE: This is very different from the more standard/obvious approach of learning a separate neural network environment dynamics model (e.g. with regression), fixing it, and then using a planning algorithm over this intermediate representation. This would not be end-to-end because we're not backpropagating the end objective through the full model but rely on auxiliary objectives (e.g. log prob of a state given previous state and action when training a dynamics model), and in practice also does not work well.

NOTE2: A recurrent agent (e.g. with an LSTM policy), or a feedforward agent with a sufficiently deep network trained in a model-free setting has some capacity to learn planning-like computation in its hidden states. However, this is nowhere near as explicit as in this paper, since here we're directly "baking" the planning compute into the architecture. It's exciting.

## Value Iteration

Value Iteration is an algorithm for computing the optimal value function/policy $V^*, \pi^*$ and involves turning the Bellman equation into a recurrence:

This iteration converges to $V^*$ as $n \rightarrow \infty$, which we can use to behave optimally (i.e. the optimal policy takes actions that lead to the most rewarding states, according to $V^*$).

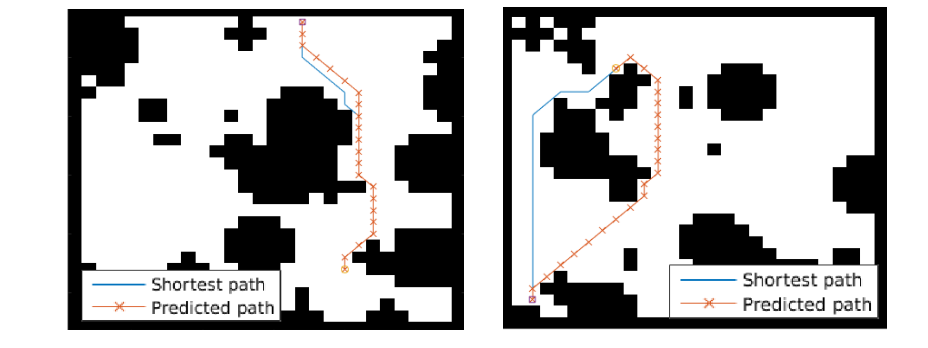
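Written out explicitly, a standard textbook form of this recurrence is the following. This is a sketch: the discount factor $\gamma$ and the intermediate state-action values $Q_n$ are the usual conventions and are assumed here rather than copied from the post.

$$Q_n(s,a) = R(s,a) + \gamma \sum_{s'} P(s'|s,a)\, V_n(s'), \qquad V_{n+1}(s) = \max_a Q_n(s,a)$$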

## Grid-world domain

The paper ends up running the model on several domains, but for the sake of an effective example consider the grid-world task where the agent is at some particular position in a 2D grid and has to reach a specific goal state while also avoiding obstacles. Here is an example of the toy task:

The agent gets a reward +1 in the goal state, -1 in obstacles (black), and -0.01 for each step (so that the shortest path to the goal is an optimal solution).

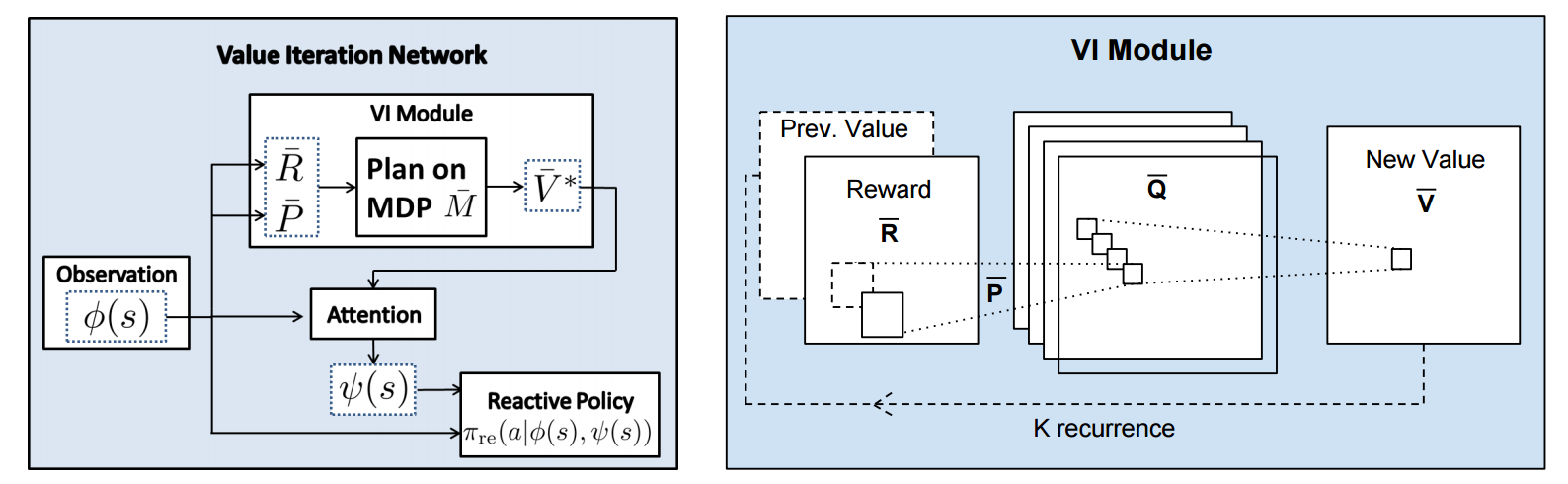

## VIN model

The agent is implemented in a very straightforward manner as a single neural network trained with TRPO (Policy Gradients with a KL constraint on predictive action distributions over a batch of trajectories). So the only loss function used is to maximize expected reward, as is standard in model-free RL. However, the policy network of the agent has a very specific structure since it (internally) runs value iteration.

First, there's the core Value Iteration **(VI) Module** which runs the recurrence formula (reproducing again):

The input to this recurrence are the two arrays R (the reward array, reward for each state) and P (the dynamics array, the probabilities of transitioning to nearby states with each action), which are of course unknown to the agent, but can be predicted with neural networks as a function of the current state. This is a little funny because the networks take a _particular_ state **s** and are internally (during the forward pass) predicting the rewards and dynamics for all states and actions in the entire environment. Notice, extremely importantly and once again, that at no point are the reward and dynamics functions explicitly regressed to the observed transitions in the environment. They are just arrays of numbers that plug into value iteration recurrence module.

But anyway, once we have **R,P** arrays, in the Grid-world above due to the local connectivity, value iteration can be implemented with a repeated application of convolving **P** over **R**, as these filters effectively *diffuse* the estimated reward function (**R**) through the dynamics model (**P**), followed by max pooling across the actions. If **P** is not a function of the state, it would simply be the filters in the Conv layer. Notice that posing this as convolution also assumes that the env dynamics are position-invariant. See the diagram below on the right:

Once the array of numbers that we interpret as holding the estimated $V^*$ is computed after running **K** steps of the recurrence (K is fixed beforehand; for example, for a 16x16 map it is 20, since that's a bit more than the number of steps needed to diffuse rewards across the entire map), we "pluck out" the state-action values $Q(s,.)$ at the state the agent happens to currently be in (by an "attention" operator $\psi$), (optionally) append these Q values to the feedforward representation of the current state $\phi(s)$, and finally predict the action distribution.
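To make the "convolve, then max over actions" idea concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes hand-written deterministic 3x3 move kernels (in the paper these filters are learned), a reward map given directly rather than predicted by a network, and a discount `gamma`; the names `conv2d_same`, `vi_module` and `MOVE_KERNELS` are made up for illustration.

```python
import numpy as np

def conv2d_same(x, k):
    """3x3 'same' cross-correlation of a 2D map x with kernel k (zero padding)."""
    h, w = x.shape
    padded = np.pad(x, 1)  # zero border, so moves off the grid see value 0
    out = np.zeros_like(x, dtype=float)
    for di in range(3):
        for dj in range(3):
            out += k[di, dj] * padded[di:di + h, dj:dj + w]
    return out

def vi_module(reward, kernels, num_iters, gamma=0.99):
    """Run num_iters >= 1 steps of value iteration as conv + max over actions.

    reward:  (H, W) estimated reward map R
    kernels: (A, 3, 3) one local transition filter per action (stand-in for P)
    Returns the value map V of shape (H, W) and Q of shape (A, H, W).
    """
    value = np.zeros_like(reward, dtype=float)
    for _ in range(num_iters):
        # One Q channel per action: reward plus discounted, diffused value.
        q = np.stack([reward + gamma * conv2d_same(value, k) for k in kernels])
        value = q.max(axis=0)  # max pooling across the action channels
    return value, q

# Hypothetical deterministic dynamics: up, down, left, right.
MOVE_KERNELS = np.zeros((4, 3, 3))
MOVE_KERNELS[0, 0, 1] = 1.0  # up:    next state is (i-1, j)
MOVE_KERNELS[1, 2, 1] = 1.0  # down:  next state is (i+1, j)
MOVE_KERNELS[2, 1, 0] = 1.0  # left:  next state is (i, j-1)
MOVE_KERNELS[3, 1, 2] = 1.0  # right: next state is (i, j+1)
```

The attention step then amounts to indexing `q[:, i, j]` at the agent's current cell `(i, j)` and feeding those numbers to the final action predictor.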

## Experiments

**Baseline 1**: A vanilla ConvNet policy trained with TRPO. [(50 3x3 filters)\*2, 2x2 max pool, (100 3x3 filters)\*3, 2x2 max pool, FC(100), FC(4), Softmax].

**Baseline 2**: A fully convolutional network (FCN), 3 layers (with a filter that spans the whole image), of 150, 100, 10 filters. i.e. slightly different and perhaps a bit more domain-appropriate ConvNet architecture.

**Curriculum** is used during training where easier environments are trained on first. This is claimed to work better but not quantified in tables. Models are trained with TRPO, RMSProp, implemented in Theano.

Results when training on **5000** random grid-world instances (hey isn't that quite a bit low?):

TLDR VIN generalizes better.

The authors also run the model on the **Mars Rover Navigation** dataset (wait what?), a **Continuous Control** 2D path planning dataset, and the **WebNav Challenge**, a language-based search task on a graph (of a subset of Wikipedia). Skipping these because they don't add _too_ much to the core cool idea of the paper.

## Misc

**The good**: I really like this paper because the core idea is cute (the planner is *embedded* in the policy and trained end-to-end), novel (I don't think I saw this idea executed on so far elsewhere), the paper is well-written and clear, and the supplementary materials are thorough.

**On the approach**: Significant challenges remain to make this approach more practically viable, but it also seems that much more exciting followup work can be done in this framework. I wish the authors discussed this more in the conclusion. In particular, it seems that one has to explicitly encode the environment connectivity structure in the internal model $\bar{M}$. How much of a problem is this and what could be done about it? Or how could we do the planning in higher-level abstract spaces instead of the actual low-level state space of the problem? Also, it seems that a potentially nice feature of this approach is that the agent could dynamically "decide" on a reward function at runtime, and the VI module can diffuse it through the dynamics and hence do the planning. A potentially interesting outcome is that the agent could utilize this kind of computation so that an LSTM controller could learn to "emit" reward function subgoals and the VI planner computes how to meet them. A nice/clean division of labor one could hope for in principle.

**The experiments**. Unfortunately, I'm not sure why the authors preferred breadth of experiments and sacrificed depth of experiments. I would have much preferred a more in-depth analysis of the gridworld environment. For instance:

- Only 5,000 training examples are used for training, which seems very little. Presumably, the baselines get stronger as you increase the number of training examples?

- Lack of visualizations: Does the model actually learn the "correct" rewards **R** and dynamics **P**? The authors could inspect these manually and correlate them to the actual model. This would have been reaaaallllyy cool. I also wouldn't expect the model to exactly learn these, but who knows.

- How does the model compare to the baselines in the number of parameters? or FLOPS? It seems that doing VI for 30 steps at each single iteration of the algorithm should be quite expensive.

- The authors should study the performance as a function of the number of recurrences **K**. A particularly funny experiment would be K = 1, where the model would be effectively predicting **V*** directly, without planning. What happens?

- If the output of VI $\psi(s)$ is concatenated to the state parameters, are these Q values actually used? What if all the weights to these numbers are zero in the trained models?

- Why do the authors only evaluate success rate when the training criterion is expected reward?

Overall a very cute idea, well executed as a first step and well explained, with a bit of unsatisfying lack of depth in the experiments in favor of breadth that doesn't add all that much.


|

Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning

Gal, Yarin and Ghahramani, Zoubin

arXiv e-Print archive - 2015 via Local Bibsonomy

Keywords: dblp


This paper presents an interpretation of dropout training as performing approximate Bayesian learning in a deep Gaussian process (DGP) model. This connection suggests a very simple way of obtaining, for networks trained with dropout, estimates of the model's output uncertainty. This estimate is computed from an ensemble of networks, each obtained by sampling a new dropout mask.
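As a rough illustration of the ensemble-over-masks idea, the sketch below keeps dropout active at prediction time and summarizes several stochastic forward passes by their mean and variance. It assumes a toy one-hidden-layer ReLU network with given weights; the function name and parameters are made up for illustration, and the paper's additional noise-precision term in the predictive variance is left out.

```python
import numpy as np

def mc_dropout_predict(x, w1, b1, w2, b2, drop_prob=0.5, num_samples=100, seed=0):
    """Mean and variance of outputs over randomly sampled dropout masks.

    x: (N, D) inputs; w1: (D, H); b1: (H,); w2: (H, O); b2: (O,).
    """
    rng = np.random.default_rng(seed)
    keep = 1.0 - drop_prob
    outputs = []
    for _ in range(num_samples):
        hidden = np.maximum(0.0, x @ w1 + b1)
        # Fresh Bernoulli mask per pass, rescaled so the expectation is unchanged.
        mask = rng.binomial(1, keep, size=hidden.shape) / keep
        outputs.append((hidden * mask) @ w2 + b2)
    outputs = np.stack(outputs)  # (num_samples, N, O)
    return outputs.mean(axis=0), outputs.var(axis=0)
```

A large variance for a given input suggests the sampled networks disagree there, which is the uncertainty signal the summary refers to.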

#### My two cents

This is a really nice and thought provoking contribution to our understanding of dropout. Unfortunately, the paper in fact doesn't provide a lot of comparisons with either other ways of estimating the predictive uncertainty of deep networks, or to other approximate inference schemes in deep GPs (actually, see update below). The qualitative examples provided however do suggest that the uncertainty estimate isn't terrible.

Irrespective of the quality of the uncertainty estimate suggested here, I find the observation itself really valuable. Perhaps future research will then shed light on how useful that method is compared to other approaches, including Bayesian dark knowledge \cite{conf/nips/BalanRMW15}.

`Update: On September 27th`, the authors uploaded to arXiv a new version that now includes comparisons with 2 alternative Bayesian learning methods for deep networks, specifically the stochastic variational inference approach of Graves and probabilistic back-propagation of Hernandez-Lobato and Adams. Dropout actually does very well against these baselines and, across datasets, is almost always amongst the best performing methods!

|

Learning End-to-end Video Classification with Rank-Pooling

Fernando, Basura and Gould, Stephen

International Conference on Machine Learning - 2016 via Local Bibsonomy

Keywords: dblp


This paper describes how rank pooling, a very recent approach for pooling representations organized in a sequence $\\{{\bf v}_t\\}_{t=1}^T$, can be used in an end-to-end trained neural network architecture.

Rank pooling is an alternative to average and max pooling for sequences, but with the distinctive advantage of maintaining some order information from the sequence. Rank pooling first solves a regularized (linear) support vector regression (SVR) problem where the inputs are the vector representations ${\bf v}_t$ in the sequence and the target is the corresponding index $t$ of that representation in the sequence (see Equation 5). The output of rank pooling is then simply the linear regression parameters $\bf{u}$ learned for that sequence. Because of the way ${\bf u}$ is trained, we can see that ${\bf u}$ will capture order information, as successful training would imply that ${\bf u}^\top {\bf v}_t < {\bf u}^\top {\bf v}_{t'} $ if $t < t'$. See [this paper](https://www.robots.ox.ac.uk/~vgg/rg/papers/videoDarwin.pdf) for more on rank pooling.
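The idea of "regress the time index, keep the weights" can be sketched in a few lines. Note the swap: the paper uses regularized support vector regression, whereas this sketch uses ridge regression (squared loss, no bias term) because it has a closed form. The function name `rank_pool` and the `reg` parameter are assumptions for illustration.

```python
import numpy as np

def rank_pool(features, reg=1e-3):
    """Pool a sequence of feature vectors into a single vector u.

    features: (T, D) array with one row v_t per time step.
    Fits u so that u . v_t approximates t (ridge regression stand-in for SVR)
    and returns u, which serves as the pooled representation.
    """
    num_steps, dim = features.shape
    targets = np.arange(1, num_steps + 1, dtype=float)
    # Closed-form ridge solution: (X^T X + reg * I) u = X^T t
    gram = features.T @ features + reg * np.eye(dim)
    return np.linalg.solve(gram, features.T @ targets)
```

When the fit succeeds, the scores `features @ u` increase with the time index, which is how order information ends up in `u`.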

While previous work has focused on using rank pooling on hand-designed and fixed representations, this paper proposes to use ConvNet features (pre-trained on ImageNet) for the representation and backpropagate through rank pooling to fine-tune the ConvNet features. Since the output of rank pooling corresponds to an argmin operation, passing gradients through this operation is not as straightforward as for average or max pooling. However, it turns out that if the objective being minimized (in our case regularized SVR) is twice differentiable, gradients with respect to its argmin can be computed (see Lemmas 1 and 2). The authors derive the gradient for rank pooling (Equation 21). Finally, since its gradient requires inverting a matrix (corresponding to a hessian), the authors propose to either use an efficient procedure for computing it by exploiting properties of sums of rank-one matrices (see Lemma 3) or to simply use an approximation based on using a diagonal hessian.
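The argmin-differentiation result follows from the implicit function theorem. As a sketch in generic notation (the symbols $f$, $x$, $y$ and $g$ are chosen here for illustration and may not match the paper's), let $g(x) = \arg\min_y f(x, y)$ with $f$ twice differentiable and $f_{YY}$ invertible at the minimizer. Differentiating the stationarity condition $f_Y(x, g(x)) = 0$ with respect to $x$ gives:

$$f_{XY}(x, g(x)) + f_{YY}(x, g(x))\, g'(x) = 0 \quad\Rightarrow\quad g'(x) = -f_{YY}(x, g(x))^{-1} f_{XY}(x, g(x))$$

The inverse of $f_{YY}$ is the Hessian inversion that the efficient procedure and the diagonal approximation are meant to make cheap.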

In experiments on two small scale video activity recognition datasets (UCF-Sports and Hollywood2), the authors show that fine-tuning the ConvNet features significantly improves the performance of rank pooling and makes it superior to max and average pooling.

**My two cents**

This paper was eye opening for me, first because I did not realize that one could backpropagate through an operation corresponding to an argmin that doesn't have a closed form solution (though apparently this paper isn't the first to make that observation). Moreover, I did not know about rank pooling, which itself is a really thought provoking approach to pooling representations in a way that preserves some organizational information about the original representations.

I wonder how sensitive the results are to the value of the regularization constant of the SVR problem. The authors mention some theoretical guarantees on the stability of the solution found by SVR in general, but intuitively I would expect that the regularization constant would play a large role in the stability.

I'll be looking forward to any future attempts to increase the speed of rank pooling (or any similar method). Indeed, as the authors mention, it is currently too slow to be used on the larger video datasets that are currently available.

Code for computing rank pooling (though not for computing its gradients) seems to be available [here](https://bitbucket.org/bfernando/videodarwin).


|

Dialog-based Language Learning

Weston, Jason

arXiv e-Print archive - 2016 via Local Bibsonomy

Keywords: dblp


This paper investigates different paradigms for learning how to answer natural language queries through various forms of feedback. Most interestingly, it investigates whether a model can learn to answer questions correctly when the feedback is presented purely in the form of a sentence (e.g. "Yes, that's right", "Yes, that's correct", "No, that's incorrect", etc.). This latter form of feedback is particularly hard to leverage, since the model has to somehow learn that the word "Yes" is a sign of a positive feedback, but not the word "No".

Normally, we'd train a model to directly predict the correct answer to questions based on feedback provided by an expert that always answers correctly. "Imitating" this expert just corresponds to regular supervised learning.

The paper however explores other variations on this learning scenario. Specifically, they consider 3 dimensions of variations.

The first dimension of variation is who is providing the answers. Instead of an expert (who is always right), the paper considers the case where the model is instead observing a different, "imperfect" expert whose answers come from a fixed policy that answers correctly only a fraction of the time (the paper looked at 0.5, 0.1 and 0.01). Note that the paper refers to these answers as coming from "the learner" (which should be the model), but since the policy is fixed and actually doesn't depend on the model, I think one can also think of it as coming from another agent, which I'll refer to as the imperfect expert (I think this is also known as "off policy learning" in the RL world).

The second dimension of variation on the learning scenario that is explored is in the nature of the "supervision type" (i.e. nature of the labels). There are 10 of them (see Figure 1 for a nice illustration). In addition to the real expert's answers only (Type 1), the paper considers other types that instead involve the imperfect expert and fall in one of the two categories below:

1. Explicit positive / negative rewards based on whether the imperfect expert's answer is correct.

2. Various forms of natural language responses to the imperfect expert's answers, which vary from worded positive/negative feedback, to hints, to mentions of the supporting fact for the correct answer.

Also, mixtures of the above are considered.

Finally, the third dimension of variation is how the model learns from the observed data. In addition to the regular supervised learning approach of imitating the observed answers (whether it's from the real expert or the imperfect expert), two other distinct approaches are considered, each inspired by the two categories of feedback mentioned above:

1. Reward-based imitation: this simply corresponds to ignoring answers from the imperfect expert for which the reward is not positive (as for when the answers come from the regular expert, they are always used I believe).

2. Forward prediction: this consists in predicting the natural language feedback to the answer of the imperfect expert. This is essentially treated as a classification problem over possible feedback (with negative sampling, since there are many possible feedback responses), that leverages a soft-attention architecture over the answers the expert could have given, which is also informed by the actual answer that was given (see Equation 2).

Also, a mixture of both of these learning approaches is considered.
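Reward-based imitation, as described above, boils down to a filter on the training data. A minimal sketch, assuming each observed example carries a question, the imperfect expert's answer and a numeric reward (the `Example` type and function name are hypothetical):

```python
from typing import List, NamedTuple, Tuple

class Example(NamedTuple):
    question: str
    answer: str   # answer given by the (imperfect) expert
    reward: int   # explicit reward signal, positive if the answer was correct

def reward_based_imitation_set(examples: List[Example]) -> List[Tuple[str, str]]:
    """Keep only positively rewarded answers as supervised (question, answer) pairs."""
    return [(ex.question, ex.answer) for ex in examples if ex.reward > 0]
```

The surviving pairs are then used exactly as in regular supervised imitation.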

The paper thoroughly explores experimentally all these dimensions, on two question-answering datasets (single supporting fact bAbI dataset and MovieQA). The neural net model architectures used are all based on memory networks. Without much surprise, imitating the true expert performs best. But quite surprisingly, forward prediction leveraging only natural language feedback to an imperfect expert often performs competitively compared to reward-based imitation.

#### My two cents

This is a very thought provoking paper! I very much like the idea of exploring how a model could learn a task based on instructions in natural language. This makes me think of this work \cite{conf/iccv/BaSFS15} on using zero-shot learning to learn a model that can produce a visual classifier based on a description of what must be recognized.

Another component that is interesting here is studying how a model can learn without knowing a priori whether a feedback is positive or negative. This sort of makes me think of [this work](http://www.thespermwhale.com/jaseweston/ram/papers/paper_16.pdf) (which is also close to this work \cite{conf/icann/HochreiterYC01}) where a recurrent network is trained to process a training set (inputs and targets) to later produce another model that's applied on a test set, without the RNN explicitly knowing what the training gradients are on this other model's parameters. In other words, it has to effectively learn to execute (presumably a form of) gradient descent on the other model's parameters.

I find all such forms of "learning to learn" incredibly interesting.

Coming back to this paper, unfortunately I've yet to really understand why forward prediction actually works. An explanation is given, that is that "this is because there is a natural coherence to predicting true answers that leads to greater accuracy in forward prediction" (see paragraph before conclusion). I can sort of understand what is meant by that, but it would be nice to somehow dig deeper into this hypothesis. Or I might be misunderstanding something here, since the paper mentions that changing how wrong answers are sampled yields a "worse" accuracy of 80% on Task 2 for the bAbI dataset and a policy accuracy of 0.1, but Table 1 reports an accuracy of 54% for this case (which is not better, but worse).

Similarly, I'd like to better understand Equation 2, specifically the β* term, and why exactly this is an appropriate form of incorporating which answer was given and why it works. I really was unable to form an intuition around Equation 2.

In any case, I really like that there's work investigating this theme and hope there can be more in the future!

|

A Neural Algorithm of Artistic Style

Leon A. Gatys and Alexander S. Ecker and Matthias Bethge

arXiv e-Print archive - 2015 via Local arXiv

Keywords: cs.CV, cs.NE, q-bio.NC

First published: 2015/08/26

Abstract: In fine art, especially painting, humans have mastered the skill to create unique visual experiences through composing a complex interplay between the content and style of an image. Thus far the algorithmic basis of this process is unknown and there exists no artificial system with similar capabilities. However, in other key areas of visual perception such as object and face recognition near-human performance was recently demonstrated by a class of biologically inspired vision models called Deep Neural Networks. Here we introduce an artificial system based on a Deep Neural Network that creates artistic images of high perceptual quality. The system uses neural representations to separate and recombine content and style of arbitrary images, providing a neural algorithm for the creation of artistic images. Moreover, in light of the striking similarities between performance-optimised artificial neural networks and biological vision, our work offers a path forward to an algorithmic understanding of how humans create and perceive artistic imagery.


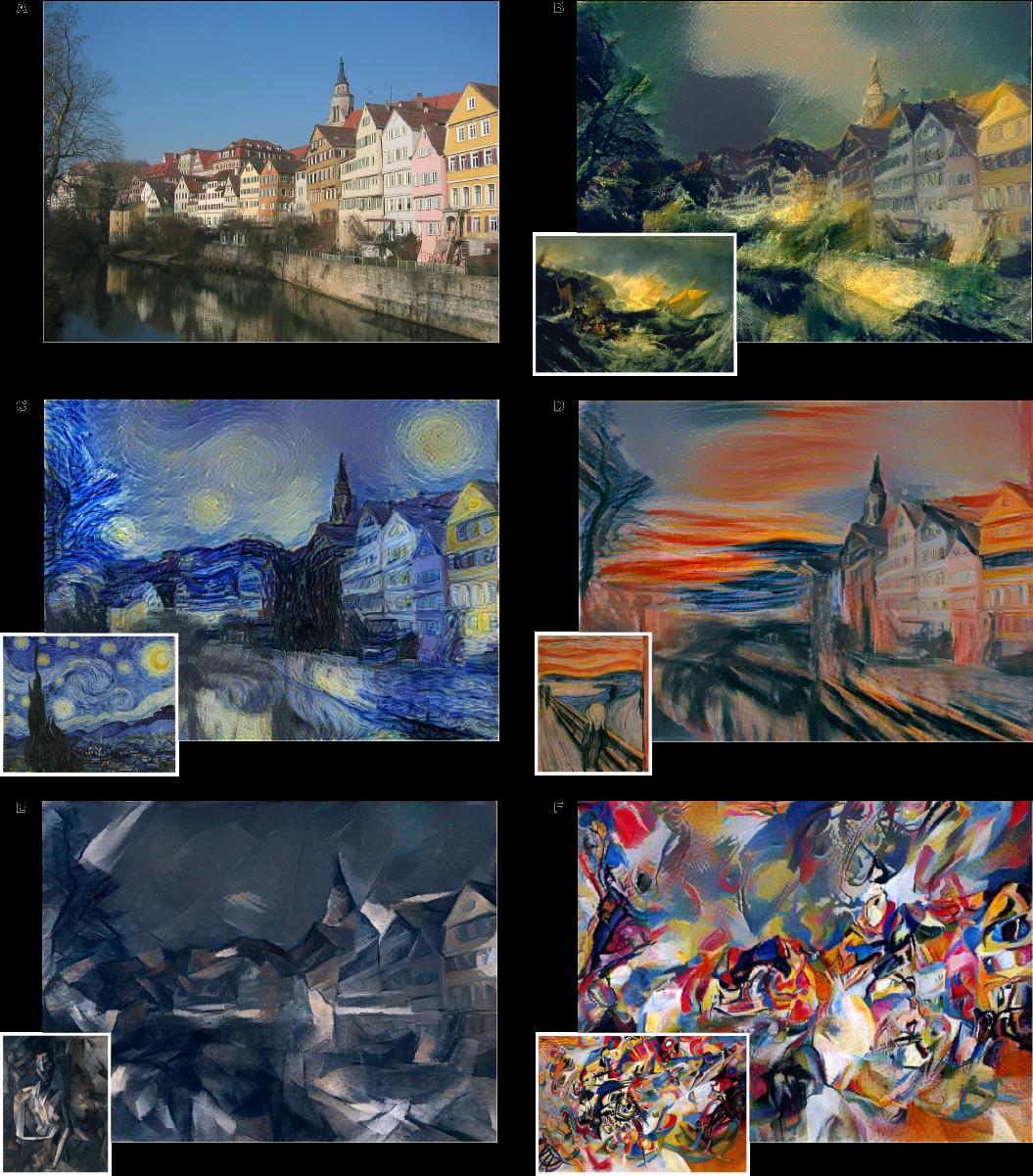

* The paper describes a method to separate content and style from each other in an image.

* The style can then be transferred to a new image.

* Examples:

* Let a photograph look like a painting of van Gogh.

* Improve a dark beach photo by taking the style from a sunny beach photo.

### How

* They use the pretrained 19-layer VGG net as their base network.

* They assume that two images are provided: One with the *content*, one with the desired *style*.

* They feed the content image through the VGG net and extract the activations of the last convolutional layer. These activations are called the *content representation*.

* They feed the style image through the VGG net and extract the activations of all convolutional layers. They transform each layer to a *Gram Matrix* representation. These Gram Matrices are called the *style representation*.

* How to calculate a *Gram Matrix*:

* Take the activations of a layer. That layer will contain some convolution filters (e.g. 128), each one having its own activations.

* Convert each filter's activations to a (1-dimensional) vector.

* Pick all pairs of filters. Calculate the scalar product of both filters' vectors.

* Add the scalar product result as an entry to a matrix of size `#filters x #filters` (e.g. 128x128).

* Repeat that for every pair to get the Gram Matrix.

* The Gram Matrix roughly represents the *texture* of the image.

* Now you have the content representation (activations of a layer) and the style representation (Gram Matrices).

* Create a new image of the size of the content image. Fill it with random white noise.

* Feed that image through VGG to get its content representation and style representation. (This step will be repeated many times during the image creation.)

* Make changes to the new image using gradient descent to optimize a loss function.

* The loss function has two components:

* The mean squared error between the new image's content representation and the previously extracted content representation.

* The mean squared error between the new image's style representation and the previously extracted style representation.

* Add up both components to get the total loss.

* Give both components a weight to alter for more/less style matching (at the expense of content matching).

*One example input image with different styles added to it.*
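The Gram Matrix recipe and the two-part loss described above can be sketched in NumPy as follows. This is an illustration only: it assumes layer activations are already available as arrays of shape `(filters, height, width)` (extracting them from VGG is not shown), and the function names and weight parameters are made up.

```python
import numpy as np

def gram_matrix(activations):
    """Gram Matrix of one layer: scalar products between all pairs of filters.

    activations: (C, H, W) array, one feature map per filter.
    Returns a (C, C) matrix.
    """
    num_filters = activations.shape[0]
    flat = activations.reshape(num_filters, -1)  # each filter as a 1-D vector
    return flat @ flat.T

def style_transfer_loss(gen_content, target_content,
                        gen_style_layers, target_style_layers,
                        content_weight=1.0, style_weight=1.0):
    """Weighted sum of the content MSE and the per-layer Gram Matrix MSEs."""
    content_loss = np.mean((gen_content - target_content) ** 2)
    style_loss = sum(
        np.mean((gram_matrix(g) - gram_matrix(t)) ** 2)
        for g, t in zip(gen_style_layers, target_style_layers)
    )
    return content_weight * content_loss + style_weight * style_loss
```

In the actual method this loss is minimized with respect to the pixels of the generated image, which requires a framework with automatic differentiation rather than plain NumPy.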

-------------------------

### Rough chapter-wise notes

* Page 1

* A painted image can be decomposed in its content and its artistic style.

* Here they use a neural network to separate content and style from each other (and to apply that style to an existing image).

* Page 2

* Representations get more abstract as you go deeper in networks, hence they should more resemble the actual content (as opposed to the artistic style).

* They call the feature responses in higher layers *content representation*.

* To capture style information, they use a method that was originally designed to capture texture information.

* They somehow build a feature space on top of the existing one, that is somehow dependent on correlations of features. That leads to a "stationary" (?) and multi-scale representation of the style.

* Page 3

* They use VGG as their base CNN.

* Page 4

* Based on the extracted style features, they can generate a new image, which has equal activations in these style features.

* The new image should match the style (texture, color, localized structures) of the artistic image.

* The style features become more and more abstract with higher layers. They call that multi-scale representation the *style representation*.

* The key contribution of the paper is a method to separate style and content representation from each other.

* These representations can then be used to change the style of an existing image (by changing it so that its content representation stays the same, but its style representation matches the artwork).

* Page 6

* The generated images look most appealing if all features from the style representation are used. (The lower layers tend to reflect small features, the higher layers tend to reflect larger features.)

* Content and style can't be separated perfectly.

* Their loss function has two terms, one for content matching and one for style matching.

* The terms can be increased/decreased to match content or style more.

* Page 8

* Previous techniques work only on limited or simple domains or used non-parametric approaches (see non-photorealistic rendering).

* Previously neural networks have been used to classify the time period of paintings (based on their style).

* They argue that separating content from style might be useful in many other domains (other than transferring style of paintings to images).

* Page 9

* The style representation is gathered by measuring correlations between activations of neurons.

* They argue that this is somehow similar to what "complex cells" in the primary visual system (V1) do.

* They note that deep convnets seem to automatically learn to separate content from style, probably because it is helpful for style-invariant classification.

* Page 9, Methods

* They use the 19 layer VGG net as their basis.

* They use only its convolutional layers, not the linear ones.

* They use average pooling instead of max pooling, as that produced slightly better results.

* Page 10, Methods

* The information about the image that is contained in layers can be visualized. To do that, extract the features of a layer as the labels, then start with a white noise image and change it via gradient descent until the generated features have minimal distance (MSE) to the extracted features.

* They build a style representation by calculating Gram Matrices for each layer.

* Page 11, Methods

* The Gram Matrix is generated in the following way:

* Convert each filter of a convolutional layer to a 1-dimensional vector.

* For a pair of filters i, j calculate the value in the Gram Matrix by calculating the scalar product of the two vectors of the filters.

* Do that for every pair of filters, generating a matrix of size #filters x #filters. That is the Gram Matrix.

* Again, a white noise image can be changed with gradient descent to match the style of a given image (i.e. minimize MSE between two Gram Matrices).

* That can be extended to match the style of several layers by measuring the MSE of the Gram Matrices of each layer and giving each layer a weighting.

* Page 12, Methods

* To transfer the style of a painting to an existing image, proceed as follows:

* Start with a white noise image.

* Optimize that image with gradient descent so that it minimizes both the content loss (relative to the image) and the style loss (relative to the painting).

* Each distance (content, style) can be weighted to have more or less influence on the loss function.
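In symbols, the quantities in these method notes can be sketched as follows. The notation is assumed for illustration: $F^l$ holds the flattened activations of layer $l$ for the generated image (one row per filter), $P^l$ the same for the content image, $A^l$ the Gram Matrix of the painting at layer $l$, $w_l$ the per-layer weights, and $\alpha$, $\beta$ the content and style weights. Normalization constants are omitted.

$$G^l_{ij} = \sum_k F^l_{ik} F^l_{jk}$$

$$\mathcal{L}_{content} = \sum_{i,j} \left(F^l_{ij} - P^l_{ij}\right)^2, \qquad \mathcal{L}_{style} = \sum_l w_l \sum_{i,j} \left(G^l_{ij} - A^l_{ij}\right)^2$$

$$\mathcal{L}_{total} = \alpha\, \mathcal{L}_{content} + \beta\, \mathcal{L}_{style}$$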

|