
FaceNet: A Unified Embedding for Face Recognition and Clustering

Florian Schroff and Dmitry Kalenichenko and James Philbin

arXiv e-Print archive - 2015 via Local arXiv

Keywords: cs.CV

First published: 2015/03/12 (10 years ago)

Abstract: Despite significant recent advances in the field of face recognition, implementing face verification and recognition efficiently at scale presents serious challenges to current approaches. In this paper we present a system, called FaceNet, that directly learns a mapping from face images to a compact Euclidean space where distances directly correspond to a measure of face similarity. Once this space has been produced, tasks such as face recognition, verification and clustering can be easily implemented using standard techniques with FaceNet embeddings as feature vectors. Our method uses a deep convolutional network trained to directly optimize the embedding itself, rather than an intermediate bottleneck layer as in previous deep learning approaches. To train, we use triplets of roughly aligned matching / non-matching face patches generated using a novel online triplet mining method. The benefit of our approach is much greater representational efficiency: we achieve state-of-the-art face recognition performance using only 128-bytes per face. On the widely used Labeled Faces in the Wild (LFW) dataset, our system achieves a new record accuracy of 99.63%. On YouTube Faces DB it achieves 95.12%. Our system cuts the error rate in comparison to the best published result by 30% on both datasets. We also introduce the concept of harmonic embeddings, and a harmonic triplet loss, which describe different versions of face embeddings (produced by different networks) that are compatible to each other and allow for direct comparison between each other.


FaceNet maps face images directly to $\mathbb{R}^{128}$, where distances correspond to a measure of face similarity. It is trained with a triplet loss function. A triplet is (face of person A, another face of person A, face of a person who is not A). Later, this is called (anchor, positive, negative).

The loss function is inspired by LMNN. The idea is to minimize the distance between the two images of the same person and maximize the distance to the other person's image.

## LMNN

Large Margin Nearest Neighbor (LMNN) learns a pseudo-metric

$$d(x, y) = (x -y) M (x -y)^T$$

where $M$ is a positive semi-definite matrix. The only difference between a pseudo-metric and a metric is that $d(x, y) = 0 \Leftrightarrow x = y$ need not hold: two distinct points may have distance zero.
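A minimal sketch of the distance above, using plain Python lists as row vectors. The function name `mahalanobis_sq` and the two example matrices are hypothetical and chosen only for illustration. The second matrix is positive semi-definite but not positive definite, which is the case where two different points can end up at distance zero.

```python
def mahalanobis_sq(x, y, M):
    """Return (x - y) M (x - y)^T for row vectors x, y and a square matrix M."""
    diff = [a - b for a, b in zip(x, y)]
    n = len(diff)
    # Row vector times matrix: (diff M)_j = sum_i diff_i * M[i][j]
    diff_M = [sum(diff[i] * M[i][j] for i in range(n)) for j in range(n)]
    # Dot product with diff^T gives the scalar distance.
    return sum(dm * d for dm, d in zip(diff_M, diff))


# Illustrative matrices (assumptions, not taken from the paper).
M_identity = [[1.0, 0.0], [0.0, 1.0]]  # recovers the squared Euclidean distance
M_rank_one = [[1.0, 0.0], [0.0, 0.0]]  # ignores the second coordinate

x = [1.0, 2.0]
y = [1.0, 5.0]

d_identity = mahalanobis_sq(x, y, M_identity)
d_rank_one = mahalanobis_sq(x, y, M_rank_one)
```

With `M_rank_one`, `x` and `y` differ but their distance is zero, which is what makes $d$ a pseudo-metric rather than a metric.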

## Curriculum Learning: Triplet selection

Show simple examples first, then increase the difficulty. This is done by selecting the triplets.

They use the triplets which are *hard*. For the positive example, this means the distance between the anchor and the positive example is high. For the negative example this means the distance between the anchor and the negative example is low.

They want to have

$$||f(x_i^a) - f(x_i^p)||_2^2 + \alpha < ||f(x_i^a) - f(x_i^n)||_2^2$$

where $\alpha$ is a margin, $x_i^a$ is the anchor, $x_i^p$ is the positive face example and $x_i^n$ is the negative example. They increase $\alpha$ over time. It is crucial that $f$ maps the images not onto all of $\mathbb{R}^{128}$, but onto the unit sphere. Otherwise one could inflate all distances, and thereby satisfy any margin, by simply scaling the embedding, e.g. $f' = 2 \cdot f$.
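The inequality and the "hard" selection rule can be sketched as follows. This is an illustration under assumptions, not the authors' code: the hinge form `max(0, ...)` turns the inequality into a loss, the helper names are hypothetical, toy 2-D vectors stand in for the 128-D embeddings, and the margin value 0.2 is picked for the example. Selecting the single hardest positive and negative follows the description above; in practice the selection is typically restricted to a mini-batch.

```python
import math


def l2_normalize(v):
    """Project a vector onto the unit sphere."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def sq_dist(u, v):
    """Squared Euclidean distance ||u - v||_2^2."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor, positive, negative, alpha):
    """Zero exactly when d(a, p) + alpha <= d(a, n), positive otherwise."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + alpha)


def hardest_triplet(anchor, positives, negatives):
    """Pick the farthest positive and the closest negative for an anchor."""
    hard_pos = max(positives, key=lambda p: sq_dist(anchor, p))
    hard_neg = min(negatives, key=lambda n: sq_dist(anchor, n))
    return hard_pos, hard_neg


anchor = l2_normalize([1.0, 0.0])
positive = l2_normalize([1.0, 1.0])
easy_negative = l2_normalize([-1.0, 0.0])
hard_negative = l2_normalize([1.0, 0.1])

easy_loss = triplet_loss(anchor, positive, easy_negative, alpha=0.2)
hard_loss = triplet_loss(anchor, positive, hard_negative, alpha=0.2)

# After normalization, rescaling the raw network outputs changes nothing.
scaled_dist = sq_dist(l2_normalize([2.0, 0.0]), l2_normalize([2.0, 2.0]))
```

The easy negative already satisfies the margin, so it contributes no loss, while the negative close to the anchor does. The last line shows why the unit-sphere constraint matters: the distance is unaffected by multiplying the embedding by a constant.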

## Tasks

* **Face verification**: Is this the same person?

* **Face recognition**: Who is this person?

## Datasets

* 99.63% accuracy on Labeled Faces in the Wild (LFW)

* 95.12% accuracy on YouTube Faces DB

## Network

Two models are evaluated: The [Zeiler & Fergus model](http://www.shortscience.org/paper?bibtexKey=journals/corr/ZeilerF13) and an architecture based on the [Inception model](http://www.shortscience.org/paper?bibtexKey=journals/corr/SzegedyLJSRAEVR14).

## See also

* [DeepFace](http://www.shortscience.org/paper?bibtexKey=conf/cvpr/TaigmanYRW14#martinthoma)

|

Generating Visual Explanations

Hendricks, Lisa Anne and Akata, Zeynep and Rohrbach, Marcus and Donahue, Jeff and Schiele, Bernt and Darrell, Trevor

European Conference on Computer Vision - 2016 via Local Bibsonomy

Keywords: dblp


This paper deals with an important problem: making a deep classification system explainable. After the (continuing) success of deep networks, researchers are trying to open the black box, and this work is one of the foremost attempts. The authors use the strength of one deep learning method (a vision-language model) to explain the predictions of another deep learning model (an image classifier). The approach jointly predicts a class label and explains, in natural language, why it predicted that label.

The paper starts with a very important distinction between two basic schools of *explanation* systems - the *introspection* explanation system and the *justification* explanation system. An introspection system looks into the model to get an explanation (e.g., "This is a Western Grebe because filter 2 has a high activation..."). A justification system, on the other hand, justifies the decision by producing sentences that detail how the visual evidence is compatible with the system output (e.g., "This is a Western Grebe because it has red eyes..."). The paper focuses on *justification* explanation systems and proposes a novel one. The authors argue that, unlike a description of an image or a sentence defining a class (not necessarily in the presence of an image), a visual explanation conditioned on an input image explains why the image is classified as a certain category while mentioning only image-relevant features.

The broad outline of the approach is given in Fig. 2 of the paper.

https://i.imgur.com/tta2qDp.png

The first stage consists of a deep convolutional network for classification, which generates a softmax distribution over the classes. As the task is fine-grained bird species classification, it uses a compact bilinear feature representation, which is known to work well for fine-grained classification tasks. The second stage is a stacked LSTM which generates natural language sentences, i.e. explanations justifying the decision of the first stage. The first LSTM of the stack receives the previously generated word. The second LSTM receives the output of the first LSTM along with the image features and the predicted label distribution from the classification network. This LSTM produces the sequence of output words until an "end-of-sentence" token is generated. The intuition behind using the predicted label distribution is that it informs the explanation generation model which words and attributes are more likely to occur in the description.

Two kinds of losses are used for the second stage, *i.e.*, the language model. The first one is termed the *Relevance Loss*, which is the typical sentence generation loss seen in the literature: the sum of cross-entropy losses of the generated words with respect to the ground truth words. Its role is to optimize the alignment between generated and ground truth sentences. However, this loss is not very effective at producing sentences which include class-discriminative information, because class specificity is a global sentence property. This is illustrated with the following example - *whereas a sentence "This is an all black bird with a bright red eye" is class specific to a "Bronzed Cowbird", words and phrases in the sentence, such as "black" or "red eye" are less class discriminative on their own.* As a result, a cross-entropy loss on individual words turns out to be less effective in capturing global sentence properties, of which class specificity is an example.

The authors address this issue by proposing an additional loss, termed the *Discriminative Loss*, which is based on a reinforcement learning paradigm. Before computing the loss, a sentence is sampled. The sentence is passed through an LSTM-based classification network whose task is to produce the ground truth category $C$ given only the sampled sentence. The reward for this operation is simply the probability of the ground truth category $C$ given only the sentence. The intuition is that, for the model to produce an output with a large reward, the generated sentence must include enough information to classify the original image properly. The *Discriminative Loss* is the expectation of the negative of this reward, and a weighted linear combination of the two losses is optimized during training.

My experience in reinforcement learning is limited. However, I must say I did not quite get why sampling of the sentences is required (which called for a special algorithm for backpropagation). If the idea is to see whether a generated sentence can be used to get at the ground truth category, could the last internal state of one of the stacked LSTMs not be used? It would have been better to get some more intuition behind the sampling operation. Another thing which is missing (fairly obvious, but still I felt its absence) is any mention of the loss used in the fine-grained classification network.

The experimentation is rigorous. The proposed method is compared with four different baseline and ablation models - description, definition, explanation-label, explanation-discriminative - with different combinations of the two types of losses, class prediction information, etc. The evaluation metrics also measure different qualities of the generated explanations, specifically image relevance and class relevance. To measure image relevance, METEOR/CIDEr scores of the generated sentences against the ground truth (image based) explanations are computed. To measure class relevance, CIDEr scores against class definition sentences (not necessarily based on the images from the dataset) are computed. The proposed approach consistently performs better than any of the baseline or ablation methods.

I'd specifically mention one experiment where the effect of class conditioning is studied (end of Sec. 5.2). The finding is quite interesting, as it shows that providing or not providing correct class information has a drastic effect on the generated explanations. Giving incorrect class information makes the explanation model hallucinate colors or attributes which are not present in the image but are specific to the class. This raises the question of whether it is worth giving the class information when the classifier is poor in the first place. But I think the answer lies in the observation that row 5 (with class prediction information) in Table 1 is always better than row 4 (no class prediction information). Since row 5 is better than row 4, the classifier must also be reasonable, and this in turn implies that end-to-end training can improve all the stages of a pipeline, which ultimately improves the overall performance of the system too!

In summary, the paper is a very good first step towards explaining intelligent systems and should encourage a lot more effort in this direction.
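To make the two losses of the language model concrete, here is a rough sketch under assumptions. The function names, the Monte Carlo estimate of the expectation over a handful of sampled sentences, and the `weight` parameter are all hypothetical; the inputs are the probabilities a model would produce, supplied here as toy numbers rather than computed by real networks.

```python
import math


def relevance_loss(word_probs):
    """Sum of per-word cross-entropy terms.

    word_probs[t] is the probability the language model assigns to the
    ground-truth word at position t.
    """
    return -sum(math.log(p) for p in word_probs)


def discriminative_loss(rewards):
    """Negative expected reward, estimated by averaging over sampled sentences.

    rewards[k] is the probability that the sentence classifier assigns to
    the ground-truth category C given only the k-th sampled sentence.
    """
    return -sum(rewards) / len(rewards)


def total_loss(word_probs, rewards, weight):
    """Weighted linear combination of the two losses."""
    return relevance_loss(word_probs) + weight * discriminative_loss(rewards)


# Toy numbers, purely illustrative.
word_probs = [0.5, 0.25]   # two ground-truth words
rewards = [0.9, 0.7]       # two sampled sentences

loss_r = relevance_loss(word_probs)
loss_d = discriminative_loss(rewards)
loss = total_loss(word_probs, rewards, weight=0.5)
```

Sampling enters because the reward depends on a whole discrete sentence, so its gradient with respect to the generator cannot be obtained by ordinary backpropagation through the word choices.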

Adam: A Method for Stochastic Optimization

Kingma, Diederik P. and Ba, Jimmy

arXiv e-Print archive - 2014 via Local Bibsonomy

Keywords: dblp


* They suggest a new stochastic optimization method, similar to the existing SGD, Adagrad or RMSProp.

* Stochastic optimization methods have to find parameters that minimize/maximize a stochastic function.

* A function is stochastic (non-deterministic), if the same set of parameters can generate different results. E.g. the loss of different mini-batches can differ, even when the parameters remain unchanged. Even for the same mini-batch the results can change due to e.g. dropout.

* Their method tends to converge faster to optimal parameters than the existing competitors.

* Their method can deal with non-stationary distributions (similar to e.g. SGD, Adadelta, RMSProp).

* Their method can deal with very sparse or noisy gradients (similar to e.g. Adagrad).

### How

* Basic principle

* Standard SGD just updates the parameters based on `parameters = parameters - learningRate * gradient`.

* Adam operates similarly, but adds more "cleverness" to the rule.

* It assumes that the gradient values have means and variances and tries to estimate these values.

* Recall here that the function to optimize is stochastic, so there is some randomness in the gradients.

* The mean is also called "the first moment".

* The (uncentered) variance is also called "the second (raw) moment".

* Then an update rule very similar to SGD would be `parameters = parameters - learningRate * means`.

* They instead use the update rule `parameters = parameters - learningRate * means/sqrt(variances)`.

* They call `means/sqrt(variances)` a 'Signal to Noise Ratio'.

* Basically, if the variance of a specific parameter's gradient is high, it is pretty unclear how it should be changed. So we choose a small step size in the update rule via `learningRate * mean/sqrt(highValue)`.

* If the variance is low, it is easier to predict how far to "move", so we choose a larger step size via `learningRate * mean/sqrt(lowValue)`.

* Exponential moving averages

* In order to approximate the mean and variance values you could simply save the last `T` gradients and then average the values.

* That however is a pretty bad idea, because it can lead to high memory demands (e.g. for millions of parameters in CNNs).

* A simple average also has the disadvantage, that it would completely ignore all gradients before `T` and weight all of the last `T` gradients identically. In reality, you might want to give more weight to the last couple of gradients.

* Instead, they use an exponential moving average, which fixes both problems and simply updates the average at every timestep via the formula `avg = alpha * avg + (1 - alpha) * newValue`.

* Let the gradient at timestep (batch) `t` be `g`, then we can approximate the mean and variance values using:

* `mean = beta1 * mean + (1 - beta1) * g`

* `variance = beta2 * variance + (1 - beta2) * g^2`.

* `beta1` and `beta2` are hyperparameters of the algorithm. Good values for them seem to be `beta1=0.9` and `beta2=0.999`.

* At the start of the algorithm, `mean` and `variance` are initialized to zero-vectors.

* Bias correction

* Initializing the `mean` and `variance` vectors to zero is an easy and logical step, but has the disadvantage that bias is introduced.

* E.g. at the first timestep, the mean of the gradient would be `mean = beta1 * 0 + (1 - beta1) * g`, with `beta1=0.9` then: `mean = 0.1 * g`. So `0.1g`, not `g`. Both the mean and the variance are biased (towards 0).

* This seems pretty harmless, but it can be shown that it lowers the convergence speed of the algorithm by quite a bit.

* So to fix this they perform bias-corrections of the mean and the variance:

* `correctedMean = mean / (1-beta1^t)` (where `t` is the timestep).

* `correctedVariance = variance / (1-beta2^t)`.

* Both formulas are applied at every timestep after the exponential moving averages (they do not influence the next timestep).
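The pieces above (exponential moving averages, bias correction, and the `means/sqrt(variances)` step) can be put together in a small sketch for a single scalar parameter. The function name `adam_step`, the small constant `eps` that guards against division by zero, and the toy objective `f(x) = x^2` are assumptions added for illustration; the default learning rate of 0.001 is likewise an assumed value.

```python
import math


def adam_step(param, grad, mean, variance, t,
              learning_rate=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One update for a scalar parameter; t is the 1-based timestep."""
    # Exponential moving averages of the gradient and the squared gradient.
    mean = beta1 * mean + (1 - beta1) * grad
    variance = beta2 * variance + (1 - beta2) * grad ** 2
    # Bias correction; it does not feed back into the stored averages.
    corrected_mean = mean / (1 - beta1 ** t)
    corrected_variance = variance / (1 - beta2 ** t)
    # Step scaled by the signal-to-noise-like ratio.
    param = param - learning_rate * corrected_mean / (math.sqrt(corrected_variance) + eps)
    return param, mean, variance


# Toy problem (assumption): minimize f(x) = x^2, whose gradient is 2x.
x, mean, variance = 1.0, 0.0, 0.0
trajectory = []
for t in range(1, 11):
    grad = 2 * x
    x, mean, variance = adam_step(x, grad, mean, variance, t)
    trajectory.append(x)
```

At the first timestep the uncorrected mean is only `0.1 * g`, but after bias correction the ratio is close to 1, so the first step has a size of roughly the learning rate regardless of the gradient's scale.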

|

Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks

Alec Radford and Luke Metz and Soumith Chintala

arXiv e-Print archive - 2015 via Local arXiv

Keywords: cs.LG, cs.CV

First published: 2015/11/19 (10 years ago)

Abstract: In recent years, supervised learning with convolutional networks (CNNs) has seen huge adoption in computer vision applications. Comparatively, unsupervised learning with CNNs has received less attention. In this work we hope to help bridge the gap between the success of CNNs for supervised learning and unsupervised learning. We introduce a class of CNNs called deep convolutional generative adversarial networks (DCGANs), that have certain architectural constraints, and demonstrate that they are a strong candidate for unsupervised learning. Training on various image datasets, we show convincing evidence that our deep convolutional adversarial pair learns a hierarchy of representations from object parts to scenes in both the generator and discriminator. Additionally, we use the learned features for novel tasks - demonstrating their applicability as general image representations.


* DCGANs are just a different architecture of GANs.

* In GANs a Generator network (G) generates images. A discriminator network (D) learns to differentiate between real images from the training set and images generated by G.

* DCGANs basically convert the laplacian pyramid technique (many pairs of G and D to progressively upscale an image) to a single pair of G and D.

### How

* Their D: Convolutional networks. No linear layers. No pooling, instead strided layers. LeakyReLUs.

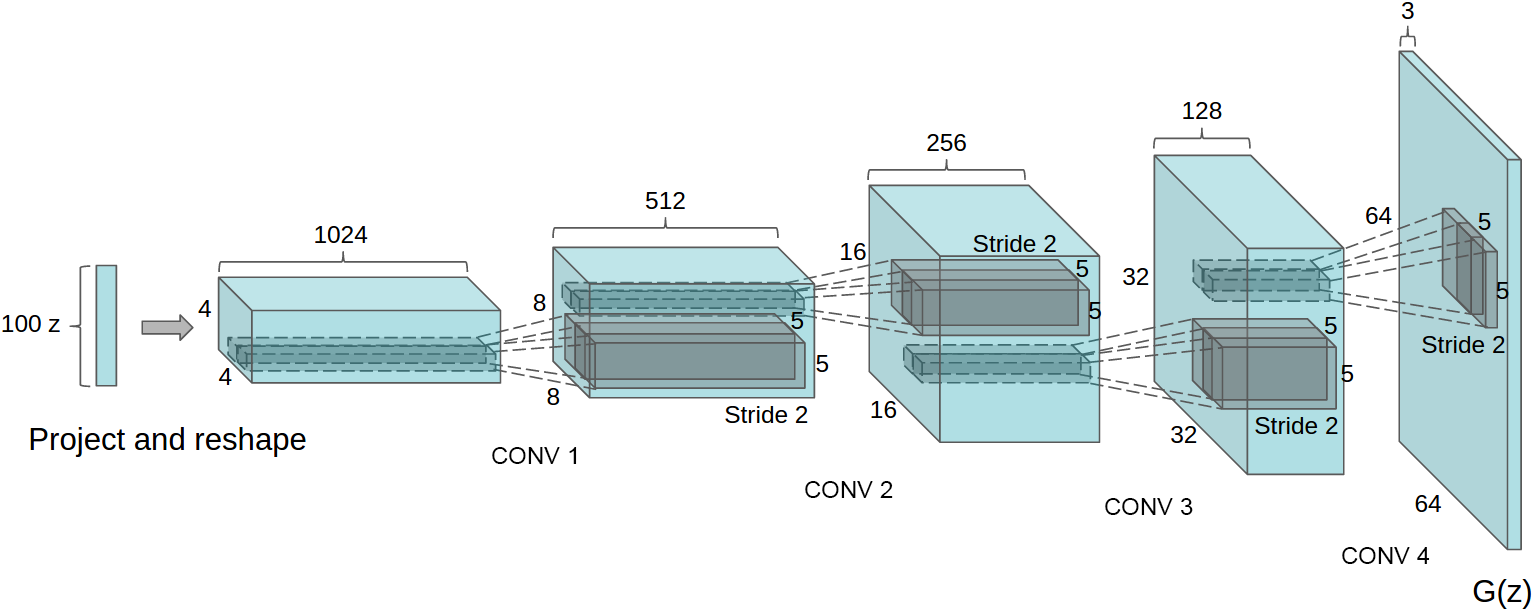

* Their G: Starts with 100d noise vector. Generates with linear layers 1024x4x4 values. Then uses fractionally strided convolutions (move by 0.5 per step) to upscale to 512x8x8. This is continued till Cx32x32 or Cx64x64. The last layer is a convolution to 3x32x32/3x64x64 (Tanh activation).

* The fractionally strided convolutions do basically the same as the progressive upscaling in the laplacian pyramid. So it's basically one laplacian pyramid in a single network and all upscalers are trained jointly leading to higher quality images.

* They use Adam as their optimizer. To decrease instability issues they decreased the learning rate to 0.0002 (from 0.001) and the momentum/beta1 to 0.5 (from 0.9).

*Architecture of G using fractionally strided convolutions to progressively upscale the image.*
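As a rough check of how the spatial size grows through G, the sketch below computes output shapes of stacked fractionally strided (transposed) convolutions. The kernel size 4, stride 2 and padding 1 are assumptions chosen because they double the resolution exactly; they are not stated in the summary. The intermediate channel counts 256 and 128 are also assumed, and this variant lets the final layer upscale to 64x64 as well.

```python
def transposed_conv_size(size, kernel=4, stride=2, padding=1):
    """Spatial output size of a transposed convolution (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def generator_shapes(channels=(1024, 512, 256, 128, 3), start=4):
    """Return (channels, height, width) after each stage of the generator."""
    shapes = []
    size = start
    for i, c in enumerate(channels):
        if i > 0:
            # Every stage after the first doubles the resolution.
            size = transposed_conv_size(size)
        shapes.append((c, size, size))
    return shapes


shapes = generator_shapes()
for shape in shapes:
    print(shape)
```

Each stage halves the number of channels while doubling height and width, which mirrors the progressive upscaling described above.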

### Results

* High quality images. Still with distortions and errors, but at first glance they look realistic.

* Smooth interpolations between generated images are possible (by interpolating between the noise vectors and feeding these interpolations into G).

* The features extracted by D seem to have some potential for unsupervised learning.

* There seems to be some potential for vector arithmetic (using the initial noise vectors) similar to the vector arithmetic with word vectors. E.g. to generate men with sunglasses via `vector(men) + vector(sunglasses)`.


*Generated images, bedrooms.*


*Generated images, faces.*

### Rough chapter-wise notes

* Introduction

* For unsupervised learning, they propose to train a GAN and then reuse the weights of D.

* GANs have traditionally been hard to train.

* Approach and model architecture

* They use for D a convnet without linear layers, without pooling layers (only strides), with LeakyReLUs and Batch Normalization.

* They use for G ReLUs (hidden layers) and Tanh (output).

* Details of adversarial training

* They trained on LSUN, Imagenet-1k and a custom dataset of faces.

* Minibatch size was 128.

* LeakyReLU alpha 0.2.

* They used Adam with a learning rate of 0.0002 and momentum of 0.5.

* They note that a higher momentum led to oscillations.

* LSUN

* 3M images of bedrooms.

* They use an autoencoder based technique to filter out 0.25M near duplicate images.

* Faces

* They downloaded 3M images of 10k people.

* They extracted 350k faces with OpenCV.

* Empirical validation of DCGANs capabilities

* Classifying CIFAR-10 using GANs as a feature extractor

* They train a pair of G and D on Imagenet-1k.

* D's top layer has `512*4*4` features.

* They train an SVM on these features to classify the images of CIFAR-10.

* They achieve a score of 82.8%, better than unsupervised K-Means based methods, but worse than Exemplar CNNs.

* Classifying SVHN digits using GANs as a feature extractor

* They reuse the same pipeline as for CIFAR-10 (features from D, then an SVM) for the StreetView House Numbers dataset.

* They use 1000 SVHN images (with the features from D) to train the SVM.

* They achieve 22.48% test error.

* Investigating and visualizing the internals of the networks

* Walking in the latent space

* They perform walks in the latent space (= interpolate between input noise vectors and generate several images for the interpolation).

* They argue that this might be a good way to detect overfitting/memorizations as those might lead to very sudden (not smooth) transitions.

* Visualizing the discriminator features

* They use guided backpropagation to visualize what the feature maps in D have learned (i.e. to which images they react).

* They can show that their LSUN-bedroom GAN seems to have learned in an unsupervised way what beds and windows look like.

* Forgetting to draw certain objects

* They manually annotated the locations of objects in some generated bedroom images.

* Based on these annotations they estimated which feature maps were mostly responsible for generating the objects.

* They deactivated these feature maps and regenerated the images.

* That decreased the appearance of these objects. It's however not as easy as one feature map deactivation leading to one object disappearing. They deactivated quite a lot of feature maps (200) and the objects were often still quite visible or replaced by artefacts/errors.

* Vector arithmetic on face samples

* Wordvectors can be used to perform semantic arithmetic (e.g. `king - man + woman = queen`).

* The unsupervised representations seem to be useable in a similar fashion.

* E.g. they generated images via G. They then picked several images that showed men with glasses and averaged these images' noise vectors. They did the same with men without glasses and women without glasses. Then they performed on these vectors `men with glasses - men without glasses + women without glasses` to get `woman with glasses`.
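The latent-space operations from these notes (averaging noise vectors, vector arithmetic, and walking between two noise vectors) can be sketched in a few lines. The helper names are hypothetical and toy 2-D vectors stand in for the 100-D noise vectors; the resulting vectors would be fed into G, which is not part of this sketch.

```python
def average(vectors):
    """Component-wise mean of several noise vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def arithmetic(a, b, c):
    """Compute a - b + c component-wise."""
    return [x - y + z for x, y, z in zip(a, b, c)]


def interpolate(z0, z1, steps):
    """Linear walk from z0 to z1 with `steps` points (steps >= 2)."""
    return [[(1 - t) * a + t * b for a, b in zip(z0, z1)]
            for t in (i / (steps - 1) for i in range(steps))]


# Toy noise vectors (assumptions) of images picked by hand for each concept.
men_with_glasses = average([[1.0, 1.0], [1.0, 3.0]])
men_without_glasses = average([[1.0, 0.0], [1.0, 0.0]])
women_without_glasses = average([[-1.0, 0.0], [-1.0, 0.0]])

woman_with_glasses = arithmetic(men_with_glasses,
                                men_without_glasses,
                                women_without_glasses)

walk = interpolate([0.0, 0.0], [1.0, 2.0], steps=3)
```

Averaging over several picked images before doing the arithmetic, as described above, is presumably meant to smooth out the noise of any single sample.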

|

Inverted Residuals and Linear Bottlenecks: Mobile Networks for Classification, Detection and Segmentation

Mark Sandler and Andrew Howard and Menglong Zhu and Andrey Zhmoginov and Liang-Chieh Chen

arXiv e-Print archive - 2018 via Local arXiv

Keywords: cs.CV

First published: 2018/01/13 (8 years ago)

Abstract: In this paper we describe a new mobile architecture, MobileNetV2, that improves the state of the art performance of mobile models on multiple tasks and benchmarks as well as across a spectrum of different model sizes. We also describe efficient ways of applying these mobile models to object detection in a novel framework we call SSDLite. Additionally, we demonstrate how to build mobile semantic segmentation models through a reduced form of DeepLabv3 which we call Mobile DeepLabv3. The MobileNetV2 architecture is based on an inverted residual structure where the input and output of the residual block are thin bottleneck layers opposite to traditional residual models which use expanded representations in the input. MobileNetV2 uses lightweight depthwise convolutions to filter features in the intermediate expansion layer. Additionally, we find that it is important to remove non-linearities in the narrow layers in order to maintain representational power. We demonstrate that this improves performance and provide an intuition that led to this design. Finally, our approach allows decoupling of the input/output domains from the expressiveness of the transformation, which provides a convenient framework for further analysis. We measure our performance on Imagenet classification, COCO object detection, VOC image segmentation. We evaluate the trade-offs between accuracy, and number of operations measured by multiply-adds (MAdd), as well as the number of parameters.


This work expands on prior techniques for designing models that can be stored using fewer parameters and also execute using fewer operations and less memory, both of which are key desiderata for making trained machine learning models usable on phones and other personal devices.

The main contribution of the original MobileNets paper was to introduce the idea of using "factored" decompositions into Depthwise and Pointwise convolutions, which separate the procedures of "pull information from a spatial range" and "mix information across channels" into two distinct steps. In this paper, they continue to use this basic Depthwise infrastructure, but also add a new design element: the inverted-residual linear bottleneck.

The reasoning behind this new layer type comes from the observation that, often, the set of relevant points in a high-dimensional space (such as the 'per-pixel' activations inside a conv net) actually lives on a lower-dimensional manifold. So, theoretically, and naively, one could just try to use lower-dimensional internal representations to match the dimensionality of that assumed manifold. However, the authors argue that ReLU non-linearities kill information (because of the region where all inputs are mapped to zero), and so having layers contain only the number of dimensions needed for the manifold would mean that you end up with too few dimensions after the ReLU information loss. At the same time, you need to have non-linearities somewhere in the network in order to be able to learn complex, non-linear functions. So, the authors suggest a method to mostly use smaller-dimensional representations internally, but still maintain ReLUs and the network's needed complexity.

https://i.imgur.com/pN4d9Wi.png

- A lower-dimensional output is "projected up" into a higher-dimensional output
- A ReLU is applied on this higher-dimensional layer
- That layer is then projected down into a smaller-dimensional layer, which uses a linear activation to avoid information loss
- A residual connection is added between the lower-dimensional outputs at the beginning and end of the expansion

This way, we still maintain the network's non-linearity, but also replace some of the network's higher-dimensional layers with lower-dimensional linear ones.
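The four steps can be sketched for a single pixel's channel vector, treating each 1x1 convolution as a matrix-vector product. This is a simplified illustration under assumptions: the depthwise convolution that sits in the expanded layer is omitted, a plain ReLU is used, and the weight matrices are hand-picked toy values (hypothetical, not learned) so that the effect on information loss is easy to see.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(x):
    return [max(0.0, v) for v in x]


def inverted_residual(x, W_expand, W_project):
    expanded = relu(matvec(W_expand, x))          # project up, then ReLU
    projected = matvec(W_project, expanded)       # project down, linear
    return [a + b for a, b in zip(x, projected)]  # residual connection


# Toy weights: expand 2 channels to 4 (each value and its negation),
# then project back down to 2 channels.
W_expand = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
W_project = [[1.0, -1.0, 0.0, 0.0], [0.0, 0.0, 1.0, -1.0]]

x = [1.0, -2.0]

relu_in_low_dim = relu(x)  # the negative channel is lost
recovered = matvec(W_project, relu(matvec(W_expand, x)))
out = inverted_residual(x, W_expand, W_project)
```

Applying the ReLU directly in the 2-channel space destroys the negative channel, whereas applying it in the expanded 4-channel space and projecting down linearly lets the block pass the full input through, which is the intuition behind keeping the narrow layers linear.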