Welcome to ShortScience.org!

- ShortScience.org is a platform for post-publication discussion aiming to improve accessibility and reproducibility of research ideas.

- The website has 1584 public summaries, mostly in machine learning, written by the community and organized by paper, conference, and year.

- Reading summaries of papers is useful to obtain the perspective and insight of another reader, why they liked or disliked it, and their attempt to demystify complicated sections.

- Also, writing summaries is a good exercise to understand the content of a paper because you are forced to challenge your assumptions when explaining it.

- Finally, you can keep up to date with the flood of research by reading the latest summaries on our Twitter and Facebook pages.

Generating Images with Perceptual Similarity Metrics based on Deep Networks

Alexey Dosovitskiy and Thomas Brox

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG, cs.CV, cs.NE

First published: 2016/02/08 (8 years ago)

Abstract: Image-generating machine learning models are typically trained with loss functions based on distance in the image space. This often leads to over-smoothed results. We propose a class of loss functions, which we call deep perceptual similarity metrics (DeePSiM), that mitigate this problem. Instead of computing distances in the image space, we compute distances between image features extracted by deep neural networks. This metric better reflects perceptually similarity of images and thus leads to better results. We show three applications: autoencoder training, a modification of a variational autoencoder, and inversion of deep convolutional networks. In all cases, the generated images look sharp and resemble natural images.


* The authors define in this paper a special loss function (DeePSiM), mostly for autoencoders.

* Usually one would use MSE or Euclidean distance in pixel space as the loss function for an autoencoder. But that loss function tends to produce blurry reconstructed images.

* They add two new ingredients to the loss function, which result in significantly sharper-looking images.

### How

* Their loss function has three components:

  * Euclidean distance in image space (i.e. pixel distance between reconstructed image and original image, as usually used in autoencoders)

  * Euclidean distance in feature space. Another pretrained neural net (e.g. VGG, AlexNet, ...) is used to extract features from the original and the reconstructed image. Then the euclidean distance between both vectors is measured.

  * Adversarial loss, as usually used in GANs (generative adversarial networks). The autoencoder is here treated as the GAN-Generator. Then a second network, the GAN-Discriminator is introduced. They are trained in the typical GAN-fashion. The loss component for DeePSiM is the loss of the Discriminator. I.e. when reconstructing an image, the autoencoder would learn to reconstruct it in a way that lets the Discriminator believe that the image is real.

* Using the loss in feature space alone would not be enough as that tends to lead to overpronounced high frequency components in the image (i.e. too strong edges, corners, other artefacts).

* To decrease these high frequency components, a "natural image prior" is usually used. Other papers define some function by hand. This paper uses the adversarial loss for that (i.e. learns a good prior).

* Instead of training a full autoencoder (encoder + decoder) it is also possible to only train a decoder and feed features - e.g. extracted via AlexNet - into the decoder.
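
The three-part loss described above can be sketched in plain Python (a real implementation would use a deep learning framework). This is only a sketch under stated assumptions: the function names, the weights `w_feat`, `w_adv`, `w_img`, and `toy_comparator` (a tiny stand-in for a pretrained network such as AlexNet) are hypothetical, and the adversarial term uses the common generator loss `-log D(G(x))`.

```python
import math


def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def deepsim_generator_loss(recon, target, comparator, d_prob_real,
                           w_feat=1.0, w_adv=1.0, w_img=1.0):
    """Weighted sum of feature, adversarial and pixel-space losses.

    recon, target: flattened images (lists of floats).
    comparator:    function mapping an image to a feature vector.
    d_prob_real:   discriminator's probability that `recon` is a real image.
    The weights are illustrative placeholders, not values from the paper.
    """
    loss_img = squared_l2(recon, target)
    loss_feat = squared_l2(comparator(recon), comparator(target))
    loss_adv = -math.log(d_prob_real)  # small when the discriminator is fooled
    return w_feat * loss_feat + w_adv * loss_adv + w_img * loss_img


def toy_comparator(image):
    """Hypothetical feature extractor: mean of each adjacent pixel pair."""
    return [(image[i] + image[i + 1]) / 2.0 for i in range(0, len(image) - 1, 2)]


target = [0.0, 1.0, 1.0, 0.0]
recon = [0.5, 0.5, 0.5, 0.5]  # a "blurry" reconstruction
loss = deepsim_generator_loss(recon, target, toy_comparator, d_prob_real=0.5)
perfect = deepsim_generator_loss(target, target, toy_comparator, d_prob_real=1.0)
```

In this toy example the blurry reconstruction and the target map to the same features, so the feature term is zero and only the pixel and adversarial terms penalize the blur. That is a small-scale picture of why the feature loss is not used on its own.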

### Results

* Using the DeePSiM loss with a normal autoencoder results in sharp reconstructed images.

* Using the DeePSiM loss with a VAE to generate ILSVRC-2012 images results in sharp images, which are locally sound, but globally don't make sense. Simple euclidean distance loss results in blurry images.

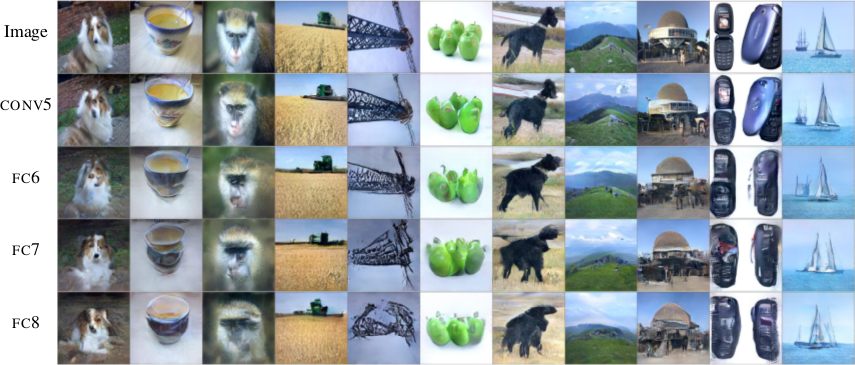

* Using the DeePSiM loss when feeding only features of an image (extracted via AlexNet) into the decoder leads to high quality reconstructions. Features from early layers will lead to more exact reconstructions.

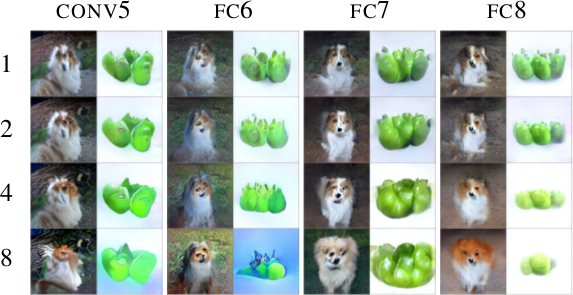

* One can again feed extracted features into the network, but then take the reconstructed image, extract features of that image and feed them back into the network. When using DeePSiM, even after several iterations of that process the images still remain semantically similar, while their exact appearance changes (e.g. a dog's fur color might change, counts of visible objects change).

*Images generated with a VAE using DeePSiM loss.*

*Images reconstructed from features fed into the network. Different AlexNet layers (conv5 - fc8) were used to generate the features. Earlier layers allow more exact reconstruction.*

*First, images are reconstructed from features (AlexNet, layers conv5 - fc8 as columns). Then, features of the reconstructed images are fed back into the network. That is repeated up to 8 times (rows). Images stay semantically similar, but their appearance changes.*

--------------------

### Rough chapter-wise notes

* (1) Introduction

  * Using MSE or Euclidean distance in pixel space for image generation (e.g. autoencoders) often results in blurry images.

  * They suggest a better loss function that cares about the existence of features, but not as much about their exact translation, rotation or other local statistics.

  * Their loss function is based on distances in suitable feature spaces.

  * They use ConvNets to generate those feature spaces, as these networks are sensitive towards important changes (e.g. edges) and insensitive towards unimportant changes (e.g. translation).

  * However, naively using the ConvNet features does not yield good results, because the networks tend to project very different images onto the same feature vectors (i.e. they are contractive). That leads to artefacts in the generated images.

  * Instead, they combine the feature based loss with GANs (adversarial loss). The adversarial loss decreases the negative effects of the feature loss ("natural image prior").

* (3) Model

  * A typical choice for the loss function in image generation tasks (e.g. when using an autoencoder) would be squared euclidean/L2 loss or L1 loss.

  * They suggest a new class of losses called "DeePSiM".

  * We have a Generator `G`, a Discriminator `D`, a feature extractor `C` (the "comparator"; takes an image, outputs the features of that image), one (or more) input images `x` and one (or more) target images `y`. Input and target image can be identical.

  * The total DeePSiM loss is a weighted sum of three components:

    * Feature loss: Squared euclidean distance between the features of (1) the input after being fed through G and (2) the target image, i.e. `||C(G(x))-C(y)||^2_2`.

    * Adversarial loss: A discriminator is introduced to estimate the "fakeness" of images generated by the generator. The losses for D and G are the standard GAN losses.

    * Pixel space loss: Classic squared euclidean distance (as commonly used in autoencoders). They found that this loss stabilized their adversarial training.

  * The feature loss alone would create high frequency artefacts in the generated image, which is why a second loss ("natural image prior") is needed. The adversarial loss fulfills that role.

  * Architectures

    * Generator (G):

      * They define different ones based on the task.

      * They all use up-convolutions, which they implement by stacking two layers: (1) a linear upsampling layer, then (2) a normal convolutional layer.

      * They use leaky ReLUs (alpha=0.3).

    * Comparators (C):

      * They use variations of AlexNet and Exemplar-CNN.

      * They extract the features from different layers, depending on the experiment.

    * Discriminator (D):

      * 5 convolutions (with some striding; 7x7 then 5x5, afterwards 3x3), into average pooling, then dropout, then 2x linear, then 2-way softmax.

  * Training details

    * They use Adam with learning rate 0.0002 and normal momentums (0.9 and 0.999).

    * They temporarily stop the discriminator training when it gets too good.

    * Batch size was 64.

    * 500k to 1000k batches per training.

* (4) Experiments

  * Autoencoder

    * Simple autoencoder with an 8x8x8 code layer between encoder and decoder (i.e. 512 values, versus 64x64x3 = 12288 values in an input crop).

    * Encoder has a few convolutions, decoder a few up-convolutions (linear upsampling + convolution).

    * They train on STL-10 (96x96) and take random 64x64 crops.

    * Using AlexNet for C tends to break small structural details, while using Exemplar-CNN breaks color details.

    * The autoencoder with their loss tends to produce less blurry images than the common L2 and L1 based losses.

    * Training an SVM on the 8x8x8 hidden layer performs significantly better with their loss than with L2/L1. That indicates potential for unsupervised learning.

  * Variational Autoencoder

    * They replace part of the standard VAE loss with their DeePSiM loss (keeping the KL divergence term).

    * Everything else is just like in a standard VAE.

    * Samples generated by a VAE with normal loss function look very blurry. Samples generated with their loss function look crisp and have locally sound statistics, but still (globally) don't really make any sense.

  * Inverting AlexNet

    * Assume the following variables:

      * I: An image

      * ConvNet: A convolutional network

      * F: The features extracted by a ConvNet, i.e. ConvNet(I) (features in all layers, not just the last one)

    * Then you can invert the representation of a network in two ways:

      * (1) An inversion that takes an F and returns roughly the I that resulted in F (it's *not* key here that ConvNet(reconstructed I) returns the same F again).

      * (2) An inversion that takes an F and projects it to *some* I so that ConvNet(I) returns roughly the same F again.

    * Similar to the autoencoder cases, they define a decoder, but no encoder.

    * They feed into the decoder a feature representation of an image. The features are extracted using AlexNet (they try the features from different layers).

    * The decoder has to reconstruct the original image (i.e. inversion scenario 1). They use their DeePSiM loss during the training.

    * The images can be reconstructed quite well from the last convolutional layer in AlexNet. Choosing the later fully connected layers results in more errors (specifically in the case of the very last layer).

    * They also try their luck with the inversion scenario (2), but didn't succeed (as their loss function does not care about diversity).

    * They iteratively encode and decode the same image multiple times (probably means: image -> features via AlexNet -> decode -> reconstructed image -> features via AlexNet -> decode -> ...). They observe that the image does not get "destroyed", but rather changes semantically, e.g. three apples might turn to one after several steps.

    * They interpolate between images. The interpolations are smooth.


Group Normalization

Yuxin Wu and Kaiming He

arXiv e-Print archive - 2018 via Local arXiv

Keywords: cs.CV, cs.LG

First published: 2018/03/22 (6 years ago)

Abstract: Batch Normalization (BN) is a milestone technique in the development of deep learning, enabling various networks to train. However, normalizing along the batch dimension introduces problems --- BN's error increases rapidly when the batch size becomes smaller, caused by inaccurate batch statistics estimation. This limits BN's usage for training larger models and transferring features to computer vision tasks including detection, segmentation, and video, which require small batches constrained by memory consumption. In this paper, we present Group Normalization (GN) as a simple alternative to BN. GN divides the channels into groups and computes within each group the mean and variance for normalization. GN's computation is independent of batch sizes, and its accuracy is stable in a wide range of batch sizes. On ResNet-50 trained in ImageNet, GN has 10.6% lower error than its BN counterpart when using a batch size of 2; when using typical batch sizes, GN is comparably good with BN and outperforms other normalization variants. Moreover, GN can be naturally transferred from pre-training to fine-tuning. GN can outperform its BN-based counterparts for object detection and segmentation in COCO, and for video classification in Kinetics, showing that GN can effectively replace the powerful BN in a variety of tasks. GN can be easily implemented by a few lines of code in modern libraries.


If you were to survey researchers, and ask them to name the 5 most broadly influential ideas in Machine Learning from the last 5 years, I’d bet good money that Batch Normalization would be somewhere on everyone’s lists. Before Batch Norm, training meaningfully deep neural networks was an unstable process, and one that often took a long time to converge to success. When we added Batch Norm to models, it allowed us to increase our learning rates substantially (leading to quicker training) without the risk of activations either collapsing or blowing up in values.

It had this effect because it addressed one of the key difficulties of deep networks: internal covariate shift. To understand this, imagine the smaller problem of a one-layer model that’s trying to classify based on a set of input features. Now, imagine that, over the course of training, the input distribution of features moved around, so that, perhaps, a value that was at the 70th percentile of the data distribution initially is now at the 30th. We have an obvious intuition that this would make the model quite hard to train, because it would learn some mapping between feature values and class at the beginning of training, but that would become invalid by the end. This is, fundamentally, the problem faced by higher layers of deep networks, since, if the distribution of activations in a lower layer changes even by a small amount, that can cause a “butterfly effect” style outcome, where the activation distributions of higher layers change more dramatically. Batch Normalization - which takes each feature “channel” a network learns, and normalizes [normalize = subtract mean, divide by standard deviation] it by the mean and variance of that feature over spatial locations and over all the observations in a given batch - helps solve this problem because it ensures that, throughout the course of training, the distribution of inputs that a given layer sees stays roughly constant, no matter what the lower layers get up to.

On the whole, Batch Norm has been wildly successful at stabilizing training, and is now canonized - along with the likes of ReLU and Dropout - as one of the default sensible training procedures for any given network. However, it does have its difficulties and downsides. A salient one comes about when you train using very small batch sizes - in the range of 2-16 examples per batch. Under these circumstances, the mean and variance calculated off of that batch are noisy and high variance (for the general reason that statistics calculated off of small sample sizes are noisy and high variance), which takes away from the stability that Batch Norm is trying to provide.

One proposed alternative to Batch Norm, that doesn’t run into this problem of small sample sizes, is Layer Normalization. This operates under the assumption that the activations of all feature “channels” within a given layer hopefully have roughly similar distributions, and, so, you can normalize all of them by taking the aggregate mean and variance over all channels, *for a given observation*, and use those as the mean and variance you normalize by. Because there are typically many channels in a given layer, this means that you have many “samples” that go into the mean and variance. However, this assumption - that the distributions for each feature channel are roughly the same - can be an incorrect one.

A useful model I have for thinking about the distinction between these two approaches is the idea that both are calculating approximations of an underlying abstract notion: the in-the-limit mean and variance of a single feature channel, at a given point in time. Batch Normalization is an approximation of that insofar as it only has a small sample of points to work with, and so its estimate will tend to be high variance. Layer Normalization is an approximation insofar as it makes the assumption that feature distributions are aligned across channels: if this turns out not to be the case, individual channels will have normalizations that are biased, due to being pulled towards the mean and variance calculated over an aggregate of channels that are different than them.

Group Norm tries to find a balance point between these two approaches, one that uses multiple channels, and normalizes within a given instance (to avoid the problems of small batch size), but, instead of calculating the mean and variance over all channels, calculates them over a group of channels that represents a subset. The inspiration for this idea comes from the fact that, in old school computer vision, it was typical to have parts of your feature vector that - for example - represented a histogram of some value (say: localized contrast) over the image. These multiple values all corresponded to a larger shared “group” feature. If a group of features all represent a similar idea, then their distributions will be more likely to be aligned, and therefore you have less of the bias issue.

One confusing element of this paper for me was that the motivation part of the paper strongly implied that the reason group norm is sensible is that you are able to combine statistically dependent channels into a group together. However, as far as I can tell, there’s no actual clustering or similarity analysis of channels that is done to place certain channels into certain groups; it’s just done semi-randomly based on the index location within the feature channel vector. So, under this implementation, it seems like the benefits of group norm are less because of any explicit seeking out of dependent channels, and more that just having fewer channels in each group means that each individual channel makes up more of the weight in its group, which does something to reduce the bias effect anyway.

The upshot of the Group Norm paper, results-wise, is that Group Norm performs better than both Batch Norm and Layer Norm at very low batch sizes. This is useful if you’re training on very dense data (e.g. high res video), where it might be difficult to store more than a few observations in memory at a time. However, once you get to batch sizes of ~24, Batch Norm starts to do better, presumably since that’s a large enough sample size to reduce variance, and you get to the point where the variance of BN is preferable to the bias of GN.
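
To make the grouping concrete, here is a minimal sketch of the normalization step for a single observation, in plain Python rather than an array library. The function name, the nested-list layout (`channels[c]` holds the spatial activations of channel `c`) and the `eps` value are assumptions for illustration, and the learned per-channel scale and shift that normally follow the normalization are left out. Channels are grouped purely by index, as described above.

```python
import math


def group_norm(channels, num_groups, eps=1e-5):
    """Normalize one observation's channels group by group.

    channels:   list of C channels, each a list of spatial activations.
    num_groups: number of groups; consecutive channels share a group.
    Statistics are computed per group within this single observation,
    so the result does not depend on the batch size at all.
    """
    num_channels = len(channels)
    if num_channels % num_groups != 0:
        raise ValueError("number of channels must be divisible by num_groups")
    per_group = num_channels // num_groups

    normalized = []
    for start in range(0, num_channels, per_group):
        group = channels[start:start + per_group]
        values = [v for channel in group for v in channel]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)  # divide by the standard deviation
        normalized.extend([[(v - mean) * scale for v in channel]
                           for channel in group])
    return normalized


# Four channels with two spatial positions each, split into two groups.
example = [[1.0, 3.0], [5.0, 7.0], [10.0, 10.0], [20.0, 20.0]]
normalized = group_norm(example, num_groups=2)
```

With `num_groups=1` the same function computes Layer Norm style statistics over all channels, and with `num_groups` equal to the number of channels every channel is normalized on its own, so the group count is the knob that trades off the bias and variance discussed above.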

Progressive Neural Networks

Andrei A. Rusu and Neil C. Rabinowitz and Guillaume Desjardins and Hubert Soyer and James Kirkpatrick and Koray Kavukcuoglu and Razvan Pascanu and Raia Hadsell

arXiv e-Print archive - 2016 via Local arXiv

Keywords: cs.LG

First published: 2016/06/15 (7 years ago)

Abstract: Learning to solve complex sequences of tasks--while both leveraging transfer and avoiding catastrophic forgetting--remains a key obstacle to achieving human-level intelligence. The progressive networks approach represents a step forward in this direction: they are immune to forgetting and can leverage prior knowledge via lateral connections to previously learned features. We evaluate this architecture extensively on a wide variety of reinforcement learning tasks (Atari and 3D maze games), and show that it outperforms common baselines based on pretraining and finetuning. Using a novel sensitivity measure, we demonstrate that transfer occurs at both low-level sensory and high-level control layers of the learned policy.


TLDR; The authors propose Progressive Neural Networks (ProgNN), a new way to do transfer learning without forgetting prior knowledge (as happens in finetuning). ProgNNs train a neural network on task 1, freeze the parameters, and then train a new network on task 2 while introducing lateral connections and adapter functions from network 1 to network 2. This process can be repeated with further columns (networks). The authors evaluate ProgNNs on 3 RL tasks and find that they outperform finetuning-based approaches.

#### Key Points

- Finetuning is a destructive process that forgets previous knowledge. We don't want that.
- Layer h_k in network 3 gets additional lateral connections from layers h_(k-1) in network 2 and network 1. Parameters of those connections are learned, but network 2 and network 1 are frozen during training of network 3.
- Downside: Number of parameters grows quadratically with the number of tasks. Paper discusses some approaches to address the problem, but not sure how well these work in practice.
- Metric: AUC (Average score per episode during training) as opposed to final score. Transfer score = Relative performance compared with single net baseline.
- Authors use Average Perturbation Sensitivity (APS) and Average Fisher Sensitivity (AFS) to analyze which features/layers from previous networks are actually used in the newly trained network.
- Experiment 1: Variations of Pong game. Baseline that finetunes only final layer fails to learn. ProgNN beats other baselines and APS shows re-use of knowledge.
- Experiment 2: Different Atari games. ProgNets result in positive transfer 8/12 times, negative transfer 2/12 times. Negative transfer may be a result of optimization problems. Finetuning final layers fails again. ProgNN beats other approaches.
- Experiment 3: Labyrinth, 3D Maze. Pretty much same result as other experiments.

#### Notes

- It seems like the assumption is that layer k always wants to transfer knowledge from layer (k-1). But why is that true? Networks are trained on different tasks, so the layer representations, or even numbers of layers, may be completely different. And once you introduce lateral connections from all layers to all other layers the approach no longer scales.
- Old tasks cannot learn from new tasks. Unlike humans.
- Gating or residuals for lateral connections could make sense to allow the network to "easily" re-use previously learned knowledge.
- Why use AUC metric? I also would've liked to see the final score. Maybe there's a good reason for this, but the paper doesn't explain.
- Scary that finetuning the final layer only fails in most experiments. That's a very commonly used approach in non-RL domains.
- Someone should try this on non-RL tasks.
- What happens to training time and optimization difficulty as you add more columns? Seems prohibitively expensive.
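
The lateral-connection idea can be illustrated with a small sketch in plain Python. It is a simplification under stated assumptions: the function names and toy weights are hypothetical, the lateral connections are plain linear maps (the adapter functions mentioned above are omitted), and ReLU is assumed as the non-linearity.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def progressive_layer(own_prev, frozen_prevs, own_weights, lateral_weights):
    """Compute layer h_k of the newest column.

    own_prev:        h_(k-1) of the column currently being trained.
    frozen_prevs:    h_(k-1) of each earlier, frozen column.
    own_weights:     trainable weights inside the new column.
    lateral_weights: one trainable matrix per frozen column.
    """
    pre_activation = matvec(own_weights, own_prev)
    for lateral, prev in zip(lateral_weights, frozen_prevs):
        contribution = matvec(lateral, prev)
        pre_activation = [p + c for p, c in zip(pre_activation, contribution)]
    return [max(0.0, p) for p in pre_activation]  # ReLU (assumed)


def lateral_link_count(num_columns):
    """Lateral links per layer: column j receives one from each earlier column."""
    return num_columns * (num_columns - 1) // 2


# Column 3 reading from two frozen columns with different layer widths.
h_new = progressive_layer(
    own_prev=[1.0, 2.0],
    frozen_prevs=[[2.0], [1.0, 1.0]],
    own_weights=[[1.0, 0.0], [0.0, -1.0]],
    lateral_weights=[[[0.5], [1.0]], [[0.0, 0.0], [1.0, 0.5]]],
)
```

`lateral_link_count` grows as K(K-1)/2 in the number of columns K, which is the quadratic growth noted in the key points.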

Formal Guarantees on the Robustness of a Classifier against Adversarial Manipulation.

Matthias Hein and Maksym Andriushchenko

Neural Information Processing Systems Conference - 2017 via Local dblp

Keywords:


Hein and Andriushchenko give an intuitive bound on the robustness of neural networks based on the local Lipschitz constant. By robustness, the authors refer to a small $\epsilon$-ball around each sample; this ball is supposed to describe the region where the neural network predicts a constant class. This means that adversarial examples have to apply changes large enough to leave these robust regions. Larger $\epsilon$-balls imply higher robustness to adversarial examples.

When considering a single example $x$, and a classifier $f = (f_1, \ldots, f_K)^T$ (i.e. in a multi-class setting), the bound can be stated as follows. For $q$ and $p$ such that $\frac{1}{q} + \frac{1}{p} = 1$ and $c$ being the class predicted for $x$, it holds that

$c = \arg\max_j f_j(x + \delta)$

for all $\delta$ with

$\|\delta\|_p \leq \max_{R > 0}\min \left\{\min_{j \neq c} \frac{f_c(x) - f_j(x)}{\max_{y \in B_p(x, R)} \|\nabla f_c(y) - \nabla f_j(y)\|_q}, R\right\}$.

Here, $B_p(x, R)$ describes the $R$-ball around $x$ measured using the $p$-norm. Based on the local Lipschitz constant (in the denominator), the bound essentially measures how far we can deviate from the sample $x$ (measured in the $p$-norm) until $f_j(x + \delta) > f_c(x + \delta)$ for some $j \neq c$. The higher the local Lipschitz constant, the smaller the deviations that are allowed, i.e. adversarial examples are easier to find. Note that the bound also depends on the confidence, i.e. the margin that $f_c(x)$ has over all other $f_j(x)$.
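
For a linear classifier $f_j(x) = \langle w_j, x \rangle + b_j$ the gradients are constant, so the inner maximum does not depend on $R$ and the bound reduces to $\min_{j \neq c} (f_c(x) - f_j(x)) / \|w_c - w_j\|_q$. The following is a minimal sketch of that special case for $p = q = 2$; the function name and the toy weights are assumptions for illustration, not code from the paper.

```python
import math


def linear_robust_radius(weights, biases, x):
    """Return (predicted class c, guaranteed l2 radius) for a linear classifier.

    weights[j] and biases[j] define f_j(x) = <w_j, x> + b_j.
    Any perturbation with l2 norm up to the returned radius keeps class c.
    """
    scores = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]
    c = max(range(len(scores)), key=scores.__getitem__)

    radius = math.inf
    for j, row in enumerate(weights):
        if j == c:
            continue
        # Norm of the (constant) gradient difference between class c and j.
        diff_norm = math.sqrt(sum((wc - wj) ** 2
                                  for wc, wj in zip(weights[c], row)))
        if diff_norm == 0.0:
            continue  # identical weights: the margin never changes
        radius = min(radius, (scores[c] - scores[j]) / diff_norm)
    return c, radius


predicted, radius = linear_robust_radius(
    weights=[[1.0, 0.0], [0.0, 1.0]], biases=[0.0, 0.0], x=[3.0, 1.0])
```

Here the margin is 2 and the weight difference has norm $\sqrt{2}$, so the guaranteed radius is $\sqrt{2}$: a larger margin or a smaller gradient difference both increase it.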

In the remainder of the paper, the authors also provide bounds for simple classifiers including linear classifiers, kernel methods and two-layer perceptrons (i.e. one hidden layer). For the latter, they also propose a new type of regularization called cross-Lipschitz regularization:

$P(f) = \frac{1}{nK^2} \sum_{i = 1}^n \sum_{l,m = 1}^K \|\nabla f_l(x_i) - \nabla f_m(x_i)\|_2^2$.

This regularization term is intended to reduce the Lipschitz constant locally around training examples. They show experimental results using this regularization on MNIST and CIFAR, see the paper for details.
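
As a rough illustration of the regularizer $P(f)$, the sketch below evaluates the double sum for gradients that are already given. The function name and input layout are assumptions; in practice the input gradients $\nabla f_l(x_i)$ would come from automatic differentiation, which is outside the scope of this sketch.

```python
def cross_lipschitz_penalty(gradients):
    """Evaluate P(f) from precomputed input gradients.

    gradients[i][l] is the gradient of f_l at training point x_i,
    given as a list of floats (one entry per input dimension).
    """
    n = len(gradients)
    num_classes = len(gradients[0])
    total = 0.0
    for per_example in gradients:
        for grad_l in per_example:
            for grad_m in per_example:
                total += sum((a - b) ** 2 for a, b in zip(grad_l, grad_m))
    return total / (n * num_classes ** 2)


# One training point, two classes with orthogonal unit gradients.
penalty = cross_lipschitz_penalty([[[1.0, 0.0], [0.0, 1.0]]])
```

The penalty is zero exactly when all class outputs have identical input gradients at every training point, i.e. when small input changes cannot alter the differences between class scores to first order.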

Also view this summary at [davidstutz.de](https://davidstutz.de/category/reading/).


Explaining and Harnessing Adversarial Examples

Ian J. Goodfellow and Jonathon Shlens and Christian Szegedy

arXiv e-Print archive - 2014 via Local arXiv

Keywords: stat.ML, cs.LG

First published: 2014/12/20 (9 years ago)

Abstract: Several machine learning models, including neural networks, consistently misclassify adversarial examples---inputs formed by applying small but intentionally worst-case perturbations to examples from the dataset, such that the perturbed input results in the model outputting an incorrect answer with high confidence. Early attempts at explaining this phenomenon focused on nonlinearity and overfitting. We argue instead that the primary cause of neural networks' vulnerability to adversarial perturbation is their linear nature. This explanation is supported by new quantitative results while giving the first explanation of the most intriguing fact about them: their generalization across architectures and training sets. Moreover, this view yields a simple and fast method of generating adversarial examples. Using this approach to provide examples for adversarial training, we reduce the test set error of a maxout network on the MNIST dataset.


#### Problem addressed:

A fast way of finding adversarial examples, and a hypothesis explaining why adversarial examples exist

#### Summary:

This paper tries to explain why adversarial examples exist; adversarial examples were defined in an earlier paper \cite{arxiv.org/abs/1312.6199}. Adversarial examples are counterintuitive because they are normally visually indistinguishable from the original example, yet lead to very different predictions from the classifier. For example, let sample $x$ be associated with the true class $t$. A classifier (in particular a well-trained DNN) can correctly classify $x$ with high confidence, but with a small perturbation $r$, the same network will assign $x+r$ to a different, incorrect class, also with high confidence.

This paper argues that the existence of such adversarial examples is due to the overly linear behaviour of models in high-dimensional spaces rather than to overfitting, and provides some empirical support for that. It also presents a new method, the `fast sign' method (called the fast gradient sign method in the paper), that can reliably generate adversarial examples very quickly. Basically, one can generate an adversarial example by taking a small step in the direction of the sign of the gradient of the objective with respect to the input. They also show that training on adversarial examples alongside the clean data helps the classifier to generalize.
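
The fast sign step, $x_{adv} = x + \epsilon \cdot \mathrm{sign}(\nabla_x J(\theta, x, y))$, can be sketched for a model whose input gradient is available in closed form. The sketch below uses binary logistic regression as a stand-in; the function names, weights and $\epsilon$ are assumptions chosen for illustration, not the networks or settings used in the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fast_sign_attack(x, y, w, b, eps):
    """One fast-sign step against logistic regression with cross-entropy loss.

    For p = sigmoid(<w, x> + b) and label y in {0, 1}, the gradient of the
    loss with respect to the input is (p - y) * w.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]


w, b = [2.0, -1.0], 0.0
x, y = [1.0, 1.0], 1
x_adv = fast_sign_attack(x, y, w, b, eps=0.5)

p_clean = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
p_adv = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
```

In this toy case every input coordinate moves by exactly `eps`, yet the predicted probability of the true class drops from above 0.5 to below 0.5. Because the change in the logit is `eps` times the sum of the absolute weights, the effect grows with the input dimension, which is the linearity argument in miniature.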

#### Novelty:

A fast method to generate adversarial examples reliably, and a linear hypothesis for those examples.

#### Datasets:

MNIST

#### Resources:

Talk of the paper https://www.youtube.com/watch?v=Pq4A2mPCB0Y

#### Presenter:

Yingbo Zhou
